Containers have moved from early-adopter novelty to mainstream infrastructure. In the Cloud Native Computing Foundation’s (CNCF) most recent survey, well over ninety percent of enterprise respondents run containers in production.

For technology leaders, that statistic matters because containerization is now one of the most dependable ways to ship reliable software at scale. A container bundles an application with everything it needs to run, so the build that passes QA on Friday is the exact build that goes live on Monday, which removes most “works on my machine” surprises.

The Promise of Containerized Applications

That consistency does wonders for Continuous Integration and Continuous Delivery. Your teams can push smaller changes more often, reduce risk, and respond to market shifts without the friction of traditional release cycles.

The flip side is operational complexity. Dozens or thousands of deployed containers must start, stop, scale, heal, and talk to one another across multiple clouds or data centers. That orchestration challenge is where Kubernetes enters the picture.

Kubernetes is an open-source platform, originally created at Google and now governed by the CNCF, that automates the deployment, scaling, and ongoing management of containerized applications. In practical terms it turns a fleet of individual servers, whether on-premises blades, virtual machines in AWS, or edge devices in retail stores, into a single self-healing pool of compute that follows the policies you define.

From Nodes to Kubernetes Clusters: How the Pieces Fit

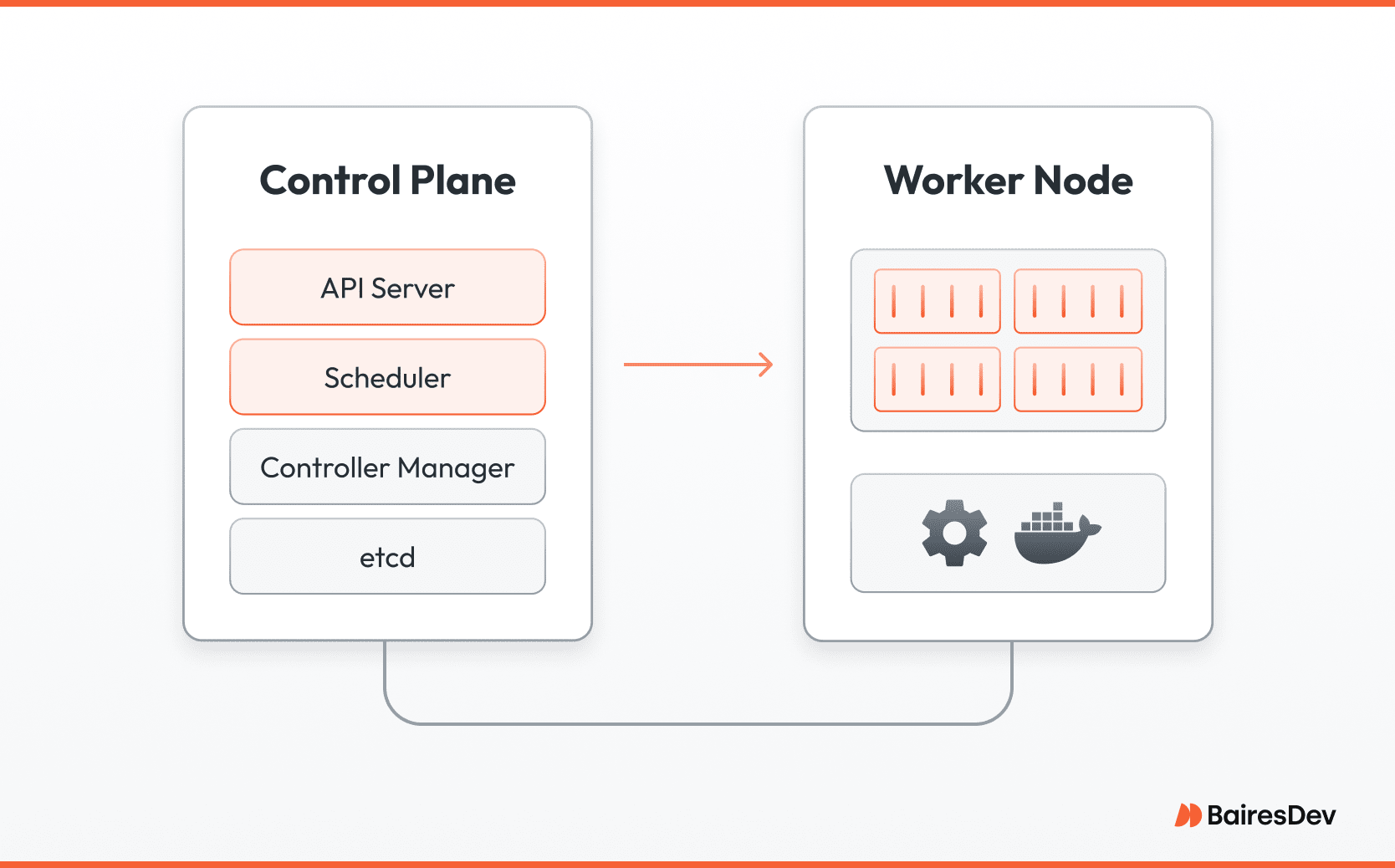

A Kubernetes cluster groups physical or virtual machines into two logical layers: the control plane and the worker nodes. The control plane hosts the API server, a scheduler that assigns workloads to nodes, and a set of controllers that ensure the real-time state of the system matches the state you declared. A distributed key-value store called etcd keeps that desired state durable and highly available.
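To make the desired-state idea concrete, here is a toy reconciliation loop. It is a sketch under simplifying assumptions, not how any real controller is written: desired state is a hypothetical map of app name to replica count, and actual state is a map of app name to running pod names. Real controllers watch the API server and act through it, but the compare-and-correct logic is the same.

```python
# Toy reconciliation loop: compare desired state with actual state and act
# on the difference. Hypothetical data model, not the Kubernetes API.
from itertools import count

_pod_ids = count(1)  # unique suffixes for newly created pod names


def reconcile(desired: dict[str, int], actual: dict[str, list[str]]) -> list[str]:
    """Move `actual` toward `desired` in place; return the actions taken."""
    actions: list[str] = []
    for app, want in desired.items():
        pods = actual.setdefault(app, [])
        while len(pods) < want:  # too few replicas: create replacements
            name = f"{app}-{next(_pod_ids)}"
            pods.append(name)
            actions.append(f"create {name}")
        while len(pods) > want:  # too many replicas: delete the extras
            actions.append(f"delete {pods.pop()}")
    for app in [a for a in actual if a not in desired]:  # no longer declared
        for name in actual.pop(app):
            actions.append(f"delete {name}")
    return actions


# One payments pod survived a node failure, but three are declared.
cluster = {"payments": ["payments-a"]}
print(reconcile({"payments": 3}, cluster))  # two "create" actions
print(reconcile({"payments": 3}, cluster))  # [] because nothing has drifted
```

The second call returns an empty list. That idempotence is what lets a controller loop indefinitely: it only acts when reality drifts from the declaration.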

Worker nodes run a lightweight agent, the kubelet, alongside a container runtime such as containerd. When the scheduler says “run three instances of the payments service,” those nodes pull the correct container image, launch it inside a pod (the smallest deployable unit in Kubernetes), and wire up networking and storage automatically. If a node fails, the control plane marks it unreachable and, for pods managed by a controller such as a Deployment, creates replacements on healthy nodes with no operator intervention. With default settings that takes a few minutes rather than seconds, which is why critical services run several replicas spread across nodes.

Specialized extensions called operators push this model further. A Kubernetes operator encodes the day-two knowledge normally held by a senior engineer: backup rotations for data stores, graceful version upgrades for a message queue, horizontal sharding rules for a time-series store. The platform applies that knowledge consistently, 24 hours a day, 7 days a week. The Prometheus Operator for monitoring and Elastic Cloud on Kubernetes (ECK) for search are two well-known examples already relied on in production.
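As an illustration of the kind of day-two rule an operator encodes, the sketch below decides which database backups to prune. The retention policy (keep the last seven daily backups plus the newest backup from each of the last four ISO weeks) and the function name are hypothetical, not taken from any particular operator.

```python
# Hypothetical backup-retention rule an operator might apply on a schedule.
from datetime import date, timedelta


def backups_to_delete(backups: list[date], keep_daily: int = 7, keep_weekly: int = 4) -> list[date]:
    """Return the backup dates that fall outside the retention policy."""
    newest_first = sorted(set(backups), reverse=True)
    keep = set(newest_first[:keep_daily])  # the most recent daily backups
    weeks_seen: list[tuple[int, int]] = []
    for day in newest_first:  # newest backup in each of the latest weeks
        week = tuple(day.isocalendar())[:2]  # (ISO year, ISO week number)
        if week in weeks_seen:
            continue
        if len(weeks_seen) == keep_weekly:
            break
        weeks_seen.append(week)
        keep.add(day)
    return sorted(day for day in newest_first if day not in keep)


# Thirty consecutive nightly backups, 1 to 30 January 2024.
history = [date(2024, 1, 1) + timedelta(days=i) for i in range(30)]
print(backups_to_delete(history))
```

An operator would run a rule like this on a schedule and delete the returned snapshots, so nobody has to remember to prune storage by hand.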

Power and Responsibility

Standing up a proof-of-concept Kubernetes cluster is easy; every major cloud offers a managed option. Running Kubernetes responsibly in production is where the learning curve appears.

Each application is typically described by a deployment manifest written in YAML. That document specifies CPU requests, memory limits, network ports, security policies, storage classes, and more. A misconfigured manifest can inflate your cloud bill or, worse, starve a revenue-critical service of resources during a traffic surge.

The larger the deployment, the more intricate those manifests become. Teams need clear standards, automated validation, and continuous cost visibility. They also need updated observability pipelines because containers are ephemeral by design. Logs, metrics, and traces must follow the workload wherever the scheduler moves it, or troubleshooting grinds to a halt.
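A minimal sketch of the automated-validation step, assuming manifests are already parsed into Python dictionaries (for example with a YAML library) and that your team's standard requires every container to declare CPU and memory requests and limits. Kubernetes itself does not require limits; this is a policy choice. The quantity parsers handle only common suffixes such as `500m` and `256Mi`.

```python
# Policy check: every container must set CPU and memory requests and limits,
# and no request may exceed its limit. Assumes a Deployment parsed to a dict.
_MEMORY_FACTORS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "k": 10**3, "M": 10**6, "G": 10**9}


def parse_cpu(quantity: str) -> float:
    """'500m' -> 0.5 cores; '2' -> 2.0 cores."""
    return int(quantity[:-1]) / 1000 if quantity.endswith("m") else float(quantity)


def parse_memory(quantity: str) -> int:
    """'256Mi' -> bytes; a bare number is already bytes."""
    for suffix, factor in _MEMORY_FACTORS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def check_containers(deployment: dict) -> list[str]:
    problems = []
    for c in deployment["spec"]["template"]["spec"]["containers"]:
        resources = c.get("resources", {})
        requests, limits = resources.get("requests", {}), resources.get("limits", {})
        for kind, parse in (("cpu", parse_cpu), ("memory", parse_memory)):
            if kind not in requests or kind not in limits:
                problems.append(f"{c['name']}: missing {kind} request or limit")
            elif parse(requests[kind]) > parse(limits[kind]):
                problems.append(f"{c['name']}: {kind} request exceeds limit")
    return problems


deployment = {
    "spec": {"template": {"spec": {"containers": [
        {"name": "payments", "image": "registry.example.com/payments:1.4.2",
         "resources": {"requests": {"cpu": "250m", "memory": "256Mi"},
                       "limits": {"cpu": "500m", "memory": "512Mi"}}},
        {"name": "log-agent", "image": "registry.example.com/log-agent:2.0"},
    ]}}}
}
print(check_containers(deployment))
# ['log-agent: missing cpu request or limit', 'log-agent: missing memory request or limit']
```

Run as a CI step, a check like this fails the pipeline before a misconfigured manifest ever reaches the cluster.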

Because of that complexity, Kubernetes success is as much about people as it is about technology. Organizations that treat it as a platform, staffed by platform engineers, site-reliability specialists, and well-trained developers, tend to see faster delivery cycles and stronger reliability. Organizations that hand it to a lone administrator often discover they have shifted risk instead of reducing it.

The Serverless Frontier

Not every team wants to think about nodes at all. Serverless Kubernetes offerings abstract the underlying machines, so you typically pay for the CPU and memory your pods request rather than for whole servers.

Frameworks such as Knative add scale-to-zero and event routing, letting developers push code and have the platform provision resources on demand. For bursty or seasonal workloads this model can cut costs dramatically, though it trades some low-level control for that convenience.

By automating the operational heavy lifting that once demanded specialized scripts and round-the-clock attention, Kubernetes frees senior engineers to focus on product features and customer value. For leaders balancing innovation with reliability and cost discipline, that benefit is a major reason Kubernetes now sits at the heart of modern software delivery.

Kubernetes Architecture

Picture your digital estate as a busy airport. The control plane is Air Traffic Control. It tracks every workload, decides which runway or server each one will use, and keeps overall traffic flowing. The worker nodes are the gates and runways where passengers actually board the planes. Each layer has a defined job, and together they create a safe, always-on environment for your applications.

Inside the control plane

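API Server: The single front door. Every command from a developer, pipeline, or monitoring tool comes through this endpoint, which authenticates and validates the request and records the result in etcd. The other control-plane components watch the API server for changes rather than talking to one another directly.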

Scheduler: Picks a node for every new pod. The Kubernetes Scheduler filters out nodes that lack the resources or break security rules and placement policies, then scores the remaining candidates and assigns the pod to the best fit.

Controller Manager: Keeps the cluster honest. Its controllers continuously compare the real-time state to the desired state stored in etcd, a fault-tolerant key–value store, and correct any drift, typically within seconds.
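The scheduler's filter-then-score approach can be sketched in a few lines. This is a simplified model with assumed field names: it checks free CPU, free memory, and a label match loosely modeled on a pod's `nodeSelector`, then prefers the node with the most CPU left over. The real kube-scheduler runs many pluggable filter and score steps.

```python
# Simplified filter-then-score scheduling for a single pod.
# Field names are assumptions for illustration, not the Kubernetes API.
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu: float      # cores not yet requested by other pods
    free_mem_gib: float  # memory not yet requested by other pods
    labels: dict[str, str] = field(default_factory=dict)


@dataclass
class PodRequest:
    cpu: float
    mem_gib: float
    node_selector: dict[str, str] = field(default_factory=dict)


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    # Filter: drop nodes without enough room or without the required labels.
    feasible = [
        n for n in nodes
        if n.free_cpu >= pod.cpu
        and n.free_mem_gib >= pod.mem_gib
        and all(n.labels.get(k) == v for k, v in pod.node_selector.items())
    ]
    if not feasible:
        return None  # the pod stays Pending until capacity appears
    # Score: prefer the node with the most CPU left after placement.
    return max(feasible, key=lambda n: n.free_cpu - pod.cpu).name


nodes = [
    Node("node-a", free_cpu=0.5, free_mem_gib=8),
    Node("node-b", free_cpu=3.0, free_mem_gib=2),
    Node("node-c", free_cpu=2.0, free_mem_gib=16, labels={"disk": "ssd"}),
]
print(schedule(PodRequest(cpu=1.0, mem_gib=4), nodes))  # node-c: a lacks CPU, b lacks memory
```

Separating the two phases keeps hard constraints (filters) from being traded off against preferences (scores), which is the same split the real scheduler makes.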

Inside a worker node

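Container Runtime: The engine that actually starts and stops containers. Most clusters today use containerd or CRI-O. Docker Engine works only through the cri-dockerd adapter, because Kubernetes removed its built-in Docker support (dockershim) in version 1.24.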

Kubelet: A lightweight agent that receives orders from the control plane, launches requested containers inside a pod, and reports back on health and performance.
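The kubelet is also what restarts a crashed container, and it waits longer after each consecutive crash. Per the Kubernetes documentation, the default delay starts at 10 seconds, doubles each time, and is capped at five minutes; the sketch below only reproduces that arithmetic. Treat the exact values as version-dependent, and note that the reset after ten minutes of healthy running is not modeled.

```python
# Default kubelet restart back-off as documented: 10 s, doubling per crash,
# capped at 300 s (5 minutes). The reset after 10 minutes of healthy running
# is not modeled, and defaults may change between Kubernetes versions.
def restart_delays(crashes: int, base_s: int = 10, cap_s: int = 300) -> list[int]:
    """Seconds the kubelet waits before each of the next `crashes` restarts."""
    return [min(base_s * 2**n, cap_s) for n in range(crashes)]


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

The growing delay is what `kubectl` reports as CrashLoopBackOff: the platform keeps trying without hammering a dependency that is already failing.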

Because every node runs the same small set of services, the cluster can grow from three servers in a lab to hundreds across multiple regions without changing its operating model. If a node drops offline, the control plane notices and reschedules its pods elsewhere; services that run replicas on several nodes keep serving traffic in the meantime, so users rarely notice.

Kubernetes also supports operators, plug-in controllers that encode day-two runbooks for complex software like databases or message queues. Instead of calling an engineer at 10 p.m. to add replicas or apply patches, a Kubernetes operator performs the task automatically according to policy.

Container Orchestration with Kubernetes

Orchestration is where the platform proves its worth to the business. A few highlights:

Service discovery: Every new Kubernetes Service gets a DNS name and a stable internal IP as soon as it is created. Developers do not exchange spreadsheets of port numbers, and rollouts no longer break because of outdated endpoints.

Load balancing: Traffic is distributed across healthy instances, which protects customer experience during peak demand. When a pod fails its readiness probe or is deleted, it is removed from the Service's endpoints, so new requests stop going to it.

Persistent storage: Kubernetes links containers to block, file, or object storage without exposing the underlying hardware. Data on a network-attached persistent volume stays put even if the pod that uses it moves to a different node.

Self-healing: If a process crashes or a node disappears, Kubernetes restarts the workload automatically. That reduction in manual intervention is a direct lift to uptime and to the productivity of senior engineers.
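Here is a sketch of what service discovery and load balancing give a client, under stated assumptions: the DNS pattern uses `cluster.local`, the common default cluster domain, and balancing is shown as round-robin over ready endpoints for readability. In practice, kube-proxy in iptables mode picks a backend at random, and other proxy modes and service meshes use their own algorithms.

```python
# Service discovery and load balancing, reduced to their essentials.
# Round-robin is an illustrative choice; kube-proxy's iptables mode picks
# backends at random. "cluster.local" is the usual default cluster domain.
from dataclasses import dataclass


def service_dns_name(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    return f"{service}.{namespace}.svc.{cluster_domain}"


@dataclass
class Endpoint:
    ip: str
    ready: bool  # mirrors the pod's readiness probe result


class RoundRobin:
    def __init__(self, endpoints: list[Endpoint]) -> None:
        self.endpoints = endpoints
        self._turn = 0

    def next_ip(self) -> str:
        ready = [e for e in self.endpoints if e.ready]  # skip unready pods
        if not ready:
            raise RuntimeError("no ready endpoints behind this Service")
        ip = ready[self._turn % len(ready)].ip
        self._turn += 1
        return ip


print(service_dns_name("payments", "shop"))  # payments.shop.svc.cluster.local
pods = [Endpoint("10.0.0.11", True), Endpoint("10.0.0.12", True), Endpoint("10.0.0.13", True)]
lb = RoundRobin(pods)
pods[1].ready = False  # this pod starts failing its readiness probe
print([lb.next_ip() for _ in range(4)])  # only .11 and .13 receive traffic
```

Clients only ever see the DNS name; which pods sit behind it, and which of them are ready, can change freely.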

Collectively, these capabilities drastically simplify infrastructure management, allowing a small platform team to handle work that once required dozens of specialists.

Kubernetes Deployment and Management

A Kubernetes deployment begins with a manifest written in YAML. Think of the manifest as both blueprint and contract. It states how many copies of a service must run, how much CPU, memory, and storage resources each copy may consume, which container image to pull, and what security rules apply.

These manifests define Kubernetes objects, which are the building blocks of the platform. Each object represents a specific part of your application’s desired state: what should run, how it should behave, and how it should be exposed or secured. Once the manifest is applied to the cluster, Kubernetes enforces it continuously.

The core Kubernetes objects and components are:

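Deployment: Handles rolling updates and rollbacks. New versions are introduced gradually, and if new pods fail their readiness checks, the rollout stalls instead of replacing the remaining healthy pods. Reverting to the previous release takes a single command (kubectl rollout undo), and progressive-delivery tools such as Argo Rollouts or Flagger can trigger that rollback automatically when error rates climb.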

Service: Provides a stable virtual IP that fronts any set of pods. Because the address never changes, downstream applications stay connected even while pods scale up, scale down, or move.

Pod: The smallest runnable unit. One pod may host a single microservice, or it may combine closely related containers that must share resources.

Node: A physical or virtual machine that offers compute, memory, and local storage. Nodes can sit in your data center, in a public cloud, or at an edge location, yet they all follow the same operating rules.
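The gradual rollout handled by a Deployment is bounded by two settings, `maxSurge` and `maxUnavailable`, both 25% by default. Kubernetes rounds the surge up and the unavailable count down. The sketch below reproduces that arithmetic, including the rule that one pod may be unavailable when both values round to zero, so the rollout can still progress.

```python
# Pod-count bounds during a Deployment rolling update. Percentages follow
# Kubernetes' rounding: maxSurge rounds up, maxUnavailable rounds down.
def rollout_bounds(replicas: int, max_surge_pct: int = 25, max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (most pods that may exist, fewest pods that must stay available)."""
    surge = -(-replicas * max_surge_pct // 100)          # ceiling division
    unavailable = replicas * max_unavailable_pct // 100  # floor division
    if surge == 0 and unavailable == 0:
        unavailable = 1  # guarantees the rollout can still make progress
    return replicas + surge, replicas - unavailable


print(rollout_bounds(10))  # (13, 8): up to 3 extra pods, at most 2 unavailable
print(rollout_bounds(4))   # (5, 3)
```

Integer arithmetic avoids floating-point surprises when converting percentages; the defaults trade a little extra capacity during a release for never dropping far below the declared replica count.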

Modern clusters often add admission controllers, cost monitors, and policy engines. These add-ons enforce budget ceilings, ensure images come from trusted registries, and confirm that sensitive workloads land only on approved hardware, all without slowing developer throughput.
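The trusted-registries rule can be sketched as a plain function that returns an allow/deny decision for a pod spec. Policy engines such as Kyverno or OPA Gatekeeper express this declaratively rather than in Python, and the registry names here are hypothetical placeholders.

```python
# The decision behind a "trusted registries only" admission rule.
# Registry prefixes are hypothetical placeholders.
TRUSTED_REGISTRIES = ("registry.example.com/", "mirror.example.internal/")


def admit(pod_spec: dict) -> tuple[bool, list[str]]:
    """Allow the pod only if every container image uses a trusted registry."""
    containers = pod_spec.get("containers", []) + pod_spec.get("initContainers", [])
    rejected = [c["image"] for c in containers
                if not c["image"].startswith(TRUSTED_REGISTRIES)]
    return not rejected, rejected


print(admit({"containers": [
    {"name": "app", "image": "registry.example.com/payments:1.4.2"},
    {"name": "debug", "image": "docker.io/library/busybox:latest"},
]}))  # (False, ['docker.io/library/busybox:latest'])
```

Because the check runs at the API layer, a rejected pod never reaches a node, regardless of which team or pipeline submitted it.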

By abstracting day-to-day infrastructure decisions into a consistent and automated layer, Kubernetes lets engineering talent focus on revenue-driving features instead of firefighting servers. For leadership, that translates into faster delivery, higher availability, and clearer control over spend.

What Your Developers Need to Know Before You Commit to Kubernetes

A modern Kubernetes project demands more than a few CLI commands. Your engineers need a working grasp of container fundamentals (how images are built, shipped, and verified) plus comfort with Linux, because the control plane and the vast majority of worker nodes run on it. From that base they step into Kubernetes-specific disciplines: writing clean YAML manifests, setting CPU and memory requests and limits, choosing the right container runtime, and following control-group (cgroup) best practices so one service cannot starve another.

They also must learn the ecosystem that surrounds the core platform: Helm for repeatable deployments, service meshes such as Istio for zero-trust networking, and role-based access control (RBAC) plus network policies that keep production and test workloads safely separated. Observability changes as well: logs, metrics, and traces become distributed data that need a pipeline built for ephemeral workloads. Finally, automation becomes the rule, not the exception. Continuous Integration systems build and scan images, admission controllers enforce policy at the API layer, and GitOps workflows turn pull requests into production rollouts.

Most importantly, everyone on the delivery path (developers, site-reliability engineers, platform owners) needs a shared mental model. Kubernetes clusters operate on the principle of desired state. You declare how many replicas, how much compute, and which network rules to apply, then let the control plane reconcile reality against that declaration. Development and operations teams that still think in monolithic terms often over-provision, under-secure, or misplace critical data volumes.

Why the Right Team Structure Matters

Kubernetes success seldom comes from a single administrator working in isolation. Leading organizations build a cross-functional platform team that owns the cluster, publishes reusable templates, and coaches application squads on best practices. That model reduces duplication, enforces compliance, and, crucially, lets product engineers stay focused on business features rather than YAML syntax.

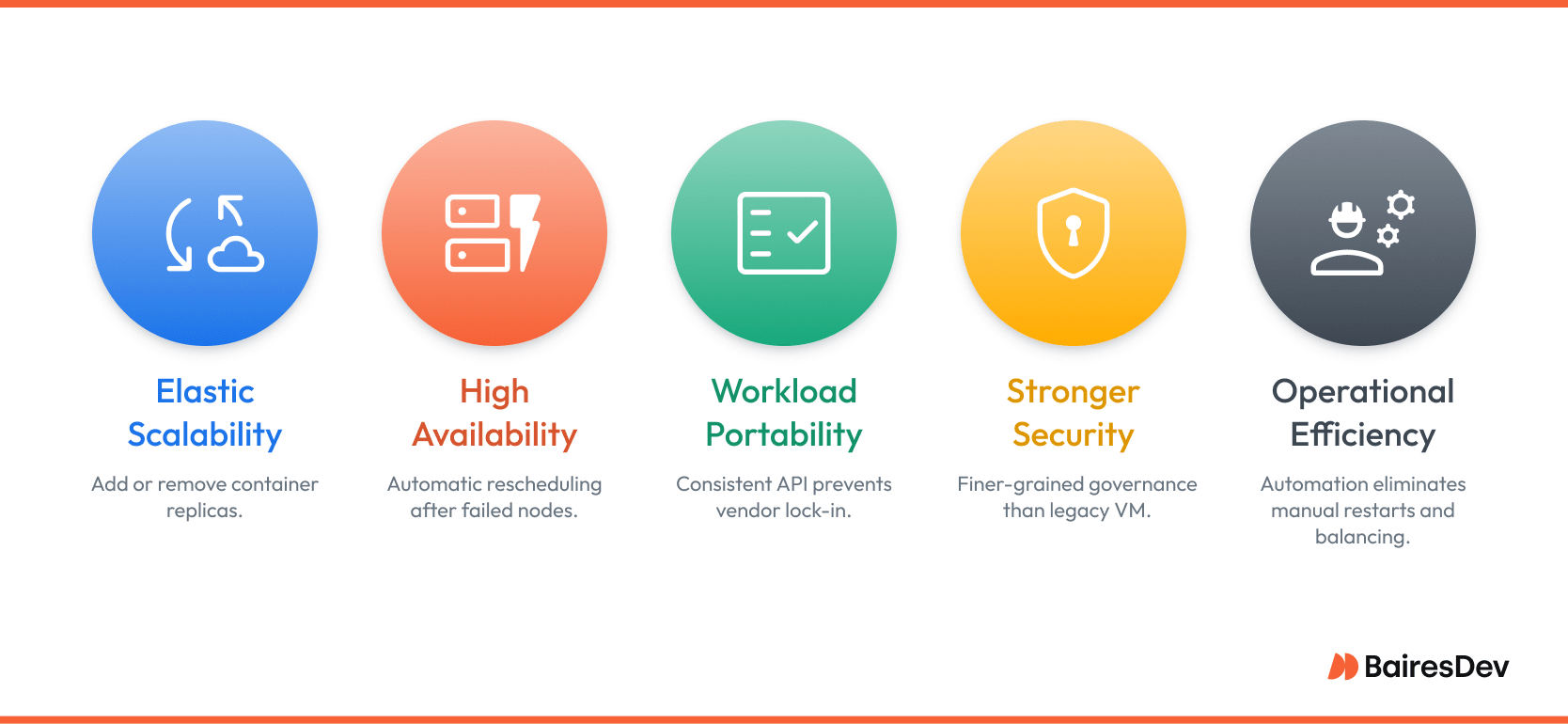

Business-Level Benefits You Can Expect

- Elastic scalability: With autoscaling configured, the platform adds or removes container replicas as demand changes, keeping performance steady during traffic spikes and, combined with node autoscaling, lowering cloud spend when demand falls.

- High availability by design: Crashed containers are restarted and pods from failed nodes are rescheduled automatically, which means fewer customer-visible incidents and faster recovery times.

- Workload portability: Whether you deploy on-prem, in a single cloud, or across several, Kubernetes offers a consistent API and operating model that reduces vendor lock-in.

- Stronger security posture: Role-based access controls, network policies, and secrets management give you finer-grained governance than legacy VM tooling.

- Operational efficiency: Automation eliminates manual restarts, manual load balancing, and late-night patch marathons, freeing senior engineers for higher-value work.
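The elastic-scalability benefit usually comes from the Horizontal Pod Autoscaler, whose documented core formula is `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, with no change while the ratio stays within a tolerance (0.1 by default). This sketch omits stabilization windows and the handling of pods that are not yet ready. The `min_replicas`/`max_replicas` defaults stand in for an HPA's `minReplicas` and `maxReplicas` and are arbitrary.

```python
# Core Horizontal Pod Autoscaler arithmetic (stabilization windows and
# not-yet-ready pods are ignored in this sketch).
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target: avoid flapping
    return max(min_replicas, min(max_replicas, math.ceil(current * ratio)))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4 (within tolerance)
```

The `max_replicas` ceiling doubles as a spending cap: however hard traffic spikes, the autoscaler never requests more than you have budgeted for.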

The Business Case for Kubernetes

Containers are already the default unit of software delivery. The question is how efficiently your organization can run them at enterprise scale. Kubernetes provides the container orchestration power to manage that complexity. It is self-healing, policy-driven, and proven in production. A well-prepared team turns that capability into faster releases, lower downtime, and measurable cost control. With the right expertise and governance in place, Kubernetes transforms infrastructure from a constraint into a competitive advantage.

Frequently Asked Questions

How do we measure the ROI of adopting Kubernetes?

Focus on three metrics: time-to-market (release frequency and lead time), incident minutes per quarter, and total infrastructure cost per user or per transaction. Organizations that adopt Kubernetes well often report shorter lead times and less unplanned downtime within the first year; compare those gains against migration expenses to confirm the payback.
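These metrics are simple to compute once you export deploy records and billing data. The sketch below is hypothetical: the record shapes, function names, and sample numbers are made up for illustration.

```python
# Hypothetical ROI metric helpers; record shapes and sample data are made up.
from datetime import datetime
from statistics import median


def median_lead_time_hours(changes: list[tuple[datetime, datetime]]) -> float:
    """Median hours from commit to production deploy."""
    return median((deployed - committed).total_seconds() / 3600
                  for committed, deployed in changes)


def cost_per_transaction(infra_cost: float, transactions: int) -> float:
    return infra_cost / transactions


def percent_change(before: float, after: float) -> float:
    return (after - before) / before * 100


changes = [
    (datetime(2024, 3, 1, 9), datetime(2024, 3, 1, 15)),  # 6 hours
    (datetime(2024, 3, 2, 9), datetime(2024, 3, 3, 9)),   # 24 hours
    (datetime(2024, 3, 4, 9), datetime(2024, 3, 4, 11)),  # 2 hours
]
print(median_lead_time_hours(changes))  # 6.0
print(percent_change(1200, 900))        # -25.0, e.g. incident minutes per quarter
```

The median is used for lead time so that one unusually slow change does not distort the trend; compute each metric per quarter, before and after migration.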

How do we track resource allocation and prevent cloud costs from spiking?

Implement resource requests and limits, set cluster-wide budget alerts, schedule unused development nodes to shut down automatically, and map spend to teams with Kubernetes cost-allocation tools such as Kubecost or your cloud provider's cost APIs. Some firms report recouping 15 to 25 percent of compute spend once these controls are active.
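The idea behind mapping spend to teams can be sketched as request-based showback: charge each team for the CPU and memory its pods request. The hourly prices below are placeholders, not real cloud rates, and the pod records are hypothetical. Tools such as Kubecost use richer models that can also account for actual usage and shared costs.

```python
# Request-based showback: charge each team for the CPU and memory its pods
# request. Prices are placeholders, not real cloud rates.
from collections import defaultdict

CPU_PER_CORE_HOUR = 0.03   # placeholder price
MEM_PER_GIB_HOUR = 0.004   # placeholder price
HOURS_PER_MONTH = 730      # 8,760 hours / 12 months


def monthly_cost_by_team(pods: list[dict]) -> dict[str, float]:
    totals: dict[str, float] = defaultdict(float)
    for pod in pods:
        hourly = pod["cpu_cores"] * CPU_PER_CORE_HOUR + pod["mem_gib"] * MEM_PER_GIB_HOUR
        totals[pod["team"]] += hourly * HOURS_PER_MONTH
    return {team: round(cost, 2) for team, cost in totals.items()}


pods = [
    {"team": "payments", "cpu_cores": 2, "mem_gib": 4},
    {"team": "payments", "cpu_cores": 1, "mem_gib": 2},
    {"team": "search", "cpu_cores": 0.5, "mem_gib": 8},
]
print(monthly_cost_by_team(pods))
```

Charging on requests rather than usage rewards teams for right-sizing: capacity a pod reserves but never uses still shows up on its team's bill.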

Which workloads should not move to Kubernetes right away?

Ultra-low-latency trading engines, highly specialized hardware jobs (GPUs without mature drivers), and tightly coupled monoliths larger than a single node often stay on dedicated hosts until refactoring or ecosystem maturity catches up.

What staffing model keeps a production cluster healthy without overhiring?

A lean platform team of three to five engineers can operate clusters supporting dozens of product squads, provided you automate builds, policy enforcement, and incident response. Supplement with a site-reliability rotation for peak periods instead of assigning a full SRE headcount per service.

How does Kubernetes enable a hybrid or multicloud strategy?

The core Kubernetes API is the same whether the nodes run on-prem or in AWS, Azure, or GCP; provider-specific pieces such as load balancers, storage classes, and identity integration still differ. You can use a global control plane or federation layer to deploy services across providers, then route traffic with a cloud-agnostic service mesh. This approach minimizes vendor lock-in and supports regional fail-over.

What is the safest migration path from virtual machines to Kubernetes?

Start with stateless front-end or API services, containerize them, and deploy behind a Kubernetes ingress. Gradually peel off stateful back-ends, using operators for databases where possible. Parallel-run old and new environments behind the same CI/CD pipeline to keep rollback options open.