I’ve always been a sci-fi geek. The idea of fully conscious machines, from Asimov’s R. Daneel Olivaw to Star Trek’s Data with their positronic brains, shaped the way I viewed computing and AI. It’s so exciting to live in a world where Artificial Intelligence is growing and those stories are coming to life little by little.

Machine Learning is everywhere, and with the copious amounts of data at our disposal, the models we are building are incredibly rich and complex. Faster communication and greater processing power have fueled an AI revolution.

Recommendation algorithms, forecasting models, and image and voice recognition tools are just a small sample of the technology being developed every single day. In fact, you’d be amazed by how many machine learning tools are influencing your life at this very moment.

Artificial intelligence isn’t infallible. Just like humans, AI systems can make mistakes, and they can even forget. In the case of neural networks, forgetting can be a disaster, akin to a sudden case of amnesia.

What Is a Neural Network?

Neural Networks are a very popular form of machine learning, widely used for making predictions. The name comes from the fact that they loosely emulate the way neuroscientists believe the human brain learns new information.

While the underlying maths is quite involved, the principle is simple. Imagine a series of mathematical equations (the nodes) linked by pathways, much like neurons are interconnected in brain tissue.

Much like how the brain reacts when a phenomenon is perceived by the senses, a neural network is triggered when it’s fed information. Depending on the nature of said information, some pathways activate, while others are inhibited.

The process ends with an output node that provides a new piece of information, such as a prediction. Let’s say that you perceive a four-legged animal: this triggers brain activity as information is processed until you conclude that you are seeing a dog instead of a cat.
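To make the analogy concrete, here is a minimal sketch of a single forward pass through a tiny network with two inputs, two hidden nodes, and one output node. The feature names (snout length, ear pointiness) and every weight are hypothetical, hand-picked for illustration rather than learned. ReLU stands in for a pathway that either activates or is inhibited, and a sigmoid turns the output node’s score into a probability of “dog”.

```python
import math

def relu(z):
    # A hidden node "fires" for positive input and is inhibited (outputs 0) otherwise
    return max(0.0, z)

def sigmoid(z):
    # Squash the output node's score into a probability between 0 and 1
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, w_out):
    # Each hidden node takes a weighted sum of the inputs, then applies ReLU
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    # The output node combines the hidden activations into a single score
    score = sum(w * h for w, h in zip(w_out, hidden))
    return hidden, sigmoid(score)

# Hypothetical, hand-picked weights (a trained network would learn these)
W_HIDDEN = [[1.0, -1.0],   # hidden node 0: responds to "long snout, rounder ears"
            [-1.0, 1.0]]   # hidden node 1: responds to "short snout, pointy ears"
W_OUT = [2.0, -2.0]

def classify(x):
    _, p_dog = forward(x, W_HIDDEN, W_OUT)
    return "dog" if p_dog >= 0.5 else "cat"

# x = [snout_length, ear_pointiness], both scaled to 0..1 (hypothetical features)
hidden, p_dog = forward([0.9, 0.2], W_HIDDEN, W_OUT)
print(hidden, round(p_dog, 2))  # hidden node 1 is inhibited; p_dog is about 0.8
print(classify([0.2, 0.9]))     # cat
```

For each input, one hidden node outputs exactly zero: that is the “inhibited pathway” of the analogy, and the output node only hears from the pathway that activated.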

Just as with humans, the ability to tell a dog apart from a cat has to come from somewhere. We have to train a Neural Network with data so it can learn. This is done during the training phase by feeding it prebuilt, labeled datasets, for example, a series of pictures each tagged “cat” or “dog”.

Afterward, we use a separate set of samples, which the network hasn’t seen before, to test the training. This is called the prediction phase. Think of it like a test a student takes after learning about animals. If the network predicts accurately enough, it’s ready for deployment.
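The training and prediction phases can be sketched with the simplest possible “network”, a single logistic neuron, on synthetic data. Everything here is an assumption for illustration: the two features, the cluster centers, and the 80/20 train/test split are made up, and real image classifiers learn from pixels with far larger networks. The point is the workflow: fit on labeled training examples, then measure accuracy on held-out examples the model never saw.

```python
import math
import random

def make_dataset(n_per_class, rng):
    # Hypothetical features: [snout_length, ear_pointiness], scaled to 0..1
    data = []
    for _ in range(n_per_class):
        data.append(([rng.gauss(0.8, 0.05), rng.gauss(0.2, 0.05)], 1))  # 1 = "dog"
        data.append(([rng.gauss(0.2, 0.05), rng.gauss(0.8, 0.05)], 0))  # 0 = "cat"
    rng.shuffle(data)
    return data

def predict_proba(w, b, x):
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def train(data, lr=0.5, epochs=500):
    # Training phase: full-batch gradient descent on the logistic (cross-entropy) loss
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            err = predict_proba(w, b, x) - y  # derivative of the loss w.r.t. z
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        n = len(data)
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def accuracy(w, b, data):
    # Prediction phase: compare the model's call against the known label
    correct = sum((predict_proba(w, b, x) >= 0.5) == (y == 1) for x, y in data)
    return correct / len(data)

rng = random.Random(0)
data = make_dataset(50, rng)
train_set, test_set = data[:80], data[80:]  # hold out 20 examples for testing
w, b = train(train_set)
print(f"test accuracy: {accuracy(w, b, test_set):.2f}")
```

Keeping the test examples out of training is what makes the score meaningful: a model that merely memorized its training pictures would look perfect on them and still fail on new ones.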

How Neural Networks Forget

Of course, after we learn about cats and dogs, we learn about deer, cows, bulls, and plants, and we develop the skills to tell them apart. That’s what we call “plasticity”: the ability of humans to keep growing and learning.

Neural Networks, on the other hand, are rather limited in this regard. For example, in a famous 1989 study by McCloskey and Cohen, the researchers trained a Neural Network on a series of 17 single-digit addition problems that contained the number one (for example, 1+9 = 10).

After thoroughly testing the results, they trained the network on a series of additional problems, this time involving the number 2. As expected, it learned how to solve the problems with the number 2, but it forgot how to solve the problems that contained the number 1.

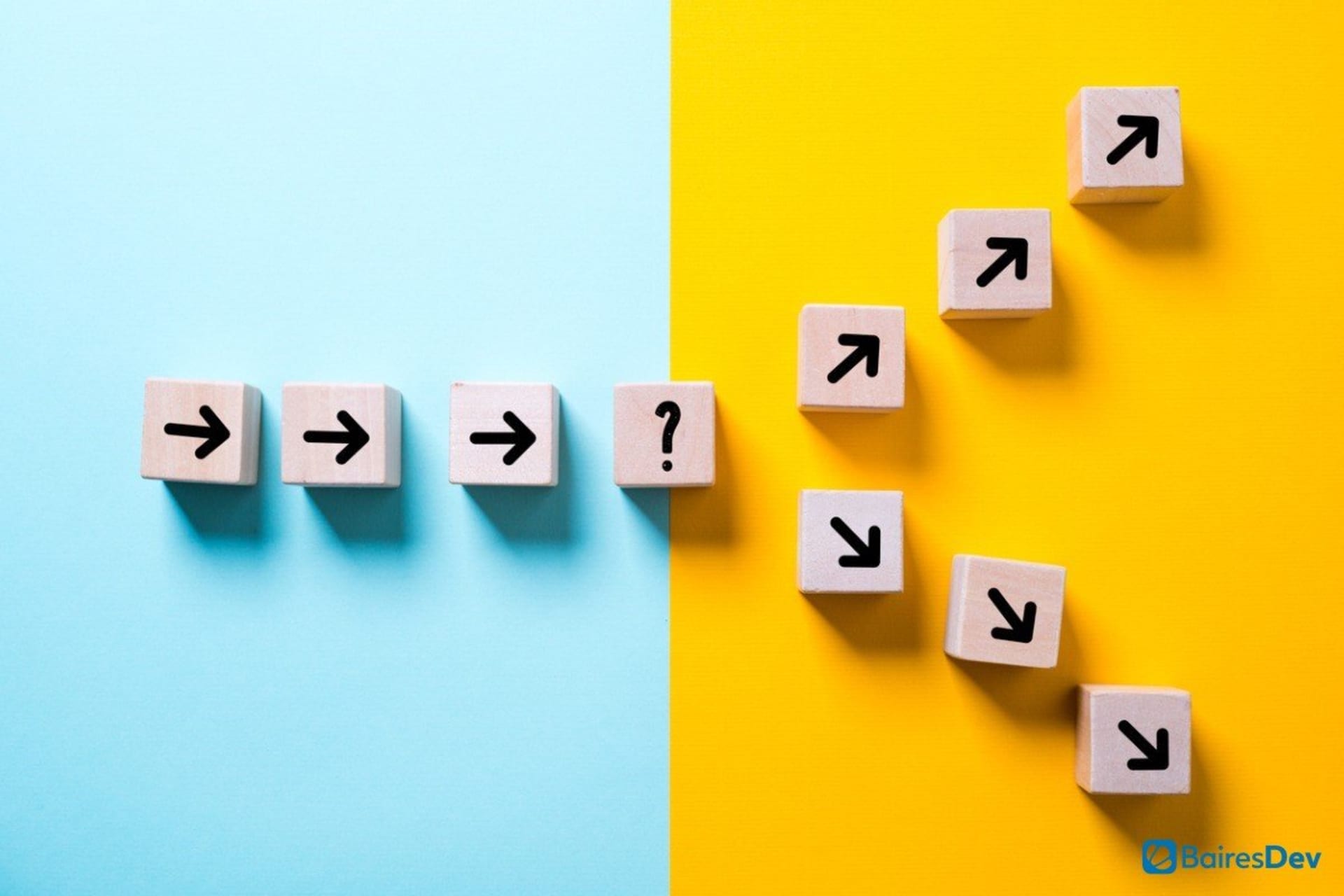
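Where does the number 17 come from? Assuming the operands are the digits 1 through 9, a problem “contains the number one” when either operand is 1, which gives 9 + 9 − 1 = 17 problems (1 + 1 is counted only once). The sketch below only enumerates the problem sets under that assumption; it does not reproduce the study’s network or how it encoded the problems.

```python
def addition_facts(n, lo=1, hi=9):
    """Every problem a + b (lo <= a, b <= hi) in which n appears as an operand."""
    return [(a, b, a + b)
            for a in range(lo, hi + 1)
            for b in range(lo, hi + 1)
            if n in (a, b)]

ones = addition_facts(1)  # e.g. (1, 9, 10) stands for "1 + 9 = 10"
twos = addition_facts(2)

print(len(ones), len(twos))           # 17 17
print(sorted(set(ones) & set(twos)))  # [(1, 2, 3), (2, 1, 3)]
```

Under this reconstruction the two problem sets even share two problems (1 + 2 and 2 + 1), so the second set is far from unrelated to the first.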

What happened? In short, during the training phase the network adjusts the strength of the connections (the weights) between its nodes, effectively building the aforementioned pathways. Those weights are shaped by the data that’s being fed to the network.

When you train it on new information, the same weights are adjusted again. Because they are shared across everything the network knows, the changes can overwrite what it learned for previous tasks. Sometimes the error rate on the old tasks merely increases; other times, the network forgets the task completely. This is what’s called Catastrophic Forgetting or Catastrophic Interference.
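The effect is easy to reproduce in miniature. The sketch below uses a single linear neuron with two weights and two one-example “tasks”; it is a deliberately tiny stand-in, not McCloskey and Cohen’s setup. Task A’s input uses both weights while task B’s input uses only the first, so the first weight is a pathway the two tasks share. Training on B alone, with plain gradient descent on squared error, moves that shared weight wherever B needs it and wrecks task A.

```python
def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def mse(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

def train(w, data, lr=0.1, epochs=500):
    """Full-batch gradient descent on mean squared error, starting from weights w."""
    w = list(w)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(data)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

task_a = [([1.0, 1.0], 1.0)]   # uses both weights
task_b = [([1.0, 0.0], -1.0)]  # uses only the first (shared) weight

w = train([0.0, 0.0], task_a)  # learn task A: w ends up near [0.5, 0.5]
before = mse(w, task_a)
w = train(w, task_b)           # then learn task B on its own: w near [-1.0, 0.5]
after = mse(w, task_a)
print(f"task A error before: {before:.4f}, after: {after:.4f}")  # roughly 0.0, then 2.25
```

Both tasks can in fact be solved at once (w = [-1, 2] fits both exactly), so the forgetting is not caused by the tasks being incompatible: gradient descent on B simply contains no term that protects A.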

Is Catastrophic Forgetting an Issue?

For the most part, no. Most current Neural Networks are trained with guided learning: engineers handpick the data they feed to the network to avoid biases and other issues that could arise from raw data, and they control when and on what the network is retrained.

But as Machine Learning becomes more sophisticated, we are reaching a point where agents can learn continually and autonomously. In other words, neural networks can keep learning from new data as it arrives, without a human supervising each step.

As you might have already figured out, one of the biggest risks of autonomous learning is that we don’t know what kind of data the network is learning from. If it trains on data that’s too far removed from its original training, it could suffer Catastrophic Interference.

So, we just need to avoid autonomous networks, right? Well, not quite. Remember the study we mentioned earlier? The new set of problems wasn’t all that different from the original set, yet it still caused interference.

Even similar sets of data can trigger Catastrophic Interference, and we often can’t tell in advance. The layers between a neural network’s input and output (called hidden layers) are a bit of a black box, so we don’t know whether new data will disrupt a critical pathway and cause a collapse.

Can It Be Avoided?

While the risk of Catastrophic Forgetting may never go away entirely, it’s a fairly manageable problem. From a design perspective, there are dozens of strategies to minimize the risk, such as Node Sharpening or Latent Learning.
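As an illustration of one design-side strategy, here is a minimal sketch of the activation-sharpening step behind Node Sharpening, following French’s 1991 description as we understand it: the k most active hidden nodes are nudged toward 1 and the rest toward 0, making hidden representations overlap less so that new learning disturbs fewer shared nodes. The values of `k` and `alpha` are assumptions, and activations are assumed to lie in [0, 1]. In the full method the sharpened activations also serve as a training target for the input-to-hidden weights; that step is omitted here.

```python
def sharpen(activations, k=1, alpha=0.2):
    """Return sharpened copies of hidden-node activations (assumed to lie in [0, 1]).

    The k most active nodes move a fraction alpha of the way toward 1;
    every other node moves a fraction alpha of the way toward 0.
    """
    ranked = sorted(range(len(activations)), key=lambda i: activations[i], reverse=True)
    winners = set(ranked[:k])
    return [a + alpha * (1.0 - a) if i in winners else a - alpha * a
            for i, a in enumerate(activations)]

print(sharpen([0.2, 0.9, 0.5], k=1, alpha=0.5))  # roughly [0.1, 0.95, 0.25]
```

The sharpened vector is more peaked than the original: the winning node gains activation and the others lose it, so each input ends up relying on fewer shared nodes.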

From a strategic perspective, creating a backup of the network before retraining it is a simple safeguard: if the retrained network starts failing at its old tasks, you can roll back to the saved version.
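In code, the safeguard can be as simple as snapshotting the model before retraining and restoring the snapshot if performance on the old task drops by more than some tolerance. In this sketch `train_fn`, `score_fn`, the dictionary-based toy model, and the 0.05 tolerance are all hypothetical placeholders for whatever training loop and evaluation metric a real project uses.

```python
import copy

def retrain_with_backup(model, new_data, old_data, train_fn, score_fn, max_drop=0.05):
    """Retrain `model` in place; roll back to a snapshot if the old task degrades."""
    backup = copy.deepcopy(model)         # snapshot taken before touching any weights
    baseline = score_fn(model, old_data)  # performance on the old task right now
    train_fn(model, new_data)             # retrain on the new data only
    if score_fn(model, old_data) < baseline - max_drop:
        return backup, False              # old skill collapsed: keep the snapshot
    return model, True

# --- Hypothetical toy model and helpers, for illustration only ---
def score(model, data):
    # Fraction of (x, y) examples the linear model predicts within 0.1
    hits = sum(abs(sum(w * xi for w, xi in zip(model["w"], x)) - y) < 0.1
               for x, y in data)
    return hits / len(data)

def forgetful_train(model, data):
    # Stand-in for a training run that fits the new task but disturbs the old one
    model["w"] = [-1.0, 0.5]

task_a = [([1.0, 1.0], 1.0)]   # old task, already learned
task_b = [([1.0, 0.0], -1.0)]  # new task
model, accepted = retrain_with_backup({"w": [0.5, 0.5]}, task_b, task_a,
                                      forgetful_train, score)
print(model["w"], accepted)    # [0.5, 0.5] False
```

Taking the snapshot with `copy.deepcopy` matters: a plain assignment would only copy the reference, and the “backup” would change along with the model during retraining.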

Another common approach is to train a new neural network on all the data, old and new, at the same time. Catastrophic Forgetting is a problem of sequential learning, where new information disrupts what the network has previously learned; training on everything at once sidesteps it.
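The difference between sequential and joint training shows up even in a toy. The sketch below assumes a single linear neuron trained by plain gradient descent on squared error, with two made-up one-example tasks that share the first weight; the tasks, learning rate, and epoch count are illustrative choices. Learning task A and then task B alone pulls the shared weight toward B and leaves A’s error high, while training on the union of both datasets from scratch finds weights that satisfy both.

```python
def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def mse(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

def train(w, data, lr=0.1, epochs=1000):
    """Full-batch gradient descent on mean squared error, starting from weights w."""
    w = list(w)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(data)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

task_a = [([1.0, 1.0], 1.0)]
task_b = [([1.0, 0.0], -1.0)]

w_seq = train(train([0.0, 0.0], task_a), task_b)  # A first, then B on its own
w_joint = train([0.0, 0.0], task_a + task_b)      # all the data at the same time

print(round(mse(w_seq, task_a), 2), round(mse(w_joint, task_a), 2))  # 2.25 0.0
print([round(wi, 2) for wi in w_joint])                              # [-1.0, 2.0]
```

The trade-off is that joint training needs all the old data to be kept and revisited every time something new is learned, which is exactly what a continually learning network cannot easily do.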

The Long Road Ahead…

Catastrophic Forgetting is but one of the many problems machine learning experts are tackling. While the potential of artificial intelligence is amazing, we are still learning and experimenting. Intelligence, artificial or natural, has never been a simple problem, but we are taking huge strides toward understanding it better.

Machine Learning is a fantastic field, not only for its uses but also because it’s making us question our nature as human beings. Think about it. For Turing, the key test was creating a machine that one couldn’t distinguish from a human.

But to figure out if that’s the case, we first have to answer one simple yet elusive question: what does it mean to be human? Artificial Intelligence is a mirror, a reflection of ourselves. Partnering with software development outsourcing professionals can help navigate these complex challenges more efficiently.
