According to KPMG, about 61% of people aren’t sure they can trust AI systems. Trusting AI means being confident in its decisions and comfortable sharing data to help it improve. But many people worry about three risks: that AI will compromise their privacy, that it will harm society, or that it simply hasn’t been tested enough to be reliable.

So, why is trust such a big deal? Well, if people don’t trust AI, they won’t use it. And if they don’t use it, we miss out on the data and feedback needed to make it better. Plus, trust leads to more investment in research. That’s how we get AI solutions into fields like education and healthcare, where they can make an enormous impact. But without that trust, AI progress stalls, and we lose out on all the ways it could improve our lives.

Why Does Trust in AI Matter, and Why Measure It?

Without trust, even the best AI systems go unused, and all that potential goes to waste. Think about it: AI is projected to contribute $15.7 trillion to the global economy by 2030. That means more jobs, brand-new industries, and a serious boost in productivity. Take finance, for example: AI can flag fraud faster, protect customer accounts, and streamline operations, yet only 9% of finance teams are scaling AI projects right now. Why? Because people hesitate to rely on systems they don’t feel confident in.

Building trust isn’t about flashy demos or tech jargon—it’s about proof. People need quantifiable results (like fraud detection rates in banking or diagnostic accuracy in healthcare) to believe these systems work. Regular assessments help spot risks like biases or inaccuracies and show how AI systems perform. It comes down to demonstrating that the tech is transparent, ethical, and effective.

As your team dives into AI initiatives, it’s key to get a sense of how users feel about trusting AI. Luckily, there are plenty of ways to measure and boost that confidence.

Confidence Scores: A Quick Signal for User Trust

Confidence scores describe how sure an AI system is about its answer. If it says there’s an 85% chance it’s right, the confidence score is 85% (or 0.85). These scores are usually the probabilities the model assigns to each possible answer, based on patterns it learned from its training data. Here’s the thing: when confidence scores match actual accuracy (of all the answers given at 85% confidence, roughly 85% turn out to be correct), people trust the system. But if it keeps overestimating, like saying it’s 95% sure but being right only half the time, users lose trust fast.
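For a typical classifier, the confidence score is simply the probability the model assigns to its top answer, usually produced by applying a softmax to the model’s raw output scores (logits). Here’s a minimal Python sketch of that idea, assuming a two-class fraud model with made-up logits; `predict_with_confidence` is a hypothetical helper, not part of any library.

```python
import math

def softmax(logits: list[float]) -> list[float]:
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict_with_confidence(logits: list[float], labels: list[str]) -> tuple[str, float]:
    """Return the most likely label and its probability, i.e. the confidence score."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

# Hypothetical logits from a fraud classifier for a single transaction.
label, confidence = predict_with_confidence([2.0, 0.3], ["fraud", "legitimate"])
print(f"{label}: {confidence:.2f}")  # prints "fraud: 0.85"
```

Nothing in this calculation checks whether 0.85 is *honest*. That’s what calibration is for.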

How Confidence Calibration Works

To fix that mismatch, teams use confidence calibration, which adjusts the scores so they actually mean something. Here’s how it goes:

- Test the model: Run it on tasks where we already know the right answers.

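- Compare scores and accuracy: Check whether the confidence numbers line up with how often the AI is actually right. For example, answers given at 80% confidence should be correct about 80% of the time.

- Tweak the system: Use techniques like Platt scaling, which fits a simple logistic function that maps the model’s raw scores to probabilities matching its observed accuracy.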
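Here’s a rough Python sketch of the three steps above. It’s a minimal illustration that assumes each answer is labeled simply right or wrong and uses made-up validation data. `expected_calibration_error` (ECE, a common calibration summary) and `fit_platt` are hypothetical helper names. In practice, you’d more likely use a library implementation such as scikit-learn’s `CalibratedClassifierCV(method="sigmoid")`.

```python
import math

def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def logit(p: float) -> float:
    return math.log(p / (1.0 - p))

def expected_calibration_error(confidences, correct, n_bins=10):
    """Step 2: weighted average gap between confidence and accuracy, per confidence bin."""
    n = len(confidences)
    ece = 0.0
    for b in range(n_bins):
        lo, hi = b / n_bins, (b + 1) / n_bins
        # Each prediction lands in exactly one bin; a confidence of 1.0 goes in the top bin.
        idx = [i for i, c in enumerate(confidences)
               if lo <= c < hi or (b == n_bins - 1 and c == 1.0)]
        if not idx:
            continue
        avg_conf = sum(confidences[i] for i in idx) / len(idx)
        accuracy = sum(correct[i] for i in idx) / len(idx)
        ece += len(idx) / n * abs(avg_conf - accuracy)
    return ece

def fit_platt(scores, labels, lr=0.1, steps=5000):
    """Step 3: Platt scaling. Fit p = sigmoid(a * score + b) by gradient descent on log loss."""
    a, b = 1.0, 0.0
    n = len(scores)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            err = sigmoid(a * s + b) - y  # derivative of log loss w.r.t. the logit
            grad_a += err * s
            grad_b += err
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b

# Step 1: hypothetical validation set where the right answers are known.
# 20 answers at 95% confidence (only 10 correct), 10 answers at 60% confidence (4 correct).
confidences = [0.95] * 20 + [0.60] * 10
correct = [1] * 10 + [0] * 10 + [1] * 4 + [0] * 6

print(f"ECE before: {expected_calibration_error(confidences, correct):.3f}")  # 0.367

a, b = fit_platt([logit(c) for c in confidences], correct)
calibrated = [sigmoid(a * logit(c) + b) for c in confidences]
print(f"ECE after:  {expected_calibration_error(calibrated, correct):.3f}")
```

After fitting, the “95% sure” answers come out near 0.50 and the “60% sure” ones near 0.40, which matches how often each group was actually right. Fit the scaler on held-out data, not the training set, so the adjustment reflects how the model performs on data it hasn’t seen.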

Confidence Scores Matter: A GPT-4 Case Study in Healthcare

In a recent study, researchers tested GPT-4’s ability to answer medical questions. Overall, it performed well, but it was sometimes overconfident, assigning high confidence to answers that turned out to be wrong. With feedback, it reported 0.9 confidence and reached 88% accuracy; without feedback, confidence rose to 0.95 with 92% accuracy. In both cases, stated confidence ran ahead of actual accuracy, by 2 and 3 percentage points respectively. The big takeaway? Impressive as that is, we need to be careful. In critical fields like healthcare, confidence scores need to align with reality so people know when they can actually trust and rely on the system.

Error Rate Analysis: Identifying and Addressing AI Failures

Error rates in AI show how often the system “messes up” and what kinds of mistakes it makes. This helps set user expectations and shows when we can trust the system. Here’s a breakdown of the key metrics:

- False Positive Rate: The share of actual negatives that the AI wrongly flags as positive. In healthcare, this could mean diagnosing a patient with a disease they don’t have. It creates unnecessary panic and harms trust.

- False Negative Rate: The share of actual positives that the AI misses, like a security system failing to identify a cyberattack. Misses like this can have serious consequences and further erode trust.

- Accuracy: The share of all predictions that are correct, which gives a view of the system’s overall performance. Higher accuracy generally means better reliability and builds trust. On imbalanced data, though, accuracy can look high even when the model misses many positives. Fraud is a good example, since most transactions are legitimate.

- Precision and Recall: Precision is the share of positive predictions that are actually correct, while recall is the share of actual positives the AI catches. Both are key for balancing relevance and coverage.
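All of these metrics come from the same four counts: true positives, false positives, true negatives, and false negatives. Here’s a minimal Python sketch that computes them from those counts. The screening numbers are hypothetical, chosen only to illustrate the definitions above. The `Confusion` class is also hypothetical; scikit-learn’s `sklearn.metrics` module offers standard equivalents.

```python
from dataclasses import dataclass

def _ratio(num: int, den: int) -> float:
    # Return 0.0 instead of raising when a class never occurs in the sample.
    return num / den if den else 0.0

@dataclass
class Confusion:
    tp: int  # true positives: flagged and actually positive
    fp: int  # false positives: flagged but actually negative
    tn: int  # true negatives: not flagged and actually negative
    fn: int  # false negatives: not flagged but actually positive

    def false_positive_rate(self) -> float:
        return _ratio(self.fp, self.fp + self.tn)  # share of negatives wrongly flagged

    def false_negative_rate(self) -> float:
        return _ratio(self.fn, self.fn + self.tp)  # share of positives missed

    def accuracy(self) -> float:
        return _ratio(self.tp + self.tn, self.tp + self.fp + self.tn + self.fn)

    def precision(self) -> float:
        return _ratio(self.tp, self.tp + self.fp)  # how many flags were right

    def recall(self) -> float:
        return _ratio(self.tp, self.tp + self.fn)  # how many positives were caught

# Hypothetical screening of 1,000 patients, 50 of whom actually have the disease.
c = Confusion(tp=40, fp=20, tn=930, fn=10)
for metric in ("false_positive_rate", "false_negative_rate", "accuracy", "precision", "recall"):
    print(f"{metric}: {getattr(c, metric)():.3f}")
# false_positive_rate: 0.021
# false_negative_rate: 0.200
# accuracy: 0.970
# precision: 0.667
# recall: 0.800
```

Notice that accuracy is 97% even though the model misses one in five sick patients. That’s why it pays to track the individual error rates, not just the headline number.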

How Error Rate Analysis Works in Real Life

Take something like a streaming platform. Its recommendation system measures the following:

- Precision: It checks how often recommended shows are actually watched by users. If the AI recommends something and the user enjoys it, that’s a win.

- Recall: It checks how many of the shows a user would actually enjoy end up among the recommendations. High recall means the system isn’t missing much of what the user would like.

If the platform recommends a horror movie to someone who hates horror, that’s a false positive. If it misses a trending show the user would love, that’s a false negative. Regularly tracking these metrics and addressing high error rates (through better training data and algorithm tuning) keeps users happy and builds trust.
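Recommendation systems usually measure these metrics over the top *k* items shown to a user (precision@k and recall@k). Here’s a minimal Python sketch for a single user, assuming we know afterward which shows that user actually watched and enjoyed. The show names and the `evaluate_recommendations` helper are hypothetical.

```python
def evaluate_recommendations(recommended: list, relevant: set, k: int) -> dict:
    """Precision@k and recall@k for one user, plus the mistakes behind them.
    relevant = shows this user actually watched and enjoyed (known after the fact)."""
    top_k = recommended[:k]
    hits = [show for show in top_k if show in relevant]
    return {
        "precision@k": len(hits) / len(top_k) if top_k else 0.0,     # share of picks the user liked
        "recall@k": len(hits) / len(relevant) if relevant else 0.0,  # share of liked shows surfaced
        "false_positives": [s for s in top_k if s not in relevant],  # e.g. horror for a horror-hater
        "false_negatives": sorted(relevant - set(top_k)),            # liked shows never suggested
    }

recommended = ["space drama", "horror film", "cooking show", "true crime doc", "sitcom"]
relevant = {"space drama", "cooking show", "trending thriller"}
print(evaluate_recommendations(recommended, relevant, k=5))
```

Here, precision@5 is 0.4 (2 of 5 picks landed) and recall@5 is about 0.67 (2 of the 3 shows the user liked were suggested). The missed “trending thriller” is the false negative. Platforms typically average these numbers across many users before acting on them.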

Explainability Metrics: Making AI Decisions Understandable

Explainability metrics show us how AI systems arrive at their decisions. They answer questions like, “Why did the AI make this choice?” Explainability tools help uncover errors or biases in the logic and give users a clearer understanding of why they got a specific result. When people know how AI works, they’re way more likely to trust and use it.

Tools for Explainability Metrics

Here are some of the most common tools:

- SHAP (Shapley Additive Explanations): SHAP breaks down how much each input contributed to the AI’s decision, using Shapley values from game theory. Say someone’s loan application is denied: SHAP might show that “low income” was the biggest factor. It’s great for understanding what tipped the scales.

- LIME (Local Interpretable Model-Agnostic Explanations): LIME explains a single prediction by making small changes to the input, watching how the model’s output shifts, and fitting a simple, interpretable model (such as a linear model) to those local results. For example, if an AI recommends a product in an e-commerce store, LIME might show that the shopper’s recent purchases in the same category weighed most heavily. It makes those recommendations feel less random.

- Counterfactual Explanations: This one’s very useful—it shows what would’ve changed the outcome. If someone’s credit card application is denied, a counterfactual might say, “If your income were $3,000 higher, you’d be approved.” It’s actionable and easy to understand.
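For a model with only a few inputs, we can compute Shapley values exactly and search for a counterfactual by brute force. Here’s a minimal Python sketch of both ideas. The loan model, its weights, the approval threshold, and the “average applicant” baseline are all hypothetical. Libraries such as `shap` estimate these values efficiently for real models, so this is only meant to show what the numbers mean.

```python
from itertools import combinations
from math import factorial

APPROVAL_THRESHOLD = 0.3  # hypothetical cut-off: approve if score >= 0.3

def loan_score(x: dict) -> float:
    """Hypothetical linear credit model (illustration only); higher is better."""
    return 0.02 * x["income_k"] - 3.0 * x["debt_ratio"] + 0.1 * x["years_of_credit"]

def shapley_values(model, x: dict, baseline: dict) -> dict:
    """Exact Shapley values: each feature's weighted average marginal contribution
    over every coalition of the other features. Features outside a coalition
    take their baseline value. Cost grows as 2^n, so this only suits tiny models."""
    names = list(x)
    n = len(names)
    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without_f = {g: x[g] if g in coalition else baseline[g] for g in names}
                with_f = {**without_f, f: x[f]}
                total += weight * (model(with_f) - model(without_f))
        phi[f] = total
    return phi

def income_counterfactual(x: dict, step_k: int = 1, max_steps: int = 200):
    """Smallest income increase (in $1k steps) that turns a denial into an approval,
    holding every other input fixed. Returns None if no increase in range works."""
    for i in range(1, max_steps + 1):
        candidate = dict(x, income_k=x["income_k"] + i * step_k)
        if loan_score(candidate) >= APPROVAL_THRESHOLD:
            return i * step_k
    return None

applicant = {"income_k": 35, "debt_ratio": 0.35, "years_of_credit": 5}
average_applicant = {"income_k": 60, "debt_ratio": 0.30, "years_of_credit": 8}

phi = shapley_values(loan_score, applicant, average_applicant)
for name, value in sorted(phi.items(), key=lambda kv: kv[1]):
    print(f"{name}: {value:+.2f}")  # most negative (biggest push toward denial) first
print(f"Approved if income were ${income_counterfactual(applicant)},000 higher")
```

Income gets the largest negative contribution, and the contributions add up exactly to the gap between this applicant’s score and the average applicant’s. That additivity is what the “Additive” in SHAP refers to. The counterfactual search reports an $8,000 income increase. Real counterfactual tools also make sure the suggested changes are realistic and actionable.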

These tools make AI decisions more transparent, which is a big win for trust and usability.

Trustworthiness Assessment Frameworks: Building Comprehensive Trust Models

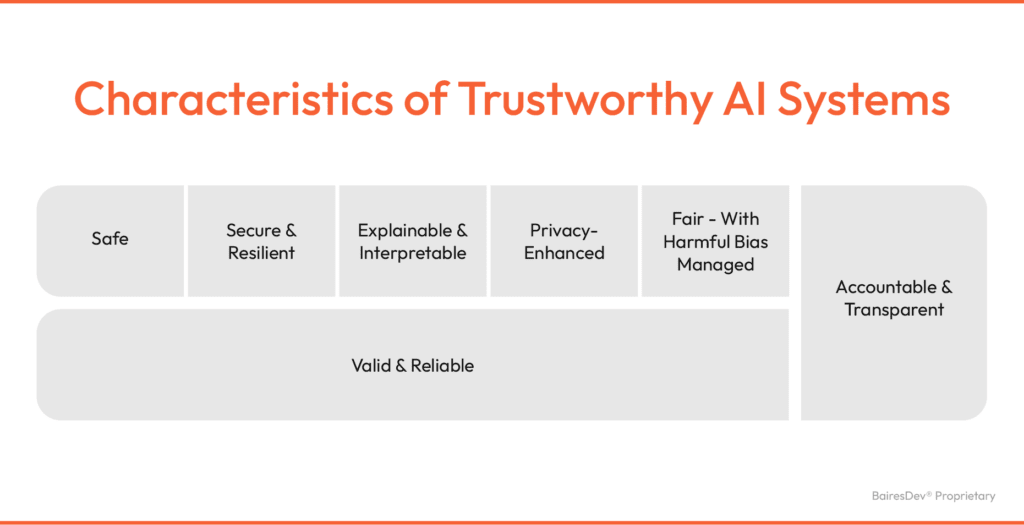

Trustworthiness assessment frameworks are guidelines for checking whether an AI system is reliable, ethical, and fair. They work like a checklist covering factors such as transparency, privacy, and bias, helping make sure the system behaves responsibly and meets user expectations. Here are a few frameworks to consider:

- European Commission Ethics Guidelines for Trustworthy AI: This framework is all about making AI lawful, ethical, and robust. It covers both the technical side, like testing and validation, and the non-technical side, like regulations and certifications, to make sure the system is trustworthy.

- NIST AI Risk Management Framework: NIST’s framework helps teams identify AI risks, such as privacy harms and environmental impacts, and is organized around four functions: Govern, Map, Measure, and Manage. It lays out steps to reduce these risks and defines responsibilities for teams and users, supporting transparency and sound data handling.

- Microsoft Responsible AI Framework: Microsoft’s approach digs into the unique risks AI brings. It focuses on accuracy, reducing stereotyping and bias in data, and making the system inclusive and safe to use.

Why Do These Frameworks Matter?

These frameworks point out biases, make AI decisions easier to understand, and keep sensitive data safe. All of this boosts user confidence and encourages people to actually use the tech.

How to Implement Trust Measurements and Frameworks

If you’re building an AI system for your company, making it reliable for users is a big deal. Here’s how you can make it happen:

- Define Clear Goals: Set measurable targets, such as having the system reach 90% accuracy in loan decisions and keeping the false negative rate (for example, rejecting qualified applicants) below 5%. Hitting specific goals gives users confidence that the AI’s decisions aren’t random.

- Track Error Rates: Use automated reports to gather data on false positives and false negatives. For example, make sure the AI isn’t approving unqualified applicants (false positives) or rejecting good ones (false negatives). If the rates are off, retrain the system with better data.

- Use Frameworks as a Guide: Go with a framework like NIST’s, or take some cues from the EU guidelines to keep things fair and secure. The EU guidelines (published in 2019) may feel a bit dated, and Microsoft’s framework was written for its own products, but both have solid ideas for managing risks.

- Get Feedback: Ask users what they think—surveys, focus groups, or app analytics can help you find gaps. Use their input to tweak the model and make it better.
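The first two steps lend themselves to a small automated report that compares measured error rates against your goals. Here’s a minimal Python sketch. It assumes “approved” is the positive class and that you have a labeled sample of past decisions, for example from manual review. `TrustGoals` and `check_goals` are hypothetical names, and the 90% / 5% thresholds are the example goals from the list above.

```python
from dataclasses import dataclass

@dataclass
class TrustGoals:
    min_accuracy: float = 0.90  # example goal: at least 90% accuracy
    fnr_limit: float = 0.05     # example goal: false negative rate below 5%

def check_goals(decisions, goals: TrustGoals) -> dict:
    """decisions: (approved, qualified) pairs from a labeled review sample.
    'Approved' is treated as the positive class."""
    if not decisions:
        raise ValueError("need at least one labeled decision")
    tp = sum(1 for approved, qualified in decisions if approved and qualified)
    fp = sum(1 for approved, qualified in decisions if approved and not qualified)
    fn = sum(1 for approved, qualified in decisions if not approved and qualified)
    tn = len(decisions) - tp - fp - fn
    accuracy = (tp + tn) / len(decisions)
    fpr = fp / (fp + tn) if fp + tn else 0.0  # unqualified applicants approved
    fnr = fn / (fn + tp) if fn + tp else 0.0  # qualified applicants rejected
    alerts = []
    if accuracy < goals.min_accuracy:
        alerts.append(f"accuracy {accuracy:.1%} is below the {goals.min_accuracy:.0%} goal")
    if fnr >= goals.fnr_limit:
        alerts.append(f"false negative rate {fnr:.1%} is not below {goals.fnr_limit:.0%}")
    return {"accuracy": accuracy, "false_positive_rate": fpr,
            "false_negative_rate": fnr, "alerts": alerts}

# Hypothetical review sample of 100 loan decisions.
sample = ([(True, True)] * 54 + [(False, True)] * 6
          + [(True, False)] * 3 + [(False, False)] * 37)
report = check_goals(sample, TrustGoals())
for alert in report["alerts"]:
    print("Retrain or investigate:", alert)
# Retrain or investigate: false negative rate 10.0% is not below 5%
```

In this sample, the model clears the accuracy goal (91%) but still rejects 10% of qualified applicants. A single headline metric would hide that gap, so it helps to check each goal separately. Running a check like this on a schedule turns “track error rates” into a concrete trigger for retraining.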

Who’s on the Team?

Building trust in AI takes a team effort. These are the main players involved, from the technical side to compliance:

- AI/ML Engineers: Set up confidence scores and error tracking.

- Data Scientists: Refine the model with better data.

- Compliance Officers: Make sure everything’s ethical and legal.

- UX/UI Designers: Make trust metrics easy to understand for users.

- Legal Teams: Handle privacy and security compliance.

- Product Managers: Keep the AI aligned with company goals and user needs.

If you’re missing any of these roles or your team doesn’t quite have the expertise to handle some of these challenges yet, we can help. Our staff augmentation services can bring in the experts you need to round out your team and build an AI solution you can trust.

Conclusion: Building the Future of Trustworthy AI

Building trust in AI isn’t just about the tech—it’s about making systems people actually feel good using. To get there, you need to nail a few things: set clear trust metrics, keep tabs on error rates, use tools like SHAP to explain decisions, and follow ethical frameworks to keep it fair. And don’t forget, AI isn’t set-it-and-forget-it. You’ll have to recalibrate regularly to avoid overconfidence or skepticism.

If you’re a CTO, CIO, or tech lead, now’s the time to act. Put these trust-building strategies in place, and not only will adoption rates climb, but you’ll also show that your company knows how to do AI the right way. We can help you build custom AI systems that people trust and that deliver real-world results.