Prompt engineering is a field that’s gaining traction fast, but what exactly is it? In its simplest form, prompt engineering involves designing and optimizing prompts (the instructions and inputs we give a model) to get useful, reliable output from artificial intelligence models, especially large language models.

Now, imagine you’re trying to teach your pet a new trick. You wouldn’t just throw them into the deep end without any guidance. You’d give them clear instructions or “prompts” to help them understand what they need to do. The same principle applies to AI models.

The complexity arises when these AI models don’t understand context or nuance in the same way we do. Therefore, creating effective prompts requires a solid understanding of both machine learning principles and human language constructs. It’s like being bilingual, but instead of speaking French and English, you’re fluent in human and AI.

Let’s use an example to clarify. Suppose we want our AI model to generate jokes about cats. A poorly designed prompt might be “Tell a joke.” This could result in anything from knock-knock jokes to dark humor—it’s too vague! A better prompt might be “Generate a light-hearted joke involving cats.” This provides more specific parameters for the AI model to work with.

You might assume it’s easy to get good at prompting, but doing it well takes real skill. Prompt engineers often draw on knowledge of software development, AI, and machine learning. As demand for these technologies grows, prompt engineering has emerged as a well-paid role, with some positions advertised at six-figure salaries.

The Evolution of Prompt Engineering: A Historical Overview

As we matured in our understanding of AI, we realized that the “garbage in, garbage out” principle applied here too. The quality of input (prompts) directly influenced the quality of output. We’ve gone from simple rule-based systems to complex machine learning models that can generate humanlike text. It’s not just about creating better prompts. It’s also about optimizing them for efficiency and effectiveness.

Take, for example, OpenAI’s GPT-4. This large language model can generate coherent, contextually relevant text from a given prompt. However, even with a massive number of parameters, it still needs carefully crafted prompts to perform well.

If you give GPT-4 (or any newer model) a vague prompt like “Write an essay,” it might churn out an eloquent piece on quantum physics or existential philosophy: interesting topics indeed, but probably not what you had in mind!

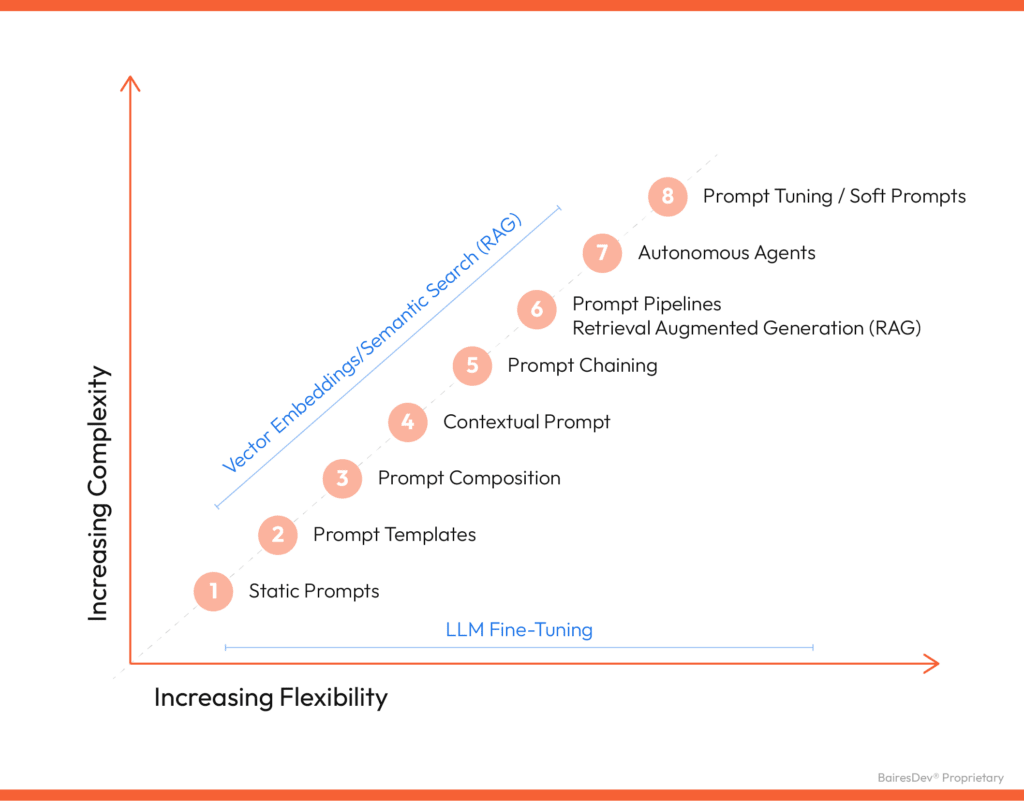

The figure above gives a broad overview of the stages of prompt engineering, ordered by complexity and flexibility. As prompts become more sophisticated and targeted, engineers in this field are expected to understand and work across this spectrum, from static prompts (simple, fixed instructions) to soft prompts (learned embeddings tuned for a specific task rather than written as text) and beyond.

Relevance of Prompt Engineering in Today’s Tech-Driven World

As AI continues to spread into every sector, from healthcare to finance and beyond, demand for well-designed prompts is growing quickly. Take customer service chatbots. They’re designed to handle a wide range of customer queries efficiently. Without well-engineered prompts, they can quickly turn from helpful assistants into sources of frustration.

Imagine that a customer asks about their order status. A poorly crafted prompt might result in the bot responding with irrelevant information like promotional offers or product details. An optimized prompt ensures that the bot understands the query correctly and provides accurate order status details.
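To make this concrete, here is a minimal Python sketch of how such an optimized prompt might be assembled. It assumes a chat-style model that accepts a list of role/content messages, as many chat APIs do; the function name `build_order_status_messages`, the order fields, and the instruction wording are all hypothetical, and no real model is called.

```python
from typing import Dict, List

# Hypothetical instruction text; the exact wording is an illustrative assumption.
SYSTEM_INSTRUCTION = (
    "You are a customer-service assistant for an online store. "
    "When the customer asks about an order, answer ONLY with that order's status, "
    "using the order record provided. Do not mention promotions or other products. "
    "If the order record is missing, ask the customer for their order number."
)


def build_order_status_messages(question: str, order: Dict[str, str]) -> List[Dict[str, str]]:
    """Assemble chat-style messages for a hypothetical order-status bot."""
    # Render the order record as plain "key: value" lines for the model to read.
    record = "\n".join(f"{key}: {value}" for key, value in order.items())
    return [
        {"role": "system", "content": SYSTEM_INSTRUCTION},
        {"role": "user", "content": f"Order record:\n{record}\n\nCustomer question: {question}"},
    ]


if __name__ == "__main__":
    messages = build_order_status_messages(
        "Where is my package?",
        {"order_id": "A1001", "status": "shipped", "estimated_delivery": "2 business days"},
    )
    for message in messages:
        print(f"[{message['role']}]\n{message['content']}\n")
```

The idea is to tell the bot both what to do (report the status from the record) and what not to do (no promotions), which targets exactly the failure described above.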

Prompt engineering is crucial in an AI-powered world, and its importance is likely to keep growing.

Educational Requirements to Become a Prompt Engineer

This field is multidisciplinary. Although there are a few courses and certifications available to prepare someone for this specific career path, there are several relevant fields of study that can provide a solid foundation too. We’re talking about computer science, data science, linguistics, and even psychology.

Crafting effective prompts requires not only an understanding of AI algorithms and programming languages but also an appreciation for human language patterns and cognitive processes (cue linguistics and psychology). Data science skills are equally important, allowing us to analyze user responses and refine our prompts accordingly.

Let’s imagine we’re designing prompts for a medical chatbot. A background in computer science would let us build the bot, while knowledge of medical terminology and of how people use language (linguistics) would help our bot communicate accurately with users. Psychology would help us understand how patients typically express their symptoms or concerns, allowing us to tailor our prompts for maximum clarity and empathy. Lastly, data science skills would allow us to evaluate the bot’s performance over time and make necessary adjustments.

Key Skills Necessary for Success in Prompt Engineering

- Programming languages: Proficiency in languages like Python or Java is essential. These are the tools of the trade, used to build, test, and fine-tune AI systems.

- Data analysis: This involves understanding statistical methods and machine learning algorithms, which helps extract meaningful insights from user responses.

- Linguistic competence: This skill helps ensure that prompts resonate with users on a deeper level. It’s about understanding language nuances, idiosyncrasies, and cultural contexts.

- Emotional intelligence: This helps in writing prompts that elicit accurate responses while showing empathy and respect for the user’s context.

- Creativity: While prompt engineering may seem like a highly technical field, it also requires out-of-the-box thinking to devise innovative solutions.

These are not boxes to be checked off but areas for continuous growth and improvement. So keep honing those skills and let’s shape the future of AI together!

Tools of the Trade: Essential Technologies in Prompt Engineering

At the heart of the digital toolbox are programming languages. Python and Java are common choices for developing AI systems because they provide the flexibility and power needed to build complex applications.

Machine learning frameworks such as TensorFlow or PyTorch help us train our AI models efficiently. Databases, whether relational (SQL) databases or document databases like MongoDB, are also essential, since this is where we store and retrieve data. Natural language processing (NLP) libraries such as NLTK or spaCy act as our language experts: they help us analyze and process human language in all its glorious complexity.

To illustrate how these technologies work together, let’s revisit our medical chatbot example from earlier. We’d use Python or Java to build the basic structure of the system. TensorFlow or PyTorch would then come into play for training our AI model on relevant data stored in a SQL or MongoDB database. Finally, NLTK or spaCy would process users’ messages (for example, by tokenizing text and recognizing named entities) so the bot can communicate effectively.

A Brief Guide to Prompt Engineering

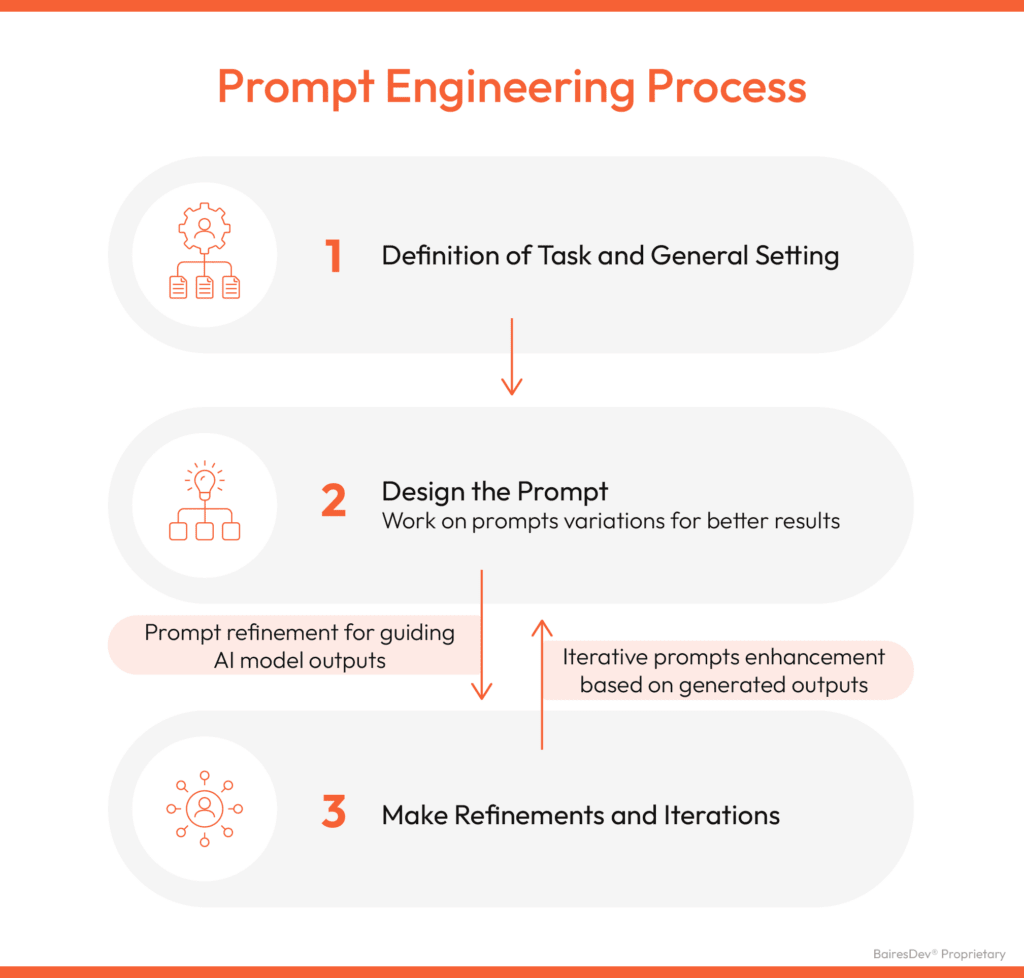

When designing prompts, we need to consider several key elements: context (the relevant background info), task specification (what exactly we want the model to do), and constraints (any limitations or rules). For instance, if we’re asking for a summary of a text, our prompt might look something like this: “Summarize the following passage in 3-4 sentences.” Clear as crystal, right?
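Those three elements map naturally onto a small template. The Python sketch below is one hypothetical way to assemble them; the section labels, their order, and the function name `build_prompt` are assumptions for illustration, not a standard format.

```python
from typing import List, Optional


def build_prompt(task: str, constraints: Optional[List[str]] = None,
                 context: Optional[str] = None) -> str:
    """Assemble a prompt from a task specification, optional constraints, and optional context.

    The section labels and their order are one hypothetical layout, not a standard.
    """
    sections = [f"Task:\n{task}"]
    if constraints:
        # One rule per bullet so each constraint stands out.
        rules = "\n".join(f"- {rule}" for rule in constraints)
        sections.append(f"Constraints:\n{rules}")
    if context:
        sections.append(f"Context:\n{context}")
    # Blank lines between sections keep the structure easy to read.
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(build_prompt(
        task="Summarize the passage given under Context.",
        constraints=["Use 3-4 sentences.", "Do not add facts that are not in the passage."],
        context="Prompt engineering is the practice of designing and refining the inputs given to AI models.",
    ))
```

Keeping each element in its own labeled section makes it easy to change one (say, the sentence limit) without rewriting the rest of the prompt.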

But don’t be fooled into thinking that creating effective prompts is all about brevity and precision. We also need to be contextually aware and ready to test and iterate on our designs based on the model’s responses. It’s not unlike training a new employee: you provide clear instructions, observe their performance, and then refine your approach based on the results.
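The test-and-iterate loop can be sketched in Python too. This is a minimal illustration under stated assumptions: `fake_model` is a hypothetical stand-in for a real model call, and the check only verifies the 3-4 sentence constraint using a rough sentence counter.

```python
import re
from typing import Callable, List, Optional


def count_sentences(text: str) -> int:
    """Rough sentence count: split on runs of '.', '!' or '?' followed by whitespace or the end."""
    pieces = re.split(r"[.!?]+(?:\s+|$)", text.strip())
    return len([piece for piece in pieces if piece])


def first_passing_prompt(prompts: List[str], model: Callable[[str], str],
                         check: Callable[[str], bool]) -> Optional[str]:
    """Try prompt variants in order and return the first whose response passes `check`."""
    for prompt in prompts:
        if check(model(prompt)):
            return prompt
    return None  # nothing passed: revise the prompts and try again


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: honors only an explicit length request."""
    if "3-4 sentences" in prompt:
        return "Point one. Point two. Point three."
    return "One long sentence that ignores any length requirement."


if __name__ == "__main__":
    candidates = [
        "Summarize the following passage.",
        "Summarize the following passage in 3-4 sentences.",
    ]
    chosen = first_passing_prompt(candidates, fake_model,
                                  lambda response: 3 <= count_sentences(response) <= 4)
    print(chosen)  # → Summarize the following passage in 3-4 sentences.
```

Real model output can vary from run to run, so in practice you would likely run each candidate several times and look at how often it passes rather than trusting a single response.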

Let’s now look at some practical applications of prompt engineering in NLP tasks:

- Information Extraction: With well-crafted prompts like “Extract the names of all characters mentioned in the text,” we can efficiently extract specific information from given texts.

- Text Summarization: By guiding models with prompts such as “Summarize the following passage in 3-4 sentences,” we can obtain concise summaries that capture the essential information.

- Question Answering: By framing a prompt like “Answer the following question: [question],” we can generate relevant and accurate responses.

- Code Generation: By providing a clear task specification and relevant context, we can guide models to generate code snippets or programming solutions.

- Text Classification: With specific instructions and context, we can guide models to perform text classification tasks such as sentiment analysis or topic categorization.
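In practice, prompts like these are often kept as reusable templates with placeholders. The Python sketch below shows one hypothetical set of templates for the five tasks above; the dictionary keys, the placeholder names, and the extra wording (such as “Reply with one word.”) are illustrative assumptions.

```python
# Hypothetical prompt templates for the tasks listed above. The placeholder
# names ({text}, {question}, {language}, {spec}) are illustrative assumptions.
TEMPLATES = {
    "information_extraction": (
        "Extract the names of all characters mentioned in the text.\n\nText:\n{text}"
    ),
    "summarization": "Summarize the following passage in 3-4 sentences.\n\nPassage:\n{text}",
    "question_answering": "Answer the following question: {question}",
    "code_generation": "Write a {language} function that {spec}. Return only the code.",
    "text_classification": (
        "Classify the sentiment of the following review as positive, negative, or neutral. "
        "Reply with one word.\n\nReview:\n{text}"
    ),
}


def render(task: str, **fields: str) -> str:
    """Fill in the template for `task`.

    Raises KeyError if the task is unknown or a placeholder value is missing.
    """
    return TEMPLATES[task].format(**fields)


if __name__ == "__main__":
    print(render("question_answering", question="Who wrote Hamlet?"))
    # → Answer the following question: Who wrote Hamlet?
    print(render("code_generation", language="Python", spec="reverses a string"))
```

Failing loudly on a missing placeholder is a deliberate choice here: a prompt sent with an unfilled slot is an easy bug to miss otherwise.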

Now let’s kick things up a notch with some advanced techniques:

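- N-Shot Prompting: Here, we include N worked examples of the task in the prompt itself (zero-shot with none, one-shot with one, few-shot with several), so the model can pick up the task without any additional training or labeled dataset.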

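- Chain-of-Thought (CoT) Prompting: Here, we prompt the model to work through a complex task as a series of intermediate reasoning steps before giving its final answer, either by showing worked examples that include the reasoning or by adding an instruction such as “Let’s think step by step.”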

- Generated Knowledge Prompting: We first ask the model to generate relevant facts or background knowledge about the question, then feed that knowledge into a second prompt that asks for the answer, much like jotting down what you know about a topic before answering a trivia question.

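- Self-Consistency: Instead of relying on a single response, we sample several reasoning paths (for example, several chain-of-thought answers to the same question) and choose the final answer that appears most often, which tends to be more reliable than any single path.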
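Here is a minimal Python sketch of three of these techniques, assuming the model calls themselves happen elsewhere: `few_shot_prompt` builds an N-shot prompt, `chain_of_thought` adds the zero-shot “step by step” instruction, and `self_consistent_answer` takes a majority vote over final answers already extracted from several sampled completions. All function names are hypothetical, and the sample answers are made up.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def few_shot_prompt(examples: Sequence[Tuple[str, str]], query: str) -> str:
    """Build an N-shot prompt: N worked (question, answer) examples, then the new question.

    With an empty example list this degrades to a zero-shot prompt.
    """
    shots = [f"Q: {question}\nA: {answer}" for question, answer in examples]
    shots.append(f"Q: {query}\nA:")  # the model is expected to continue after "A:"
    return "\n\n".join(shots)


def chain_of_thought(query: str) -> str:
    """Zero-shot chain-of-thought: ask for step-by-step reasoning before the answer."""
    return f"Q: {query}\nA: Let's think step by step."


def self_consistent_answer(final_answers: List[str]) -> str:
    """Self-consistency: majority vote over the final answers of several sampled reasoning paths.

    Expects at least one answer.
    """
    # most_common orders equal counts by first occurrence, so ties go to the earliest answer.
    return Counter(answer.strip() for answer in final_answers).most_common(1)[0][0]


if __name__ == "__main__":
    examples = [("What is 2 + 2?", "4"), ("What is 3 + 5?", "8")]
    print(few_shot_prompt(examples, "What is 7 + 6?"))
    print(chain_of_thought("A shop sells pens at 3 for $2. How much do 12 pens cost?"))
    # Made-up final answers, as if parsed from five sampled chain-of-thought completions.
    print(self_consistent_answer(["13", "13", "12", "13", "11"]))  # → 13
```

Note that self-consistency only helps if the samples actually differ, which usually means sampling with some randomness rather than always taking the single most likely completion.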

In conclusion, prompt engineering is more than just an interesting facet of NLP—it’s an invaluable tool for shaping and optimizing language model behavior. Through careful design and innovative techniques, we can harness the full potential of these models and unlock new possibilities in natural language processing. So why not give it a shot? You might be surprised at what you can achieve!