The threat landscape is morphing faster than you can run a vulnerability assessment. Your legacy SIEM delivers slow incident response and endless false positives, and alert fatigue is making your SOC brittle.

It’s tough to keep up, especially as attackers exploit zero-day vulnerabilities that static rules miss. You might spend precious quarters on endpoint security upgrades, only to watch attackers sidestep those controls through credential stuffing. The solution is to apply machine learning where it actually works.

Machine Learning in Cybersecurity

Machine learning in cybersecurity is the use of data-driven algorithms to detect and respond to emerging threats. Unlike traditional rule-based systems explicitly programmed for each risk, ML uses historical inputs to recognize patterns and adapt.

Instead of relying on fixed rules, machine learning systems identify patterns in data and flag changes in user behavior. This lets SOC operators spot attacks that bypass conventional tools. Models can also generalize from known attack signatures to flag related attacks they haven’t seen before, reducing reliance on manual analysis.

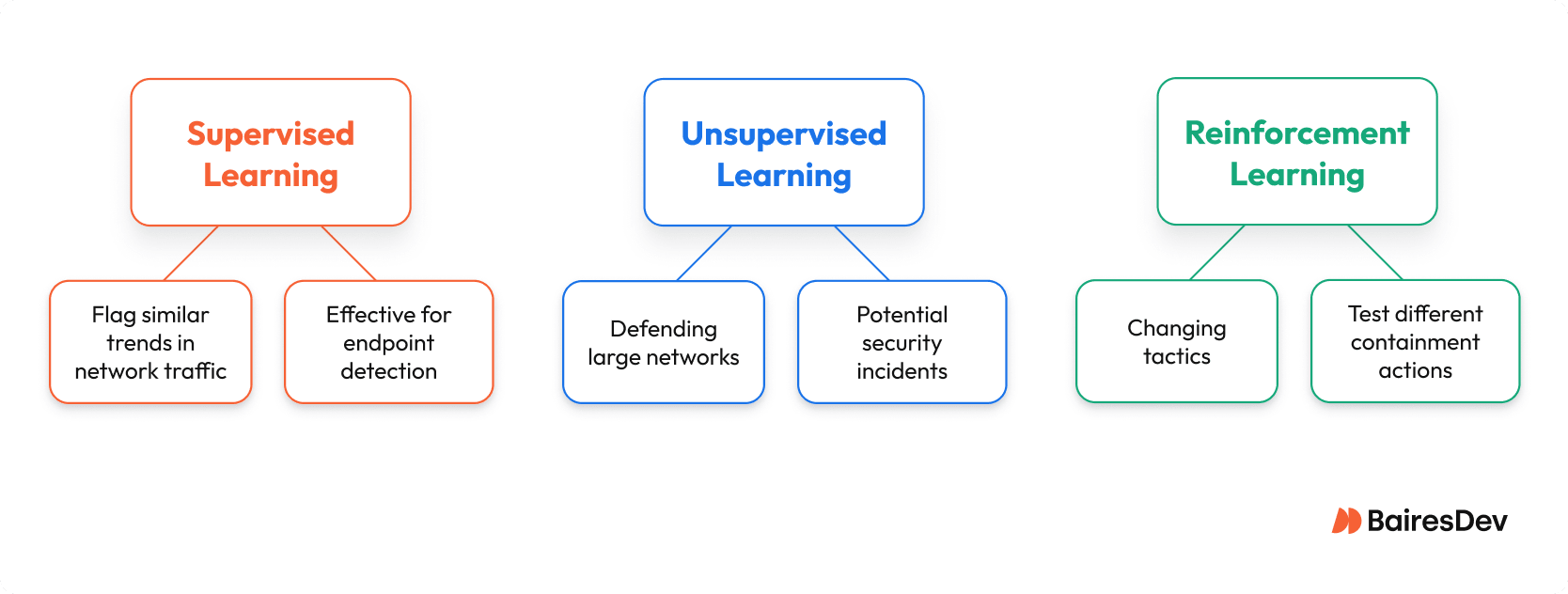

Three types of machine learning dominate cybersecurity, and each has different use cases.

Supervised Learning

Supervised machine learning works best when you have plenty of historical data labeled with examples such as known malicious files or phishing attempts. These systems learn what “bad” looks like by example, then flag similar patterns in new files, emails, or network traffic.

Supervised learning is highly effective for endpoint detection and vulnerability prioritization. Because it learns from historical examples, it excels in data-rich environments.

However, it’s only as strong as its labeled data. It can struggle to spot new breach vectors or subtle deviations from normal behavior patterns. For example, if a system relies too heavily on supervised alerting, it may miss a novel ransomware payload.
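To make the supervised idea concrete, here is a minimal sketch of a multinomial Naive Bayes classifier trained on a handful of hypothetical labeled email subjects. The training data, labels, and the `train`/`classify` helpers are illustrative assumptions, not a production phishing model; real systems use far larger labeled corpora and richer features.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    return text.lower().split()


def train(samples: list[tuple[str, str]]):
    """Count word frequencies per label (multinomial Naive Bayes)."""
    word_counts = {}  # label -> Counter of words
    label_counts = Counter()
    vocab = set()
    for text, label in samples:
        label_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in tokenize(text):
            counts[word] += 1
            vocab.add(word)
    return word_counts, label_counts, vocab


def classify(model, text: str) -> str:
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in label_counts.items():
        counts = word_counts[label]
        total_words = sum(counts.values())
        # Log prior plus Laplace-smoothed log likelihood of each word.
        score = math.log(n_docs / total_docs)
        for word in tokenize(text):
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical labeled history: known phishing vs. benign subjects.
history = [
    ("verify your account password now", "phishing"),
    ("urgent invoice payment required click link", "phishing"),
    ("your account is suspended verify now", "phishing"),
    ("team meeting moved to thursday", "benign"),
    ("quarterly report attached for review", "benign"),
    ("lunch order for friday", "benign"),
]
model = train(history)
print(classify(model, "please verify your password"))  # phishing
print(classify(model, "report for thursday meeting"))  # benign
```

The limitation described above is visible here: a phishing lure that uses none of the words seen in training gives the model almost nothing to go on.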

Unsupervised Learning

Unsupervised learning looks for anomalies in unlabeled data. This is key when defending large networks where potential threats may not fit into any known category. Think of it as watching for unusual account usage without needing a rulebook.

Unsupervised learning is powerful for network security, surfacing potential security incidents and novel attack vectors buried in complex network traffic. Tools like Splunk often use this approach.

The tradeoff? More detection noise. Because it’s not trained on known outcomes, it might flag benign changes like a new software rollout as risks. That can slow response unless paired with human judgment or a fallback signature-based system.
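A simple statistical baseline shows both the strength and the noise of the unsupervised approach. It learns the mean and spread of an unlabeled metric and flags values far outside that range. The sketch below applies a z-score to hypothetical hourly login counts. The threshold of 3 and the data are assumptions, and production tools use richer models such as clustering, isolation forests, or autoencoders.

```python
import statistics


def fit_baseline(values: list[float]) -> tuple[float, float]:
    """Learn 'normal' from unlabeled history: mean and sample standard deviation."""
    return statistics.mean(values), statistics.stdev(values)


def is_anomalous(value: float, baseline: tuple[float, float], z_threshold: float = 3.0) -> bool:
    mean, stdev = baseline
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > z_threshold


# Hypothetical hourly login counts for one service account (no labels needed).
login_counts = [40, 42, 38, 41, 39, 43, 40, 37, 42, 41]
baseline = fit_baseline(login_counts)

print(is_anomalous(41, baseline))   # False: within normal variation
print(is_anomalous(120, baseline))  # True: credential stuffing, or a benign rollout
```

The detector cannot tell those two explanations apart. That ambiguity is the noise problem described above, and it is why a human or rule-based check has to follow.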

Reinforcement Learning

Reinforcement learning thrives in fast-moving situations where attackers are always changing tactics. These machine learning algorithms learn by trial and error. For example, the model might test different containment actions on a suspicious file until it learns which one minimizes impact. Capture the Flag (CTF) exercises and similar simulated environments often serve as practical testbeds.

Reinforcement learning is valuable for:

- Automated threat response: Systems adapt in real time to contain breaches without waiting for human input.

- Firewall tuning: These models learn from traffic patterns to continuously refine rules, reducing manual adjustments.

- Security resource allocation: By learning from successes and failures, these models help rebalance security resources toward the most vulnerable points.
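The trial-and-error loop can be illustrated with an epsilon-greedy multi-armed bandit, the simplest reinforcement-learning setting. The agent repeatedly picks a containment action, observes a reward, and shifts toward whichever action has worked best. The action names and their hidden reward values below are hypothetical. In practice the reward would come from a simulated environment such as a cyber range or CTF-style exercise, not from live production systems.

```python
import random

ACTIONS = ["isolate_host", "kill_process", "block_ip"]

# Hypothetical average "impact reduction" of each action, unknown to the agent.
TRUE_REWARD = {"isolate_host": 0.5, "kill_process": 0.8, "block_ip": 0.3}


def simulate(action: str, rng: random.Random) -> float:
    """Stand-in for a simulated environment: noisy reward around the true value."""
    return TRUE_REWARD[action] + rng.gauss(0, 0.1)


def learn(episodes: int = 2000, epsilon: float = 0.1, seed: int = 7) -> dict[str, float]:
    rng = random.Random(seed)
    estimates = {a: 0.0 for a in ACTIONS}
    counts = {a: 0 for a in ACTIONS}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)              # explore
        else:
            action = max(ACTIONS, key=estimates.get)  # exploit the best estimate
        reward = simulate(action, rng)
        counts[action] += 1
        # Incremental average: move the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates


estimates = learn()
best = max(estimates, key=estimates.get)
print(best, {a: round(v, 2) for a, v in estimates.items()})
```

Epsilon controls the exploration trade-off. Too little exploration can lock the agent onto a mediocre action, and too much wastes episodes on actions already known to be poor.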

Real-World Benefits of Using ML in Cybersecurity

Enough theory. Let’s talk about what this actually does for you when the alerts start piling up. All the talk about ‘intelligent algorithms’ is useless unless ML changes the operational reality for your team.

When you do this right, ML isn’t a science project. It doesn’t deliver six unrelated “benefits.” It solves two fundamental problems: it finds the signal in the noise, and it moves faster than humans.

As a result, your engineers focus on what matters. Implemented correctly, those two capabilities show up in the following ways:

- Relieves Pressure on Overloaded Teams: ML handles early detection and first-pass triage, freeing engineers to focus on strategic initiatives instead of chasing alerts. For instance, one global organization reported a 12x improvement in mean time to resolve after using AI in its security operations.

- Faster Response: Learning systems find potential threats in network traffic before they escalate, which shortens response time and reduces breach impact. With platforms like Darktrace, responders can act before the attacker completes their move.

- Scalability Without Team Growth: One of AI’s biggest advantages is scaling defense efforts. Instead of hiring more analysts to watch dashboards, ML models filter malicious files automatically, even during high-volume events.

- Reduced Dwell Time for Attackers: By learning baseline behavior and flagging subtle shifts, intelligent algorithms reduce the time attackers stay hidden. Over time, they use past data to predict outcomes with increasing precision.

- Automation of Repetitive SOC Tasks: From spam filtering to alert triage, ML takes on the tasks that drain human attention. This eases alert fatigue and lowers the risk of missing real security incidents.

- Continuous Improvement With Evolving Threats: Unlike a static detection engine, a learning system adapts to new threats as it is retrained on more operational data.

But let’s be clear: these results aren’t automatic. You don’t just plug in an “AI box” and solve security. These outcomes are the reward for getting the implementation right. Get it wrong, and you just create a different set of problems.

Challenges and Risks of Machine Learning in Cybersecurity

Here’s a bad situation: Your team just rolled out ML-driven threat detection, expecting a step change in your security posture. Instead, your blue team is buried in alert fatigue, and now regulators are circling with audit questions about model explainability. Missed anomalies pile up because telemetry is spotty. Attacker tactics evolve faster than your supervised learning pipeline can adapt.

Adversarial Machine Learning

In adversarial ML, attackers craft inputs to subvert detection models. Poisoned training data or subtle evasion tactics can bypass anomaly detection, leaving endpoints exposed to zero-day attacks and insider threats. You might patch the obvious, but hidden gaps remain unless models are actively retrained and threat hunting never stalls.
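Evasion is easiest to see against a linear detector. In the hypothetical sketch below, a malware score is a weighted sum of features. An attacker who can probe the model pads the sample with benign-looking features until the score drops below the threshold, without removing any malicious capability. The weights, feature names, and threshold are illustrative assumptions.

```python
# Hypothetical linear detector: positive weights push toward "malicious".
WEIGHTS = {
    "calls_crypto_api": 2.0,
    "writes_to_startup": 1.5,
    "packed_binary": 1.0,
    "signed_by_known_vendor": -1.5,
    "common_ui_strings": -0.8,  # strings often seen in legitimate software
}
THRESHOLD = 2.5


def score(features: dict[str, float]) -> float:
    return sum(WEIGHTS.get(name, 0.0) * value for name, value in features.items())


def is_flagged(features: dict[str, float]) -> bool:
    return score(features) >= THRESHOLD


sample = {"calls_crypto_api": 1, "writes_to_startup": 1, "packed_binary": 1}
print(round(score(sample), 2), is_flagged(sample))    # 4.5 True

# Evasion: keep the malicious behavior, pad with benign-looking strings.
evasive = dict(sample, common_ui_strings=3)
print(round(score(evasive), 2), is_flagged(evasive))  # 2.1 False
```

Retraining on samples like `evasive` (adversarial training) is one common mitigation. Another is to constrain the model so that benign-looking features cannot offset clearly malicious ones.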

“Garbage In, Garbage Out” Data Issues

Inconsistent logging or flawed telemetry breaks behavioral analytics before it helps. Incident response systems falter, causing missed intrusions and unreliable DDoS protection. If your SIEM integration doesn’t enforce high-quality, normalized data, even the most advanced machine learning models will return false insights and lower your security automation ROI.
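A first line of defense against garbage in is to normalize every source into one schema and to reject records that can't be parsed, so they don't silently skew a model. The sketch below assumes two hypothetical log formats (a JSON auth log and a key=value firewall log) and an illustrative common schema. Real pipelines usually rely on a standard such as the Elastic Common Schema or their SIEM's own data model.

```python
import json
from datetime import datetime, timezone

# Hypothetical common schema: timestamp (UTC), user, source_ip, event.


def parse_auth_json(line: str) -> dict:
    """Parse a JSON auth log line (assumed format) into the common schema."""
    raw = json.loads(line)
    return {
        "timestamp": datetime.fromisoformat(raw["time"]).astimezone(timezone.utc),
        "user": raw["user"].lower(),
        "source_ip": raw["ip"],
        "event": "login_" + raw["result"],
    }


def parse_firewall_kv(line: str) -> dict:
    """Parse a key=value firewall log line (assumed format) into the common schema."""
    raw = dict(pair.split("=", 1) for pair in line.split())
    return {
        "timestamp": datetime.fromtimestamp(int(raw["ts"]), tz=timezone.utc),
        "user": raw.get("user", "").lower() or None,
        "source_ip": raw["src"],
        "event": "fw_" + raw["action"],
    }


def normalize(lines, parser):
    """Return (clean_records, rejected_lines) so bad telemetry is visible, not silent."""
    clean, rejected = [], []
    for line in lines:
        try:
            clean.append(parser(line))
        except (KeyError, ValueError, TypeError, AttributeError):
            rejected.append(line)
    return clean, rejected


auth_lines = [
    '{"time": "2024-05-01T09:00:00+02:00", "user": "Alice", "ip": "10.0.0.5", "result": "success"}',
    '{"time": "not-a-date", "user": "bob", "ip": "10.0.0.6", "result": "failure"}',
]
fw_lines = ["ts=1714546800 src=203.0.113.9 action=deny", "garbled line"]

auth_records, auth_rejected = normalize(auth_lines, parse_auth_json)
fw_records, fw_rejected = normalize(fw_lines, parse_firewall_kv)
print(len(auth_records), len(auth_rejected))  # 1 1
print(auth_records[0]["timestamp"].isoformat(), auth_records[0]["user"])
print(fw_records[0]["timestamp"] == auth_records[0]["timestamp"])  # True: both 07:00 UTC
```

Tracking the rejected lines, not just the clean ones, shows which sources are degrading before the model's accuracy does.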

Alert Fatigue from Overly Sensitive/Poorly Tuned Models

Poor tuning floods SOCs with low-confidence alerts, and blue teamers drown in notifications. Without regular retraining on fresh labeled data, models lose touch with real-world threats, undermining network traffic analysis and vulnerability assessment.

Insider Threat Blind Spots

Sophisticated behavioral analytics can still overlook slow, subtle internal risks. For example, you might miss gradual data classification violations or credential stuffing attempts if anomaly detection baselines are outdated.
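Slow drift is exactly what per-event thresholds miss, because each day looks only slightly unusual. A cumulative-sum (CUSUM) check accumulates small deviations from a baseline until they add up to an alarm. The sketch below applies a one-sided CUSUM to hypothetical daily counts of files a user downloads from a sensitive share. The baseline mean, the slack `k`, and the alarm level `h` are assumptions to tune on your own data.

```python
def cusum_alarm(values, baseline_mean, k, h):
    """One-sided CUSUM: return the index where accumulated upward drift exceeds h, or None.

    k is the slack (deviation tolerated per step); h is the alarm level.
    """
    s = 0.0
    for i, x in enumerate(values):
        s = max(0.0, s + (x - baseline_mean - k))
        if s > h:
            return i
    return None


# Hypothetical daily downloads from a sensitive share: normal is about 10 per day.
# The user creeps up by a file or two per day and never spikes.
daily = [10, 9, 11, 10, 12, 13, 13, 14, 15, 15, 16]

print(any(x > 20 for x in daily))                       # False: no single-day spike
print(cusum_alarm(daily, baseline_mean=10, k=1, h=10))  # 8: alarm on day index 8
```

The same logic only helps if `baseline_mean` reflects current normal behavior, which is why outdated baselines create blind spots.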

Explainability and Audit Pain

Black-box inference leaves compliance teams frustrated. When regulators ask for reasoning behind key intrusion detection or incident response decisions, you could struggle to make machine learning’s logic transparent.

Cloud vs. On-Prem Gaps

Machine learning models tuned for cloud security excel at ephemeral workloads, but hybrid or legacy SIEM integration often creates loose ends. Without careful mapping, your defense in depth crumbles across mixed architectures.

When to Invest in ML

Whether or not you invest in machine learning comes down to the same criteria we use for any strategic capability: needs, resources and readiness.

For complex environments facing a high volume of cyber threats, ML can help build adaptable defenses. When telemetry is plentiful, it can shorten dwell time and lower incident costs.

Investment also makes sense if ML can integrate seamlessly into SOC workflows like correlating alerts and enriching automated threat detection. Mikko Hyppönen has emphasized that hackers are using AI tools, but defenders can gain the advantage by using AI more strategically.

On the other hand, low-complexity environments often gain little from heavy AI deployment. If cyber risks are minimal, simpler tools may be enough for classification tasks such as sorting benign from malicious samples. In these cases, over-investing in ML adds unnecessary complexity.

Cutting False Positives

Detection noise erodes trust and overwhelms analysts who should be focusing on real vulnerabilities. One direct fix is to tighten confidence thresholds so low-certainty alerts are suppressed or held for review.

Use Explainability and Confidence Thresholds

Clear explainability in ML outputs helps teams separate normal behavior from genuine anomalies. Confidence thresholds let intrusion detection systems hold back low-certainty signals. For example, if a system flags unusual login activity with only 40% confidence, it can hold the flag for later review instead of alerting an analyst immediately.
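The 40% example maps naturally onto a small routing function with two thresholds. High-confidence detections page an analyst, mid-range ones go to a review queue, and the rest are only logged. The cut-off values and route names below are hypothetical and would be tuned against your own false-positive data.

```python
from enum import Enum


class Route(Enum):
    PAGE_ANALYST = "page"
    REVIEW_QUEUE = "review"
    LOG_ONLY = "log"


def route_alert(confidence: float, page_at: float = 0.85, review_at: float = 0.30) -> Route:
    """Route a detection by model confidence (threshold values are assumptions)."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= page_at:
        return Route.PAGE_ANALYST
    if confidence >= review_at:
        return Route.REVIEW_QUEUE  # held for later review, like the 40% login flag
    return Route.LOG_ONLY


for c in (0.95, 0.40, 0.10):
    print(c, route_alert(c).value)
```

Keeping a middle "review" band, rather than a single cut-off, lets you raise the paging bar without discarding borderline signals entirely.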

Pair Machine Learning With Rule-Based Safeguards

Combining ML with traditional security rules offers a safety net. If a model flags an event but rule-based checks confirm no violation, that alert can be deprioritized. This hybrid approach helps maintain coverage across diverse security data sources.
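One way to express this hybrid is a priority function that combines the model's score with a deterministic rule check. In the sketch below, the rule (a hypothetical allowlist of approved admin jump hosts) and the priority labels are assumptions. Agreement raises priority, disagreement lowers it, and a rule hit is never dropped just because the model missed it.

```python
from dataclasses import dataclass

APPROVED_ADMIN_HOSTS = {"jump-01", "jump-02"}  # hypothetical policy


@dataclass
class Event:
    user: str
    source_host: str
    is_admin_login: bool
    ml_score: float  # model's probability that the event is malicious


def rule_violation(event: Event) -> bool:
    """Deterministic check: admin logins must come from an approved jump host."""
    return event.is_admin_login and event.source_host not in APPROVED_ADMIN_HOSTS


def priority(event: Event, ml_threshold: float = 0.7) -> str:
    ml_flag = event.ml_score >= ml_threshold
    rule_flag = rule_violation(event)
    if ml_flag and rule_flag:
        return "high"    # both agree: escalate
    if rule_flag:
        return "medium"  # rule catches what the model missed
    if ml_flag:
        return "low"     # model-only signal with no policy violation: deprioritize
    return "none"


print(priority(Event("alice", "laptop-17", True, 0.90)))  # high
print(priority(Event("bob", "jump-01", True, 0.90)))      # low
print(priority(Event("carol", "laptop-3", True, 0.20)))   # medium
```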

Mapping to MITRE ATLAS for Validation

MITRE ATLAS catalogs adversary techniques against AI systems, complementing MITRE ATT&CK’s coverage of conventional attacker behavior. Mapping detections to these frameworks helps confirm that anomalies correspond to known attack behaviors rather than noise. By building ATLAS tactics into data collection and validation, data scientists and security engineers can filter noise without suppressing genuine detections. Human expertise remains critical to refining ML outputs. Or as Katie Moussouris puts it, “No AI can replace fresh human perspective, especially in high-pressure security moments.”
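In code, the mapping step can be as simple as a lookup from detector output to technique IDs, with unmapped detections routed to human review rather than discarded. For illustration, the sketch uses three MITRE ATT&CK technique IDs: T1110.004 (Credential Stuffing), T1078 (Valid Accounts), and T1566 (Phishing). ATLAS technique IDs for attacks on the models themselves would slot into the same table. The detector names are hypothetical.

```python
# Hypothetical detector names mapped to MITRE ATT&CK technique IDs.
TECHNIQUE_MAP = {
    "many_failed_logins_many_accounts": ["T1110.004"],  # Brute Force: Credential Stuffing
    "login_from_new_country": ["T1078"],                # Valid Accounts
    "suspicious_link_in_email": ["T1566"],              # Phishing
}


def enrich(detection: dict) -> dict:
    """Attach technique IDs; anything unmapped is sent to a human instead of dropped."""
    techniques = TECHNIQUE_MAP.get(detection["detector"], [])
    return {
        **detection,
        "techniques": techniques,
        "needs_human_review": not techniques,
    }


print(enrich({"detector": "login_from_new_country", "user": "alice"}))
print(enrich({"detector": "odd_dns_pattern", "user": "bob"}))
```

A growing share of unmapped detections is itself a useful signal. It points either to noise or to behavior your mapping hasn't caught up with.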

Beating ML-Powered Attackers at Their Own Game

In 2022, researchers identified a polymorphic malware strain targeting European energy infrastructure. The malware altered its code with each infection attempt, making it difficult for traditional defenses to contain. Attackers are training machine learning models to subvert security operations.

Adaptive Tactics

The ENISA Threat Landscape 2024 report notes a rise in AI-enabled threats, from deep learning-based phishing campaigns to training-data poisoning. Some of these tactics make unusual sequences harder to identify because they mimic activity the system already trusts. Others aim to overwhelm defenses with clutter.

Turning the Tables With Testing and Intelligence

Defenders can run adversarial tests guided by MITRE ATLAS, simulating attacker behavior against their machine learning defenses. This lets teams validate models against realistic attack data. Tools like Metasploit make it possible to reproduce breach scenarios and adjust ML models until they consistently flag malicious actions while ignoring safe anomalies.
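The tuning loop can be sketched as a threshold sweep over replayed, labeled scenarios. Pick the highest alert threshold that still catches every malicious replay, then check how many benign anomalies it lets through. The scores and labels below are hypothetical stand-ins for a detector's output on replayed attack traffic and known-safe activity.

```python
def evaluate(scores, labels, threshold):
    """Return (detection_rate, false_positive_rate) at a given alert threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if y and s >= threshold)
    fp = sum(1 for s, y in zip(scores, labels) if not y and s >= threshold)
    positives = sum(labels)
    negatives = len(labels) - positives
    return tp / positives, fp / negatives


def pick_threshold(scores, labels):
    """Highest threshold that still detects every malicious replay."""
    return min(s for s, y in zip(scores, labels) if y)


# Hypothetical detector scores on replayed scenarios (True = malicious replay).
scores = [0.92, 0.81, 0.77, 0.40, 0.65, 0.30, 0.15, 0.70]
labels = [True, True, True, False, False, False, False, False]

t = pick_threshold(scores, labels)
print(t, evaluate(scores, labels, t))        # 0.77 (1.0, 0.0)
print(evaluate(scores, labels, 0.6))         # (1.0, 0.4): lower bar, more false alarms
```

If no threshold separates the two sets, that is a signal to retrain or add features rather than keep lowering the bar.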

When done well, this approach transforms recorded activity into actionable insight. It lets teams keep pace with evolving algorithms in an increasingly intelligent battle space.

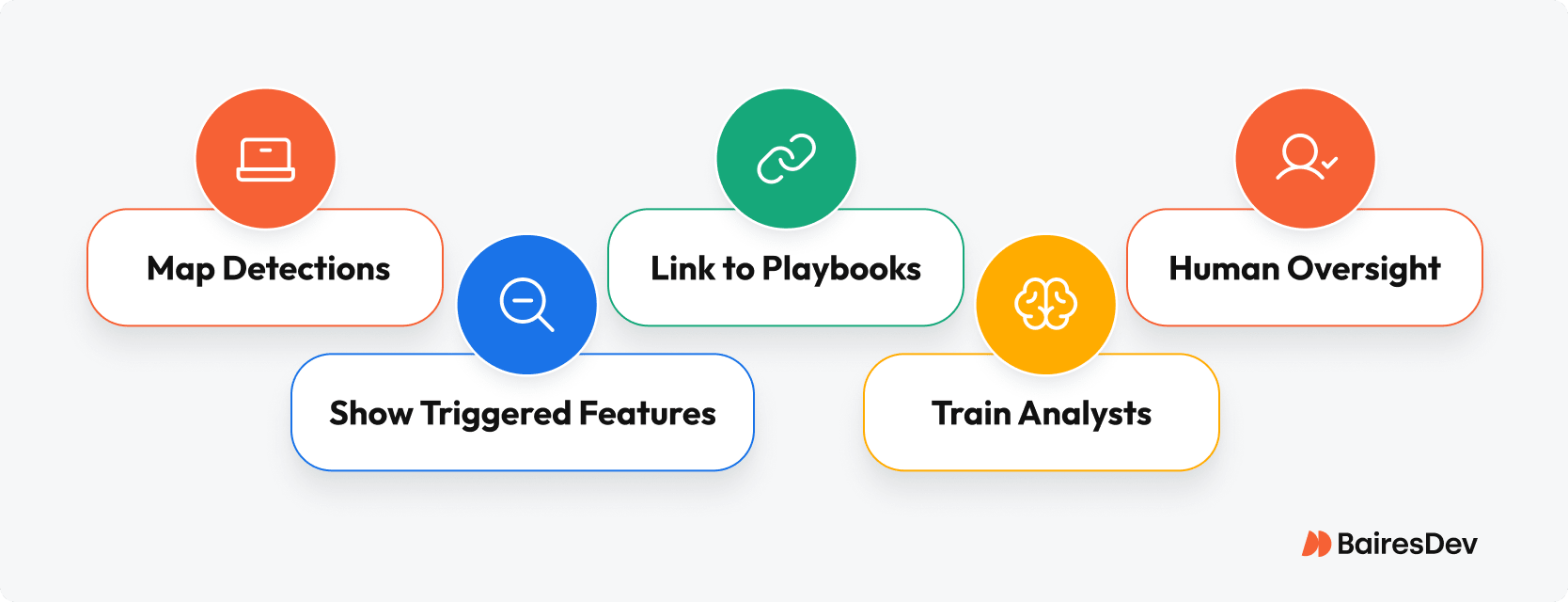

Deploying Explainable ML

Trust grows when your team knows why a system flagged a vulnerability; otherwise, analysts will second-guess every alert. If an alert includes the indicators that triggered it, you can verify the threat more confidently.
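For linear or additive models, the indicators behind an alert can be read directly from per-feature contributions (weight times value). The sketch below ranks those contributions for a hypothetical login-risk model. The weights and feature names are assumptions. For non-linear models, teams typically turn to attribution methods such as SHAP to produce a similar breakdown.

```python
# Hypothetical weights of a linear login-risk model.
WEIGHTS = {
    "new_device": 1.2,
    "impossible_travel": 2.5,
    "off_hours": 0.6,
    "mfa_passed": -1.8,
}


def explain(features: dict[str, float], top_n: int = 3) -> list[tuple[str, float]]:
    """Return the features that pushed the score up the most, largest first."""
    contributions = {
        name: WEIGHTS[name] * value for name, value in features.items() if name in WEIGHTS
    }
    ranked = sorted(contributions.items(), key=lambda item: item[1], reverse=True)
    return [(name, c) for name, c in ranked[:top_n] if c > 0]


login = {"new_device": 1, "impossible_travel": 1, "off_hours": 1, "mfa_passed": 0}
print(explain(login))
# [('impossible_travel', 2.5), ('new_device', 1.2), ('off_hours', 0.6)]
```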

- Map Detections to Known TTPs: Align ML models with MITRE ATT&CK so every flagged event connects to recognized attacker activity. This helps security operations contextualize notifications within broader cybersecurity strategies.

- Show Triggered Features in Alerts: Present the exact indicators from supervised learning or anomaly detection that caused an alert. Transparency in analyzing data turns abstract findings into actionable threat intelligence, reducing uncertainty and unnecessary repetitive tasks.

- Link to Playbooks for Response: Pair each signal with a predefined mitigation pathway. Whether a risk was flagged by an ML model or by rule- or pattern-based analysis, playbooks keep responses consistent and efficient.

- Train Analysts on Reading ML Outputs: Equip teams to interpret outputs from machine learning models. Understanding how algorithms weigh evidence helps analysts decide when to act on an alert and when to question it.

- Keep Human Oversight in the Loop: Even with advanced automation, human review prevents overreliance. Tools like Darktrace pair AI with human discernment for the best response to cybersecurity threats.

High-ROI ML Use Cases

In threat recognition, monitoring logins often surfaces compromise faster than watching the network edge. Shifting from perimeter control to identity-focused signals gives earlier insight into credential misuse and session hijacking. It’s a higher-leverage approach in modern environments, where attackers increasingly operate inside trusted boundaries.

When intelligent engines baseline session activity in Identity and Access Management (IAM), they can quickly spot anomalies even when the traffic looks clean. These systems use labeled datasets to distinguish legitimate variation from signs of compromise. For example, if a user typically logs in from New York but suddenly accesses sensitive systems from overseas, the engine flags the deviation.
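A per-user baseline for the New York example can be sketched as a frequency table of where each user normally logs in, with anything rare or unseen flagged. The location data, the 5% rarity cut-off, and the minimum-history rule are hypothetical. Production identity tools baseline many more signals, such as device, time of day, resource, and peer group.

```python
from collections import Counter, defaultdict


class LoginBaseline:
    def __init__(self, min_share: float = 0.05, min_history: int = 10):
        self.min_share = min_share      # locations seen less often than this are unusual
        self.min_history = min_history  # don't judge users with too little history
        self.locations = defaultdict(Counter)

    def observe(self, user: str, location: str) -> None:
        self.locations[user][location] += 1

    def is_unusual(self, user: str, location: str) -> bool:
        history = self.locations[user]
        total = sum(history.values())
        if total < self.min_history:
            return False  # not enough data for a baseline; defer to rules
        return history[location] / total < self.min_share


baseline = LoginBaseline()
for _ in range(40):
    baseline.observe("alice", "New York, US")
baseline.observe("alice", "Boston, US")

print(baseline.is_unusual("alice", "New York, US"))   # False
print(baseline.is_unusual("alice", "Frankfurt, DE"))  # True: never seen before
```

Note the `min_history` guard: new users have no baseline yet, so their logins fall back to policy rules instead of producing a flood of "unusual" flags.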

Perimeter-focused tools can miss these early warning signs, especially when attackers operate inside trusted environments. Behavioral baselining inside IAM systems saves analyst time and cuts down on human error. With platforms like CrowdStrike Falcon, this approach surfaces subtle risks before they escalate.

Pairing ML-driven identity monitoring with policy-driven logic adds resilience. Alerts from IAM analysis can be cross-verified against access control policies. This hybrid model offers the coverage of advanced analytics with the clarity of traditional defense tactics, making it one of the highest-ROI moves in modern vulnerability detection.

Minimum Viable ML Defense Blueprint

When standing up a first-phase ML defense, avoid overengineering. Start with a clear, measurable framework that maps directly to exploit vectors and operational needs. The goal is fast wins instead of theoretical elegance.

Prioritize Threat Families

Identify high-impact attack types from the ENISA Threat Landscape 2024, then match each to an ML detector with a rule-based fallback. This ensures coverage without over-reliance on automation and reduces false positives by cross-verifying signals. Calibrating against recorded activity keeps responses rooted in real-world patterns.
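Keeping the threat-family-to-detector mapping as data makes gaps easy to spot. The families below are examples only, and every detector and rule name is hypothetical. The check reports any family that lacks either an ML detector or a rule-based fallback.

```python
# Hypothetical coverage matrix: each threat family needs an ML detector and a rule fallback.
COVERAGE = {
    "ransomware": {"ml": "file_entropy_model", "rule": "mass_rename_rule"},
    "social_engineering": {"ml": "phishing_classifier", "rule": "sender_spoofing_rule"},
    "ddos": {"ml": "traffic_volume_baseline", "rule": None},
}


def coverage_gaps(coverage: dict) -> list[str]:
    """List families missing either layer of detection."""
    gaps = []
    for family, layers in coverage.items():
        missing = [layer for layer in ("ml", "rule") if not layers.get(layer)]
        if missing:
            gaps.append(f"{family}: missing {', '.join(missing)}")
    return gaps


print(coverage_gaps(COVERAGE))  # ['ddos: missing rule']
```

Running a check like this in CI alongside detector changes keeps the "every ML detector has a fallback" rule from eroding quietly.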

Harden Data Pipelines

Apply the data-poisoning mitigations described in NIST AI 100-2 to your training pipeline. Machine learning relies on clean inputs, and protecting them from manipulation preserves the model’s ability to recognize deviations from legitimate user behavior. This step directly supports more accurate threat detection.
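One concrete integrity control is a hash manifest. Record a SHA-256 digest of each approved training file, and refuse to train if any file has changed, disappeared, or appeared without approval. This minimal sketch uses only the standard library, and the file layout is hypothetical. It guards against tampering with stored data. It does not catch poisoned samples that were already malicious when they were approved.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    return {p.name: sha256_of(p) for p in sorted(data_dir.glob("*.csv"))}


def verify(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the data matches the approved manifest."""
    current = build_manifest(data_dir)
    problems = [f"modified: {n}" for n in manifest if n in current and current[n] != manifest[n]]
    problems += [f"missing: {n}" for n in manifest if n not in current]
    problems += [f"unapproved: {n}" for n in current if n not in manifest]
    return problems


with tempfile.TemporaryDirectory() as tmp:
    data_dir = Path(tmp)
    (data_dir / "logins.csv").write_text("user,country\nalice,US\n")
    approved = build_manifest(data_dir)
    clean_result = verify(data_dir, approved)

    # Simulate tampering: an attacker appends an injected row.
    with (data_dir / "logins.csv").open("a") as f:
        f.write("mallory,US\n")
    tampered_result = verify(data_dir, approved)

print(clean_result)     # []
print(tampered_result)  # ['modified: logins.csv']
```

In a real pipeline, the manifest itself should be stored and signed separately from the data, or an attacker can simply regenerate it.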

Validate With Adversarial Testing

Run red/blue team drills based on MITRE ATLAS techniques to simulate attacker adaptation. Use tools like CrowdStrike Falcon to evaluate how ML responds to evasive maneuvers. Then fine-tune settings to reduce false alarms.

Standardize Explainability

Require every alert to show triggered features and mapped TTPs. This transparency lets engineers assess detection outcomes quickly, aligning system decisions with historical baselines.
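The requirement can be enforced mechanically by rejecting any alert that lacks triggered features or technique mappings before it reaches an analyst. The field names below form a hypothetical schema, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    title: str
    confidence: float
    triggered_features: dict[str, float] = field(default_factory=dict)
    techniques: list[str] = field(default_factory=list)  # e.g. MITRE ATT&CK IDs

    def __post_init__(self):
        # Refuse to create an alert that an analyst could not verify.
        if not self.triggered_features:
            raise ValueError(f"alert '{self.title}' has no triggered features")
        if not self.techniques:
            raise ValueError(f"alert '{self.title}' has no mapped TTPs")


ok = Alert("Unusual admin login", 0.91,
           triggered_features={"impossible_travel": 2.5}, techniques=["T1078"])

try:
    Alert("Opaque anomaly", 0.88)
    rejected = None
except ValueError as err:
    rejected = str(err)

print("rejected:", rejected)
```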

Iterate Gradually

Deploy changes in small, measured steps, validating each against ROI metrics before scaling. Incremental rollout avoids overwhelming teams and preserves stability while new machine learning elements integrate into existing workflows.

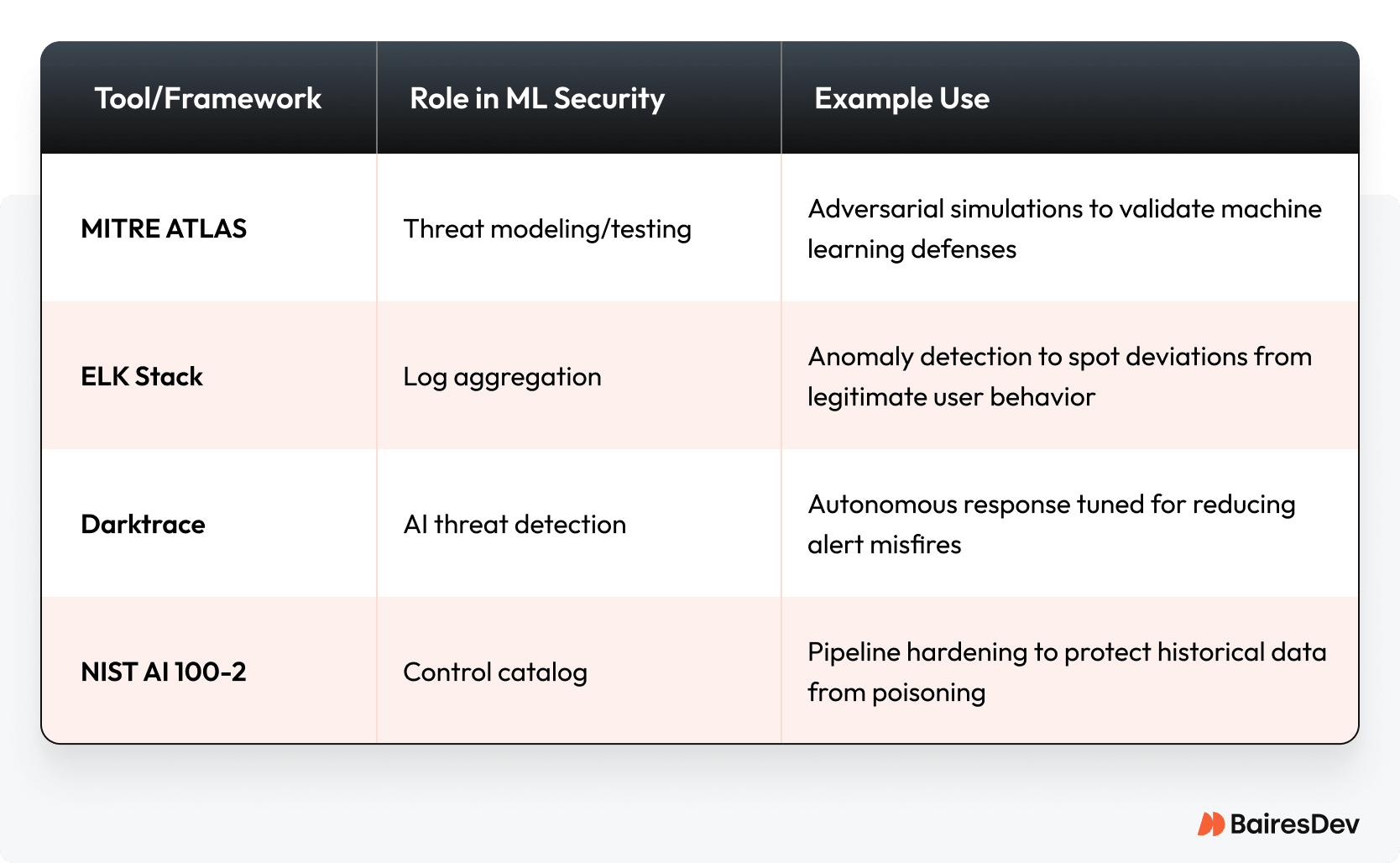

Tools and Frameworks for ML in Cybersecurity

The right tools make ML systems more reliable and keep their baselines of normal activity grounded in real data. But these frameworks aren’t plug-and-play. They require deliberate integration and oversight.

MITRE ATLAS gives security teams a structured way to test and challenge detection models. The ELK Stack, on the other hand, cuts false positives by enriching alerts with contextual logs that help analysts rule out benign activity.

Darktrace applies behavioral analysis to spot subtle deviations from established patterns, while NIST AI 100-2 provides a taxonomy of adversarial attacks and mitigations that can anchor governance guardrails around data integrity. Together, these tools and frameworks help ML adapt to real-world environments.

Quick Start Checklist

Start small by deploying machine learning on a narrow set of use cases, then scale once you understand how the models behave on known-good activity. This approach avoids missteps and preserves trust in the system.

- Start small and specific: Pick one high-impact use case like phishing detection and tune the engine there first.

- Pair ML with known-good rules: Use rules to validate ML outputs and catch what the framework might miss.

- Harden your data pipeline: Protect training data from manipulation. Garbage in still means garbage out.

- Require explainability from day one: If a model can’t show why it made a decision, it’s not ready for production.

- Train your team early: Analysts and engineers should know how to read engine outputs.

- Monitor drift and refresh often: Set a cadence for retraining based on how fast the threat landscape shifts in your environment.

- Define success metrics upfront: Track dwell time and response times to prove value and spot trouble early.
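Dwell time and response time are simple to compute once incidents carry consistent timestamps. The sketch assumes a hypothetical incident record with three times: when the intrusion began (often established only in hindsight), when it was detected, and when it was resolved. Definitions vary between teams, so fix yours before measuring the pre-rollout baseline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    started: datetime   # earliest attacker activity, from investigation
    detected: datetime
    resolved: datetime


def mean_hours(deltas: list[timedelta]) -> float:
    return mean(d.total_seconds() for d in deltas) / 3600


def soc_metrics(incidents: list[Incident]) -> dict[str, float]:
    return {
        "mean_dwell_hours": mean_hours([i.detected - i.started for i in incidents]),
        "mean_response_hours": mean_hours([i.resolved - i.detected for i in incidents]),
    }


t0 = datetime(2024, 3, 1, 8, 0)
incidents = [
    Incident(t0, t0 + timedelta(hours=30), t0 + timedelta(hours=34)),
    Incident(t0, t0 + timedelta(hours=10), t0 + timedelta(hours=12)),
]
print(soc_metrics(incidents))  # {'mean_dwell_hours': 20.0, 'mean_response_hours': 3.0}
```

Compute these for a period before the ML rollout and again after each phase, so improvements (or regressions) show up as numbers rather than impressions.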

Machine Learning in Cybersecurity: Key Takeaways

Blend automation with rule-based safeguards and human oversight. Tools like Wireshark and endpoint detection systems work best when paired with human expertise, so unwarranted alerts don’t overwhelm the process.

Plan for an evolving AI threat landscape by refining models and integrating new methods without disrupting stability. This balance ensures accuracy and adaptability.

For proven, enterprise-scale ML deployment that aligns with established patterns, partner with experts who know how to fine-tune firewall rules.

Frequently Asked Questions

How do we prevent model drift?

Regularly retrain models with updated, high-quality information and monitor for shifts in user behavior or attacker tactics.
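One common way to monitor drift is the population stability index (PSI), which compares how a feature is distributed in recent traffic versus the training data. The sketch below is a minimal version over pre-binned counts. The bins, the data, and the often-quoted rule of thumb that a PSI above about 0.2 signals meaningful drift are assumptions to calibrate for your environment.

```python
import math


def psi(expected_counts: list[int], actual_counts: list[int], eps: float = 1e-6) -> float:
    """Population stability index between two binned distributions."""
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    total = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # avoid log(0) on empty bins
        a_pct = max(a / a_total, eps)
        total += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return total


# Hypothetical login-hour histogram: night, morning, afternoon, evening.
training = [50, 400, 450, 100]
this_week_stable = [55, 390, 455, 100]
this_week_shifted = [300, 250, 300, 150]

print(round(psi(training, this_week_stable), 3))   # near zero: no action needed
print(round(psi(training, this_week_shifted), 3))  # well above 0.2: investigate, likely retrain
```

A PSI spike tells you the inputs changed, not why. A shift toward night-time logins could be a new offshore team or an attacker, so pair the metric with a human look before retraining on the new data.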

What’s the ROI timeline for SOC-based ML?

Most teams see measurable gains within 3 to 6 months of phased deployment.

Will ML disrupt our existing SIEM or SOAR tools?

Not if deployed incrementally. Well-integrated AI augments your current stack by automating triage, not replacing it.

Is ML safe for regulated environments?

Yes, with proper controls (e.g., mitigations informed by NIST AI 100-2) and logging, ML can meet audit and compliance requirements.