Imagine rolling out an AI system to optimize hiring, only to face a federal age discrimination lawsuit because the software filtered out older applicants. That’s exactly what happened to iTutorGroup, which paid $365,000 in 2023 to settle an Equal Employment Opportunity Commission (EEOC) case after its automated screening process rejected more than 200 qualified applicants based on age alone.

This is just one example. AI is making critical business decisions in hiring, finance, healthcare, and security, and without strong ethical safeguards, it’s only a matter of time before problems arise. As regulators tighten the rules (GDPR, the EU AI Act, and others), organizations caught on the wrong side of AI bias face lawsuits, reputational damage, financial losses, and operational setbacks.

How do you plan to stay ahead? Whether you build in-house solutions or partner with an AI development company, this guide breaks down the risks and gives you a playbook for deploying LLMs responsibly so your AI strategy is both legally sound and built to last.

AI Bias and the Hidden Risks in Business Applications

Artificial intelligence isn’t inherently neutral. Large language models (LLMs) and other machine learning systems don’t form opinions of their own; their judgments reflect patterns in their training data (books, hiring records, financial transactions, legal documents), and that’s exactly where AI bias creeps in.

AI bias happens when a model’s training data carries discrimination. The model picks up those historical inequalities, applies them to real-world decisions, and ends up reinforcing the same disparities in hiring, banking, law enforcement, and beyond.

This kind of bias usually isn’t intentional; it’s built into the system. And for businesses, it’s a liability too big to ignore.

The Reality of AI Bias in LLMs

AI can amplify bias over time. Once an algorithm learns from flawed data, those patterns don’t just persist; they scale, spread, and become harder to detect.

So the problem isn’t only in the initial training. It’s in how AI models absorb, apply, reinforce, and accelerate those biases in real-world decisions.

Here’s how it happens:

- Bias starts at the data source – AI pulls from historical records that weren’t designed to be fair. If a company’s past hiring data skews toward certain demographics or a bank’s loan approvals favor wealthier zip codes, the AI assumes those trends are standard.

- Flawed patterns turn into decision-making logic – Once an AI system identifies trends, it applies them as if they’re objective rules. So, if certain groups were underrepresented in leadership roles or higher-paying jobs in the past, the AI predicts they’re less likely to be a fit now, even when group membership has nothing to do with ability.

- Automation makes bias harder to catch – Unlike human decision-makers who can stop and reassess, AI applies its learned rules at scale. While a biased hiring manager may make dozens of unfair decisions, a biased AI model can make thousands in minutes, spreading discrimination across entire organizations and industries.
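To make the first two steps concrete, here is a deliberately naive sketch assuming a hypothetical lender whose historical approval records are keyed by zip code (all data invented). The "model" simply memorizes each zip code’s past approval rate and treats it as a rule, so two applicants with identical incomes get different outcomes. Real models are far more complex, but zip code and similar proxy features can carry the same skew into their predictions.

```python
from collections import defaultdict

# Hypothetical historical loan decisions: (zip_code, income_k, approved).
# The skew is baked in: zip "10001" was approved far more often than "60621".
HISTORY = [
    ("10001", 55, True), ("10001", 48, True), ("10001", 52, True), ("10001", 40, False),
    ("60621", 56, False), ("60621", 50, False), ("60621", 58, True), ("60621", 45, False),
]


def learn_zip_rates(history):
    """'Train' by memorizing each zip code's historical approval rate."""
    totals, approvals = defaultdict(int), defaultdict(int)
    for zip_code, _income, approved in history:
        totals[zip_code] += 1
        approvals[zip_code] += int(approved)
    return {z: approvals[z] / totals[z] for z in totals}


def score(applicant, zip_rates, threshold=0.5):
    """Apply the learned pattern as if it were an objective rule."""
    zip_code, _income = applicant
    return zip_rates.get(zip_code, 0.0) >= threshold


rates = learn_zip_rates(HISTORY)
# Two applicants with the same income, differing only by zip code.
print(score(("10001", 50), rates))  # True
print(score(("60621", 50), rates))  # False
```

Run over thousands of applications per day, the same lookup would repeat the historical skew at a scale no single loan officer could.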

Real-World Example: AI Penalizing Women in Hiring

Take the experimental AI recruiting tool Amazon built and later abandoned, as Reuters reported in 2018. The goal was a system where you’d drop in 100 resumes, and it would pick the top five to hire. The model was trained on resumes submitted to the company over a ten-year period, most of which came from men, reflecting male dominance in the tech industry. As a result, it learned to downgrade resumes that included the word ‘women’s,’ as in ‘women’s chess club captain.’

Industry-Specific AI Bias Risks

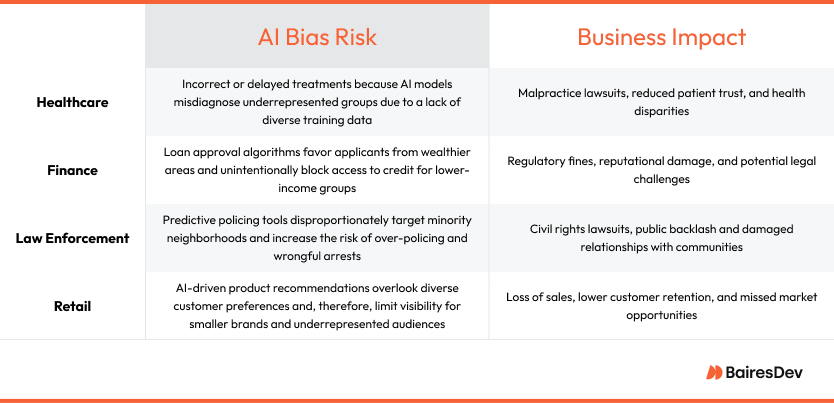

AI bias doesn’t show up the same way in every industry. It plays out differently in finance than it does in retail, and the consequences aren’t one-size-fits-all either. Here’s how it affects different sectors:

As these examples show, AI bias risks are already playing out, and businesses that ignore them are inviting PR disasters and reputational damage while setting themselves up for serious legal and financial fallout.

Fixing AI bias, however, takes more than improving the technology or tweaking an algorithm; it also means tailoring solutions to each industry. What works for a hospital’s diagnostic AI won’t necessarily apply to a bank’s lending model.

Practical Steps to Reduce AI Bias

Bias doesn’t disappear on its own; addressing it takes a structured, proactive approach. Steps you can take before it becomes a liability include:

- Audit AI models on a regular basis – Never assume a system is making fair decisions. Run bias checks frequently using fairness-aware machine learning techniques to catch skewed patterns before they scale.

- Expand training data diversity – AI models are only as good as the data they learn from. Therefore, we highly recommend making sure datasets include different demographics, languages, socioeconomic backgrounds, and geographic regions to prevent the system from reinforcing historical discrimination.

- Use Explainable AI (XAI) – A black-box model is a compliance and trust nightmare. Transparent AI makes it easier to spot and correct bias and helps businesses justify decisions when regulators or customers ask.

- Establish cross-functional ethics review boards – AI decisions affect finance, HR, legal, and customer experience, so oversight can’t be left to engineers alone. A dedicated review team can make sure fairness considerations are built into the process.

- Introduce Human Oversight (Human-in-the-Loop Systems) – Leaving high-stakes decisions entirely to an algorithm is risky, which is why firms should integrate human oversight at key decision points. Experts can then step in when AI-generated recommendations seem flawed or unfair, catching biases before they affect real people.

- Stress-Test AI with Edge Cases – Although AI models are great at recognizing patterns, they struggle with scenarios they haven’t seen before. That’s why businesses should actively test their systems using adversarial examples before deployment to identify weak spots and expose hidden biases.
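A regular bias audit can start with something as simple as comparing selection rates across groups. The sketch below computes the disparate impact ratio and checks it against the "four-fifths" rule of thumb from the U.S. Uniform Guidelines on Employee Selection Procedures (a screening heuristic, not a legal safe harbor). It also adds a counterfactual edge-case test that changes one protected attribute and checks whether the decision flips. The `screen` function and applicant records are hypothetical stand-ins for the model you are auditing, deliberately written with an age cutoff so the checks have something to find.

```python
def screen(applicant):
    """Hypothetical model under audit; it (wrongly) rejects anyone aged 55+."""
    return applicant["years_experience"] >= 3 and applicant["age"] < 55


def age_band(applicant):
    return "55+" if applicant["age"] >= 55 else "under 55"


def selection_rates(applicants, model, group_of):
    """Share of each group that the model selects."""
    selected, total = {}, {}
    for a in applicants:
        g = group_of(a)
        total[g] = total.get(g, 0) + 1
        selected[g] = selected.get(g, 0) + int(model(a))
    return {g: selected[g] / total[g] for g in total}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


def counterfactual_flips(applicants, model, attribute, new_value):
    """Edge-case test: applicants whose decision changes when only one attribute changes."""
    return [a for a in applicants if model(a) != model({**a, attribute: new_value})]


applicants = [
    {"age": 30, "years_experience": 5},
    {"age": 42, "years_experience": 2},
    {"age": 35, "years_experience": 8},
    {"age": 58, "years_experience": 10},
    {"age": 61, "years_experience": 4},
    {"age": 56, "years_experience": 1},
]

rates = selection_rates(applicants, screen, age_band)
ratio = disparate_impact_ratio(rates)
print(rates, f"ratio={ratio:.2f}", "FLAG: below four-fifths" if ratio < 0.8 else "ok")
print("decisions that flip when age is set to 35:",
      len(counterfactual_flips(applicants, screen, "age", 35)))
```

The two checks complement each other: the ratio catches group-level skew even when no single feature looks suspicious, while the counterfactual test pinpoints individual decisions that hinge on a protected attribute.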

Data Privacy and Third-Party Risks in LLM Deployment

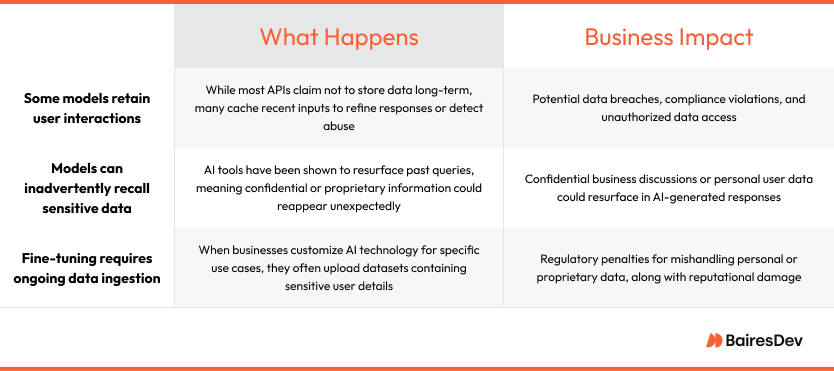

Beyond processing data, AI systems and LLMs can store user interactions, recall confidential details, and sometimes incorporate sensitive inputs into future outputs in unpredictable ways.

Consequently, organizations integrating third-party AI tools, including private companies, public corporations, government agencies, and nonprofits, often lose visibility over how their data is handled. And because of this, compliance, security, liability, and risk management become harder to oversee.

How LLMs Handle User Data

LLMs process text by identifying patterns from vast datasets, including books, articles, online forums, websites, and even code. However, what often gets overlooked is how these AI models handle user inputs after deployment, especially when it comes to data protection and retention.

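Depending on the provider’s terms, prompts and outputs may be logged, retained, or even used to train future models. This means that even if your company isn’t directly storing user inputs, the AI provider might be. And without a clear understanding of how data flows through the system, businesses risk privacy violations that they won’t detect until it’s too late.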

Photo editing app Lensa is a case in point. Users uploaded photos expecting stylized portraits, only to learn that the app had allegedly stored and used their images for further model training without explicit consent.

That case wasn’t an isolated incident, however. Many other AI models have scraped personal data from the internet, pulled private conversations into responses, and even regurgitated sensitive corporate documents.

Third-Party AI Risks: You Don’t Own Your AI

Most businesses don’t build AI from scratch but plug into third-party platforms like OpenAI, Google Cloud, or Amazon Bedrock. That makes sense from an operational standpoint, but legally, it’s a gray area.

Consider the following scenarios:

- If ChatGPT exposes confidential customer conversations, is OpenAI responsible, or is it your liability?

- If an AI-powered chatbot violates GDPR by storing user inputs without consent, who gets fined—the business using it or the generative AI vendor?

- If a finance firm integrates a third-party AI to automate credit approvals, and regulators flag discrimination in the decisions, who’s accountable?

Peloton, for example, faced a class-action lawsuit alleging that Drift, the third-party AI chatbot on its website, intercepted and recorded customer conversations without consent to improve Drift’s own services, in violation of wiretapping laws.

Best Practices for AI Data Security

If you’re a business leader looking to protect your organization, it’s all about making smart choices before data becomes a liability. You can’t assume AI vendors will handle compliance for you. Under laws like GDPR, the business deploying the AI is usually the data controller, so much of the legal burden for data mishandling falls on it rather than on the provider building the model.

Here’s how to minimize exposure:

- Clarify data ownership in contracts – Confirm vendor agreements explicitly state who owns the data generated and processed by the AI tools, how long the data is stored, and whether user interactions are kept and used for training. Also, establish breach protocols that clearly define procedures and responsibilities if a data breach occurs.

- Minimize data collection – Only store what’s absolutely necessary for AI functionality. The less data retained, the lower the risk of compliance violations or security breaches.

- Vet AI vendors for compliance – Choose providers with clear data retention policies, documented GDPR and CCPA compliance, independent attestations such as SOC 2 reports, and documented risk management protocols.

- Implement AI governance policies – Establish internal guidelines on how AI is used, who has access, and how to handle compliance risks before regulators ask questions.

- Train staff on AI data risks – Employees should understand what data AI processes, where it goes, and how to flag security concerns because, ultimately, many breaches happen due to human error, not just technical flaws.

- Monitor AI outputs regularly – Businesses remain liable even when AI decisions are automated, so conduct routine audits to confirm outputs meet legal and ethical standards.
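Data minimization can be enforced in code before anything leaves your systems. The sketch below assumes a hypothetical support-ticket record that is about to be sent to a third-party LLM API (the call itself is not shown). It keeps only an allow-list of fields and masks e-mail addresses and phone-number-like strings with regular expressions. Regex redaction is a coarse first line of defense, not a complete PII solution: names, street addresses, and free-form identifiers will slip through, and some non-phone digit runs will be masked.

```python
import re

# Hypothetical allow-list: the only fields the AI feature actually needs.
ALLOWED_FIELDS = {"ticket_id", "product", "message"}

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Rough pattern for phone numbers such as 555-123-4567 or +1 (555) 123 4567.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask e-mail addresses first, then phone-like digit runs."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def minimize(record: dict) -> dict:
    """Drop fields not on the allow-list and redact PII in free-text values."""
    return {k: redact(v) if isinstance(v, str) else v
            for k, v in record.items() if k in ALLOWED_FIELDS}


ticket = {
    "ticket_id": 1042,
    "customer_name": "Jane Doe",
    "customer_email": "jane.doe@example.com",
    "product": "Router X",
    "message": "Call me at 555-123-4567 or email jane.doe@example.com",
}
print(minimize(ticket))
```

An allow-list is deliberately stricter than a block-list: a new field added upstream stays private by default until someone decides the AI feature needs it.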

The Cost of AI Regulation Compliance

Businesses treating AI compliance as an afterthought are in for a reality check and can potentially get hit hard.

Whether it’s data privacy laws, AI risk classifications, bias regulations, or transparency requirements, you’re required to navigate increasingly strict legal and ethical guidelines.

But how much will it cost to do it right? And what happens if you don’t?

The Expanding AI Regulatory Landscape

AI laws are already here, with regulators tightening the rules on how AI models process data, make decisions, interact with users, and manage security.

Here are some of the biggest frameworks shaping AI compliance worldwide:

| AI Regulation | Focus Area | Enforcement & Consequences |
| --- | --- | --- |
| EU AI Act | Risk-Based Classification | Higher-risk AI faces stricter rules; fines for non-compliance |
| GDPR & CCPA | Data Privacy & AI Usage | Regulate how AI systems collect, store, and process personal data |
| Blueprint for an AI Bill of Rights (US) | Ethical Considerations | Non-binding guidance that may shape future AI laws |
| UK Online Safety Act | Content Moderation & Safety | Requires online platforms to protect users, especially children, from illegal and harmful content |
| China’s Generative AI Regulations | Government-Controlled AI | Requires AI to align with government policies, with strict training data controls |
| Social Principles of Human-Centric AI (Japan) | Ethical AI Guidance | Provides ethical principles focusing on human dignity, inclusion, and sustainability |
| Council of Europe Framework Convention on AI | Global AI Governance | First legally binding international AI treaty, opened for signature in 2024; ratification is still under way |

What AI Compliance Really Costs—And What Happens If You Ignore It

Some companies treat AI compliance like a box to check until they get hit with fines, lawsuits, forced product rollbacks, or public backlash. Getting it right does take real cost and effort, but it’s a far better outcome than dealing with the consequences of cutting corners.

The Cost of Doing AI Compliance Right

Here’s what it takes to build a strong AI compliance framework:

- Hiring AI ethics & compliance teams – This isn’t a one-person job. A strong AI governance framework requires a multidisciplinary team that brings together:

  - AI ethics specialists – Ensure fairness, bias mitigation, transparency, and responsible AI practices.

  - Legal advisors – Navigate evolving regulations like the EU AI Act, GDPR, and U.S. AI guidelines.

  - Risk managers – Identify compliance vulnerabilities and design controls to prevent regulatory breaches.

  - AI auditors & data privacy officers – Oversee explainability, data security, and algorithmic transparency.

- Conducting third-party AI audits – It’s a good idea to bring in outside experts for bias assessments, fairness checks, explainability testing, and security reviews, either annually or whenever the model gets updated.

- Implementing privacy-first AI models & secure infrastructure – This means designing AI systems that process data without exposing, storing, or mishandling sensitive information. It would involve:

| Privacy-First AI Strategy | Description |
| --- | --- |
| Federated Learning | Models train locally on user devices or secure servers instead of centralizing data, reducing breach risks |
| Differential Privacy | Adds calibrated statistical noise to queries or training so results don’t reveal whether any individual’s data was included |
| Zero-Retention Policies | Processes inputs in real time without logging or storing interactions, preventing leaks |
| End-to-End Encryption | Encrypts AI interactions and outputs so intercepted data remains unreadable |
| Access Controls & Role-Based Permissions | Restricts who can modify, train, or extract insights from AI, minimizing unauthorized access |
| Secure Model Hosting & Edge AI | Deploys models on on-premise servers or edge devices instead of cloud services that may retain user interactions |
| Compliance with Global Privacy Laws | Aligns AI infrastructure with GDPR, CCPA, and AI regulations to prevent compliance violations |

- Training employees on responsible AI use – Run corporate training programs on ethical AI practices, since responsible AI is a company-wide responsibility. Programs should include hands-on exercises, real-world case studies, and AI governance frameworks.
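To illustrate the differential privacy entry, here is a minimal sketch of the Laplace mechanism applied to a counting query. A count changes by at most 1 when one person is added or removed (sensitivity 1), so adding Laplace noise with scale 1/ε makes that single query ε-differentially private. The `epsilon` value and the usage records are assumptions chosen for illustration; production systems should rely on a vetted DP library, since naive floating-point noise has known weaknesses, and should track the privacy budget spent across repeated queries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: the difference of two i.i.d. exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """epsilon-DP count: true count plus Laplace noise with scale 1/epsilon.

    Sensitivity is 1 because adding or removing one record changes the
    count by at most 1.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical usage records: did each user enable a given feature?
records = [{"user": i, "enabled": i % 3 == 0} for i in range(300)]
rng = random.Random(7)  # fixed seed only so the demo is repeatable
noisy = private_count(records, lambda r: r["enabled"], epsilon=0.5, rng=rng)
print(round(noisy, 1))  # typically within a few units of the true count of 100
```

Smaller ε means more noise and stronger privacy; the right trade-off is a policy decision as much as an engineering one.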

The Cost of AI Compliance Failures

AI compliance failures come at a steep price and affect businesses through:

- Legal fines

GDPR fines for AI-related violations have hit $100M+ in some cases. The EU AI Act raises the ceiling further: penalties for the most serious violations can reach €35 million or 7% of a company’s worldwide annual turnover, whichever is higher.

In 2023, the Equal Employment Opportunity Commission (EEOC) settled its first AI discrimination lawsuit against iTutorGroup. The company was accused of programming its hiring software to automatically reject older applicants, resulting in a $365,000 settlement.

- Lawsuits

AI-driven decisions have repeatedly faced legal challenges for unintentional but systemic discrimination.

In 2023, a class-action lawsuit was filed against HR software provider Workday, alleging that its AI-powered hiring tools discriminated against applicants based on race, age, and disability. The plaintiff, Derek Mobley, an African American man over 40 with anxiety and depression, claimed he was rejected from more than 100 positions at companies using Workday’s platform despite having the necessary qualifications.

In 2024, the U.S. District Court for the Northern District of California allowed the case to proceed, finding that Workday could potentially be held liable as an agent of the employers under federal anti-discrimination laws.

- Loss of consumer trust and revenue drops

When an AI system fails publicly, customers walk.

Tenant screening company SafeRent Solutions faced a lawsuit alleging that its AI algorithm discriminated against minority applicants by assigning them lower rental suitability scores. The case ended in a $2.3 million settlement and a five-year suspension of the scoring system for applicants using housing vouchers, and it undermined trust in the company’s services.

How to Stay Ahead of AI Compliance

The smartest businesses weave compliance into their AI strategy from day one. Here’s how to integrate it, stay ahead of regulations, and keep growth on track.

Step 1: Build Compliance into AI Development from the Start

Many organizations build first and worry about compliance later. However, that’s a costly mistake. Forward-thinking industry leaders embed AI governance into the engineering workflow. This way, compliance isn’t an afterthought but a core part of development.

To do this effectively:

- AI teams should work with legal, compliance, and risk management experts early, not just when something goes wrong.

- Compliance reviews should be part of every AI model iteration, with bias checks, explainability, fairness metrics, and transparency standards built in from the beginning.

- Governance needs to be cross-functional. This would bring together engineers, legal teams, risk management experts, and business leaders to align compliance with broader company objectives.
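One way to make compliance "part of every AI model iteration" is a release gate in the deployment pipeline that blocks a model version unless the required review artifacts exist and its metrics clear agreed thresholds. The sketch below assumes a hypothetical model-card record; the artifact names and threshold values are placeholders that your legal and risk teams would set, not regulatory requirements.

```python
from dataclasses import dataclass, field

# Hypothetical sign-offs each release needs; agree the real list with legal/risk.
REQUIRED_ARTIFACTS = {"bias_audit", "explainability_report", "privacy_review"}
# Hypothetical metric floors: metric name -> minimum acceptable value.
THRESHOLDS = {"disparate_impact_ratio": 0.8, "holdout_accuracy": 0.85}


@dataclass
class ModelRelease:
    version: str
    artifacts: set = field(default_factory=set)
    metrics: dict = field(default_factory=dict)


def gate(release: ModelRelease) -> list:
    """Return a list of blocking problems; an empty list means the release may ship."""
    problems = [f"missing artifact: {a}"
                for a in sorted(REQUIRED_ARTIFACTS - release.artifacts)]
    for name, minimum in THRESHOLDS.items():
        value = release.metrics.get(name)
        if value is None:
            problems.append(f"metric not reported: {name}")
        elif value < minimum:
            problems.append(f"{name}={value} below {minimum}")
    return problems


candidate = ModelRelease(
    version="2.3.0",
    artifacts={"bias_audit", "explainability_report"},
    metrics={"disparate_impact_ratio": 0.72, "holdout_accuracy": 0.91},
)
for problem in gate(candidate):
    print("BLOCKED:", problem)
```

In a CI job, a non-empty result would typically fail the build, which puts the compliance conversation before deployment rather than after an incident.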

Step 2: Implement Continuous AI Audits & Risk Monitoring

AI isn’t static; it evolves, and compliance risks shift along with it. A model that passes a fairness test today could start drifting into bias six months from now.

That’s why one-and-done audits don’t cut it. Instead, you’re better off implementing proactive monitoring strategies that help maintain compliance over time, such as:

| Key Action | Why It Matters |
| --- | --- |
| Real-time monitoring | Tracking changes enables early detection of bias and compliance risks |
| Automated compliance tools | Flag issues before regulators or customers do, reducing legal exposure |
| Explainable AI (XAI) | Transparent decision-making helps businesses justify AI outputs and meet fairness standards |
| Ongoing oversight | Continuous evaluation of AI compliance keeps you ahead of emerging risks |
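Real-time monitoring often starts with a drift metric that compares recent production data with the data the model was validated on. The sketch below uses the population stability index (PSI), one common choice swapped in here as an example. The bin edges, the sample scores, and the 0.2 alert level (a widely used rule of thumb, not a regulatory threshold) are all assumptions. In practice you would also compute it per demographic group, because drift that hits one group harder is exactly how a model that once passed a fairness test slides into bias.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges):
    """Fraction of values falling in each bin defined by sorted inner edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, edges, floor=1e-4):
    """Population stability index between a baseline and a recent sample."""
    total = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, floor), max(a, floor)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


EDGES = [0.2, 0.4, 0.6, 0.8]  # hypothetical model-score bins
baseline = [0.1, 0.15, 0.3, 0.35, 0.5, 0.55, 0.65, 0.7, 0.85, 0.9]
this_week = [0.05, 0.1, 0.12, 0.15, 0.25, 0.3, 0.45, 0.5, 0.7, 0.9]

drift = psi(baseline, this_week, EDGES)
print(f"PSI = {drift:.3f}", "ALERT: investigate drift" if drift > 0.2 else "stable")
```

PSI only says that the inputs or scores have moved; whether that movement is harmful still needs a fairness re-audit and human judgment.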

Step 3: Design AI Governance Policies That Scale

As businesses expand AI across multiple functions, governance frameworks that worked at the pilot stage often become inefficient at scale, and new risks emerge. That’s why AI compliance policies should be dynamic, evolving alongside regulatory updates rather than requiring costly overhauls each time new legal requirements appear.

This requires an agile governance structure that integrates compliance checkpoints into AI workflows without disrupting development cycles. Embedding automated compliance mechanisms, such as real-time audit logging, risk assessments, and policy enforcement, reduces reliance on manual oversight and frees internal teams to focus on innovation.
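Real-time audit logging is one of the easier automated mechanisms to sketch. The example below is a minimal illustration rather than a production design: each AI decision is appended to a log in which every entry stores a SHA-256 hash of the previous entry, so editing a past record breaks the chain and becomes detectable. The record fields (`model_version`, `decision`, `reviewer`) are hypothetical; a real system would also need durable, access-controlled storage, and this simple chain does not detect records deleted from the end of the log.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


def append_decision(log: list, **fields) -> dict:
    """Append a decision record chained to the hash of the previous record."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS,
        **fields,
    }
    entry["hash"] = _digest(entry)
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash; False means a record was altered or the chain is broken."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


audit_log = []
append_decision(audit_log, model_version="credit-v4", decision="declined", reviewer=None)
append_decision(audit_log, model_version="credit-v4", decision="approved", reviewer="analyst-17")
print(verify(audit_log))  # True
audit_log[0]["decision"] = "approved"  # simulate tampering with history
print(verify(audit_log))  # False
```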

Governance frameworks must also scale to handle higher data volumes, more complex decision-making processes, and increasingly stringent regulatory requirements, while remaining efficient across distributed teams, applications, jurisdictions, and evolving AI use cases.

Bottom line: Companies that proactively tackle bias and compliance today are better positioned to gain a competitive edge.

The Environmental Impact of AI and Sustainable AI Strategies

Large-scale machine learning models require vast computational resources, draw significant amounts of energy, and generate considerable carbon emissions. So, enterprises scaling AI need to prioritize sustainability instead of treating it like just another regulatory obligation.

Consider OpenAI’s GPT-4. Training this AI model required an estimated 10 gigawatt-hours (GWh) of electricity, roughly the annual electricity use of about a thousand U.S. homes. Globally, data centers, which increasingly host AI workloads, consume an estimated 1% to 1.5% of the world’s electricity, and that share is projected to grow with the rise of generative AI and real-time inference.

But what exactly drives this high energy consumption?

- Training costs – High-performance GPUs (e.g., NVIDIA A100, H100) and TPUs require extensive processing cycles.

- Inference workloads – Unlike traditional software, AI models require computationally intensive inference, which runs continuously across cloud and edge environments.

- Data storage and transfer – ML systems rely on extensive datasets that require petabytes of storage and high-bandwidth networks.

- Cooling – AI workloads generate significant heat, requiring advanced cooling systems to maintain optimal temperatures in large-scale data centers.
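The drivers above combine into a back-of-the-envelope formula: energy ≈ number of accelerators × average power draw per accelerator × hours of training × the data center’s power usage effectiveness (PUE), which captures cooling and other facility overhead. The sketch below applies it with entirely hypothetical inputs (not figures for GPT-4 or any real model) and converts results into household-years using an assumed average of about 10,500 kWh per U.S. home per year. It ignores CPUs, networking, and storage inside the servers, so it understates the total.

```python
def training_energy_kwh(num_gpus: int, avg_power_kw: float, hours: float, pue: float) -> float:
    """Accelerator energy (GPUs x power x time) scaled by PUE for cooling and overhead."""
    return num_gpus * avg_power_kw * hours * pue


HOME_KWH_PER_YEAR = 10_500  # assumed average annual U.S. household electricity use

# Hypothetical run: 1,000 GPUs averaging 0.5 kW each for 30 days at PUE 1.2.
energy = training_energy_kwh(num_gpus=1_000, avg_power_kw=0.5, hours=30 * 24, pue=1.2)
print(f"{energy / 1e6:.3f} GWh ~ {energy / HOME_KWH_PER_YEAR:,.0f} home-years")

# The 10 GWh estimate quoted above, converted the same way:
print(f"{10e6 / HOME_KWH_PER_YEAR:,.0f} home-years")  # roughly 950
```

Each factor maps to a lever in the list that follows: smaller or distilled models reduce accelerator-hours, efficient facilities lower PUE, and cleaner grids cut the emissions attached to every kilowatt-hour.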

How Businesses Can Reduce AI’s Environmental Footprint

Consider the following steps to reduce AI’s energy impact and integrate sustainability into ethical AI:

- Use energy-efficient AI models – Implement model distillation and optimized architectures like Mixture of Experts (MoE) to cut power usage.

- Invest in carbon-neutral cloud computing – Migrate workloads to providers using renewable energy and optimize compute allocation.

- Adopt federated learning – Reduce cloud energy consumption by enabling on-device training across distributed networks.

- Leverage edge AI processing – Minimize data transfer and reliance on energy-intensive data centers by processing AI locally.

- Optimize data storage and transfer – Reduce redundant storage and improve network efficiency to lower energy draw.
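Model distillation trains a small "student" model to mimic a large "teacher", so the cheaper model can handle inference. The core of the standard recipe (the soft-target loss from Hinton et al.) is a KL divergence between temperature-softened teacher and student output distributions, scaled by T². The pure-Python sketch below shows only that loss term for a single example; the logits and temperature are made-up values, and a real training loop would use a framework such as PyTorch or JAX and usually mix in the ordinary hard-label loss.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """T^2 * KL(teacher_T || student_T): the soft-target term of distillation."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


teacher = [4.0, 1.5, 0.2]  # hypothetical logits from a large model
student = [2.5, 1.8, 0.6]  # hypothetical logits from a small model
print(round(distillation_loss(student, teacher), 4))
```

Minimizing this term pushes the student toward the teacher’s full output distribution, not just its top answer, which is why a much smaller model can retain much of the larger one’s behavior at a fraction of the inference cost.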

Proactive AI Governance

Avoiding legal trouble is only part of the equation. Real success comes from creating AI that’s responsible and sustainable. However, achieving strong AI governance takes clear, structured policies and transparent review boards, along with dedicated AI ethics teams and oversight processes that go beyond surface-level checks.

Don’t wait for regulations to dictate your AI strategy. The most competitive businesses stay ahead by designing governance frameworks that scale while monitoring legal shifts globally and embedding compliance from day one.