Convolutional neural networks (CNNs) are artificial neural networks loosely inspired by how the visual cortex processes what we see. They excel at finding patterns in pixels, which makes them central to image recognition and computer vision. They’re behind many things we use every day:

- Face recognition: CNNs unlock devices by recognizing your face.

- Self-driving cars: They detect pedestrians and road signs for safer driving.

- Medical imaging: They detect abnormalities in scans for better diagnosis.

In this post, we’ll go over the architecture of CNNs and how they work. You’ll see how convolutional and pooling layers process images, along with the real-world applications and future of CNNs.

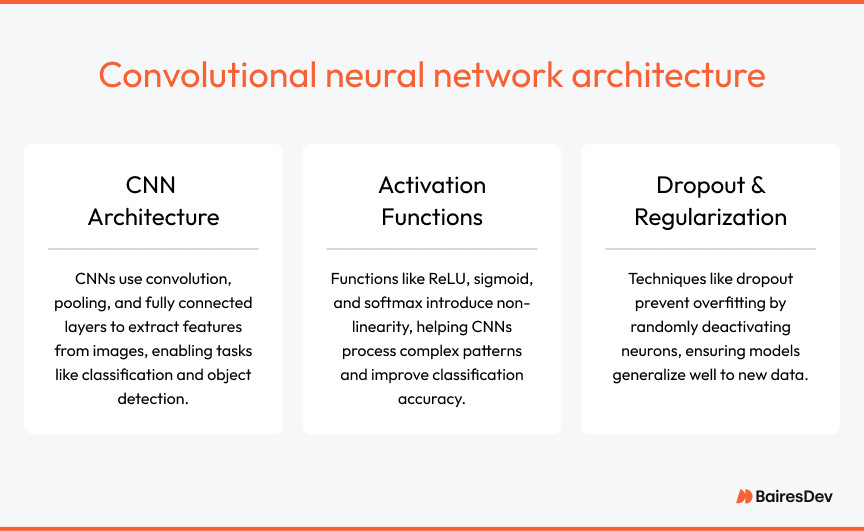

Convolutional neural network architecture

CNNs learn useful features directly from raw data. They stack several types of layers: convolutional layers extract features, pooling layers downsample them, and fully connected layers map them to outputs. Working together, these layers process edges, shapes and other visual cues to perform image recognition and classification.

CNN Layers

CNNs process images layer by layer. Each layer builds on the feature map from the previous layer to refine the data, find patterns and create new feature maps.

Convolutional layer

Convolutional layers create activation maps by sliding small filters (kernels) across an image to find patterns like edges and textures. An early convolutional layer might find basic features like edges, while a later layer might find shapes and textures. This layer-by-layer approach allows CNNs to process high-resolution data for tasks like object detection and medical imaging.
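To make the sliding-filter idea concrete, here is a minimal sketch of one filter applied to a single-channel (grayscale) image stored as nested lists, assuming stride 1 by default and no padding. Like most deep learning libraries, it computes cross-correlation (the kernel is not flipped). The `conv2d` helper and the example kernel are illustrative, not any specific library’s API.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix, stride: int = 1) -> Matrix:
    """Slide `kernel` over `image` (no padding) and return the feature map.

    Output size per axis is (input_size - kernel_size) // stride + 1.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oh = (ih - kh) // stride + 1
    ow = (iw - kw) // stride + 1
    out = [[0.0] * ow for _ in range(oh)]
    for i in range(oh):
        for j in range(ow):
            # Multiply the kernel element-wise with the patch under it and sum.
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i * stride + u][j * stride + v] * kernel[u][v]
            out[i][j] = acc
    return out


# A 4x5 image with a vertical edge: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Sobel-style vertical-edge kernel: responds to left-to-right intensity increases.
kernel = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]
feature_map = conv2d(image, kernel)
print(feature_map)  # [[4.0, 4.0, 0.0], [4.0, 4.0, 0.0]]
```

The feature map is large where the window straddles the edge and zero over the uniformly bright region, which is exactly the "activation" the layer passes on.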

Pooling layer

Pooling layers work with convolutional layers to keep high-resolution input images manageable. Pooling operates independently on each feature map (channel), reducing its spatial dimensions while retaining the important features. Max pooling selects the highest value in each region, which preserves strong responses such as edges. Average pooling calculates the mean of each region, which smooths patterns.
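A minimal sketch of 2×2 max and average pooling on one feature map, assuming non-overlapping windows (stride equal to the window size), a common default. In a real network the same operation runs on every channel separately. `pool2d` is a hypothetical helper name.

```python
from typing import Callable, List

Matrix = List[List[float]]


def pool2d(fmap: Matrix, size: int, reduce: Callable[[List[float]], float]) -> Matrix:
    """Non-overlapping size x size pooling; leftover rows/columns are dropped."""
    oh, ow = len(fmap) // size, len(fmap[0]) // size
    out = []
    for i in range(oh):
        row = []
        for j in range(ow):
            # Gather every value in this size x size window, then reduce it to one number.
            window = [
                fmap[i * size + u][j * size + v]
                for u in range(size)
                for v in range(size)
            ]
            row.append(reduce(window))
        out.append(row)
    return out


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


fmap = [
    [1, 3, 2, 0],
    [5, 6, 1, 2],
    [0, 2, 9, 4],
    [1, 1, 3, 8],
]
max_pooled = pool2d(fmap, 2, max)   # keeps the strongest response per window
avg_pooled = pool2d(fmap, 2, mean)  # averages each window
print(max_pooled)  # [[6, 2], [2, 9]]
print(avg_pooled)  # [[3.75, 1.25], [1.0, 6.0]]
```

Both variants shrink the 4×4 map to 2×2; max pooling keeps the peak values (6 and 9), while average pooling blends them with their neighbors.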

Fully connected layer

A fully connected layer connects every neuron to every activation in the previous layer and maps the extracted features to classes. It combines the feature maps into outputs for tasks like image classification or object labeling. An activation function like softmax then converts these output scores into class probabilities.
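A minimal sketch of the classification head, assuming the final feature maps are flattened into one vector and fed to a single fully connected layer. The weights and bias are made up for illustration. The layer outputs one raw score (logit) per class; an activation such as softmax would then turn those scores into probabilities.

```python
from typing import List


def flatten(fmaps: List[List[List[float]]]) -> List[float]:
    """Flatten channel x height x width feature maps into one feature vector."""
    return [x for fmap in fmaps for row in fmap for x in row]


def dense(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Fully connected layer: each output is a weighted sum of *all* inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(w_row, x)) + b for w_row, b in zip(weights, bias)]


# Two 2x2 feature maps (e.g. after pooling) give 8 features.
fmaps = [
    [[6, 2], [2, 9]],
    [[0, 1], [3, 0]],
]
x = flatten(fmaps)  # [6, 2, 2, 9, 0, 1, 3, 0]

# Hypothetical weights for 2 classes: one row of 8 weights per class.
weights = [
    [0.5, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0],  # class 0 looks at the first map
    [0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 0.0],  # class 1 looks at the second map
]
bias = [0.0, 0.5]
logits = dense(x, weights, bias)
predicted = max(range(len(logits)), key=lambda k: logits[k])
print(logits, predicted)  # [7.5, 4.5] 0
```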

Activation functions in CNNs

Activation functions like ReLU, sigmoid, and softmax help CNNs process complex patterns in the input data. Each function does something different:

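- ReLU (Rectified Linear Unit): Passes positive values through unchanged and zeroes out negative values, so only activated features propagate.

- Sigmoid: Squashes values into the 0–1 range, which suits probabilities for binary or multi-label outputs.

- Softmax: Converts a vector of scores into probabilities that sum to 1, for multi-class classification.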

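Activation functions such as ReLU are usually applied after each convolutional layer to introduce non-linearity. Without them, stacked convolutional layers would collapse into a single linear transformation; with them, the model can learn complex relationships in the feature map.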
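The three functions can be sketched in a few lines of plain Python. This is a minimal illustration, not a framework implementation; subtracting the maximum inside softmax is a standard numerical-stability trick that does not change the result.

```python
import math
from typing import List


def relu(x: float) -> float:
    """Pass positive values through unchanged; zero out negatives."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(scores: List[float]) -> List[float]:
    """Turn a vector of scores into probabilities that sum to 1."""
    m = max(scores)  # shift by the max to avoid overflow in exp
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# ReLU is applied element-wise to a feature map after a convolution.
fmap = [[-2.0, 1.5], [0.0, -0.5]]
activated = [[relu(v) for v in row] for row in fmap]
print(activated)            # [[0.0, 1.5], [0.0, 0.0]]
print(sigmoid(0.0))         # 0.5
print(softmax([1.0, 1.0]))  # [0.5, 0.5]
```

ReLU and sigmoid act on each value independently, whereas softmax looks at the whole score vector at once, which is why it sits at the output layer.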

Dropout and regularization

Overfitting happens when a model learns the training data too well, including its noise, which reduces its ability to generalize to new data. Dropout reduces overfitting by randomly setting a fraction of neuron outputs to zero at each training step. Because any neuron might be missing, the network cannot rely too heavily on a single neuron or feature and is pushed to learn more robust representations. Dropout can be applied to the input layer or hidden layers and is switched off at inference time. In CNNs it is commonly placed in the fully connected layers; in convolutional layers, variants such as spatial dropout drop entire feature maps instead of individual activations.
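A minimal sketch of dropout on a vector of activations, assuming the common "inverted dropout" formulation: survivors are scaled by 1 / (1 − p) during training so that nothing needs rescaling at inference. Frameworks provide this as a layer (for example `torch.nn.Dropout` in PyTorch or `tf.keras.layers.Dropout` in Keras); the function below is an illustrative stand-in, not either library’s code.

```python
import random
from typing import List


def dropout(activations: List[float], p: float, training: bool, rng: random.Random) -> List[float]:
    """Inverted dropout: zero each activation with probability p during training."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)  # dropout is a no-op at inference time
    keep = 1.0 - p
    # Survivors are scaled up so the expected value of each activation is unchanged.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed so the example is reproducible
acts = [1.0] * 10
train_out = dropout(acts, p=0.5, training=True, rng=rng)
eval_out = dropout(acts, p=0.5, training=False, rng=rng)
print(train_out)  # each entry is either 0.0 or 2.0
print(eval_out)   # identical to acts
```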

Regularization techniques like dropout are important when training large models, especially when labeled data is limited. They help the model balance fitting the patterns it has learned with performing well on unseen data, which keeps CNNs efficient and accurate in real-world applications.


How CNNs work: step by step

CNNs process the input data in several stages to turn raw images into outputs. From image preprocessing to feature extraction and classification, every step helps the model recognize objects.

Image preprocessing

First, the source image is prepared so the network receives consistent data. This involves:

- Normalizing: Scaling the pixel values to a range like 0–1 or -1 to 1 so all inputs share a consistent scale, which helps training converge faster and more stably.

- Resizing: Matching the spatial dimensions to what the input layer expects. For example, VGG16 is typically used with 224×224 inputs.

- Augmenting: Applying random transformations like flips, rotations and brightness shifts to simulate real-world variation and help the model generalize better.
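The three steps can be sketched on a tiny grayscale image stored as nested lists. This is a minimal illustration assuming 8-bit pixel values and nearest-neighbour resizing; real pipelines usually work on multi-channel arrays, often use bilinear interpolation, and rely on library functions such as those in TensorFlow or PyTorch. All helper names here are hypothetical.

```python
import random
from typing import List

Image = List[List[float]]


def normalize(img: List[List[int]], lo: float = 0.0, hi: float = 1.0) -> Image:
    """Map 8-bit pixel values (0-255) linearly into [lo, hi], e.g. [0, 1] or [-1, 1]."""
    return [[lo + (p / 255.0) * (hi - lo) for p in row] for row in img]


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize: each output pixel copies the closest input pixel."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
        for i in range(out_h)
    ]


def random_hflip(img: Image, rng: random.Random, p: float = 0.5) -> Image:
    """Augmentation: mirror the image left-to-right with probability p."""
    return [row[::-1] for row in img] if rng.random() < p else [row[:] for row in img]


raw = [[0, 255], [51, 102]]       # a tiny 2x2 grayscale image
x = normalize(raw)                 # values now in [0, 1]
x_sym = normalize(raw, -1.0, 1.0)  # values now in [-1, 1]
big = resize_nearest(x, 4, 4)      # e.g. to match an input layer's expected size
aug = random_hflip(big, random.Random(42))
```

Augmentation is applied randomly on each pass over the data during training, so the model sees slightly different versions of the same image.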

Tools like TensorFlow and PyTorch make preprocessing easier with built-in functions for different use cases.

| Framework | Preprocessing Features | Use Cases |
| --- | --- | --- |
| TensorFlow | Includes tf.image for normalization, resizing, and augmentation. | Good for large datasets in research or production. |
| PyTorch | Offers torchvision.transforms for image augmentation and resizing. | Adds flexibility to custom models and experiments. |
| Keras | Built-in preprocessing layers for scaling, resizing, and augmenting images. | Simplifies the workflow for prototyping models. |
| OpenCV | Extensive image manipulation tools for resizing, cropping, and filtering. | Useful for real-time applications like object tracking. |
| FastAI | High-level wrappers for data augmentation and preprocessing. | Speeds up preprocessing for deep learning tasks. |

Feature extraction

Filters in the convolutional layer scan the input volume to find patterns. They extract features at every step to build the feature map. An early convolution layer might detect basic features like edges. Deeper layers capture more complex relationships.

For example:

- Early layers detect edges or corners.

- Intermediate layers combine these into shapes or textures.

- Deeper layers interpret more complex features like a car or a tree.

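In practice, a 3×3 edge-detection filter multiplies each 9-pixel patch element-wise by its weights and sums the results. The sum is large where pixel intensity changes sharply, as at a boundary, and close to zero in flat regions, so sliding the filter across the image produces a feature map that highlights edges for the next stage. A pooling layer then keeps the strongest responses and discards the rest, which makes the representation more tolerant of small shifts and helps the model generalize to new images.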
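One way to see why deeper layers capture larger structures is to track the receptive field \(r_n\): the size of the input region that a single value in layer \(n\) depends on. Assuming stacked \(k \times k\) convolutions with stride 1 and no pooling (strides and pooling make it grow faster), each layer adds \(k - 1\) pixels:

```latex
\begin{aligned}
r_0 &= 1, \qquad r_n = r_{n-1} + (k - 1) \\
k = 3:\quad r_1 &= 3, \quad r_2 = 5, \quad r_3 = 7
\end{aligned}
```

So a value in the third 3×3 layer already depends on a 7×7 patch of the original image, even though each filter only looks at a 3×3 window of its own input. That growing view is what lets later layers respond to shapes and whole objects.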

Classification

The fully connected layers map the final feature maps to one score per class, and the output layer turns those scores into probabilities. This ties the learned patterns to the labels.

For example:

- A CNN trained on wildlife might look at an image and assign 95% probability to ‘tiger’ and 5% to ‘lion.’

- In autonomous vehicles, a CNN might detect pedestrians, road signs or obstacles.

Because each neuron in the fully connected layer is connected to every feature from the previous layer, the prediction can draw on all the learned features at once. An activation function like softmax then normalizes the output scores into probabilities that sum to 1, which makes the predictions easy to interpret and compare. This is important for real-world applications like facial recognition or identifying tumors in medical imaging.
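To make the wildlife example concrete, softmax maps each output-layer score (logit) \(z_i\) to a probability \(p_i\). The logit values below are hypothetical, chosen so the result matches the 95%/5% split:

```latex
p_i = \frac{e^{z_i}}{\sum_j e^{z_j}}, \qquad
z_{\text{tiger}} = 2.944,\; z_{\text{lion}} = 0
\;\Rightarrow\;
p_{\text{tiger}} = \frac{e^{2.944}}{e^{2.944} + e^{0}} \approx \frac{18.99}{19.99} \approx 0.95,
\qquad p_{\text{lion}} \approx 0.05
```

Only the difference between the logits matters: any pair with \(z_{\text{tiger}} - z_{\text{lion}} \approx \ln 19 \approx 2.944\) produces the same split.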

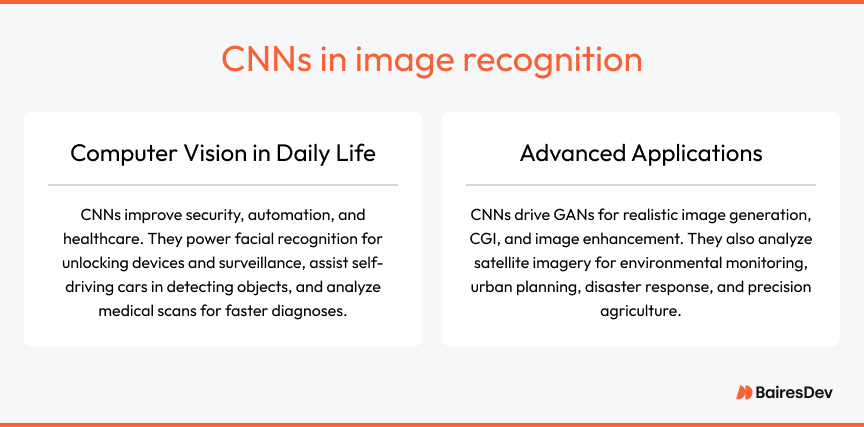

Convolutional neural networks in image recognition

CNNs handle many image recognition tasks behind the tech we use every day. Here are just a few examples:

Computer vision in daily life

CNNs unlock devices, power live surveillance and secure access to facilities, making everyday experiences safer and more convenient. Let’s dive into those.

Facial recognition

CNN-based facial recognition has become a standard part of modern security and convenience.

- Unlocking devices: Instantly maps unique facial patterns for a password-free experience. Advanced CNNs can adapt to changes like lighting conditions, glasses, masks or facial hair.

- Surveillance systems: Tracks and identifies people in real time, even in crowded environments. High-precision CNNs can process multiple input images at the same time, so they stay reliable in dynamic conditions.

- Access control: Secures facilities by validating identities against a database of approved users. These systems integrate with smart locks and badge readers to provide multi-layered security.

Autonomous vehicles

Self-driving cars use CNNs to interpret the environment.

- Pedestrian detection: Identifies and tracks movement in real time to prevent accidents. Advanced CNNs can analyze an input image to differentiate between pedestrians, cyclists and static objects, even in poor visibility conditions like rain or fog.

- Road sign recognition: Identifies speed limits, stop signs and warnings so the vehicle can respond to traffic rules. Robust CNNs can process partially blocked or damaged signs, helping vehicles adapt quickly to changing conditions.

- Vehicle detection: CNNs detect surrounding vehicles so the car can estimate their speed and angle, avoid collisions and change lanes smoothly in heavy traffic. Self-driving cars use multiple convolution layers to spot patterns like lane markings and road boundaries.

Medical imaging

In healthcare, CNNs can analyze complex scans like X-rays, CT scans and MRIs. They can detect diseases early, find subtle patterns and create insights that improve diagnosis and guide personalized treatment.

| Imaging type | Applications | Impact |
| --- | --- | --- |
| X-rays | Fractures and infections | Faster diagnosis |
| CT scans | Tumors and structural abnormalities | Informs treatment decisions |
| MRIs | Brain and organ activity | Guides interventions |

The pooling layer preserves features like tumor boundaries or fractures while summarizing the data to support better diagnosis.

Advanced applications

The applications of CNNs go beyond basic image classification and recognition. They power drone exploration, photorealistic image generation, satellite image analysis and the tracking of deforestation, glacier retreat and other environmental changes.

Generative adversarial networks (GANs)

GANs use CNNs to generate photorealistic images and videos. They pair two neural networks: a generator that creates visuals and a discriminator that judges whether they look real. This back-and-forth process refines the generator’s output until it closely resembles real-world visuals.
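The back-and-forth between the two networks is usually written as a minimax game. This is the objective from the original GAN paper (Goodfellow et al., 2014); many later variants modify the loss. The discriminator \(D\) tries to maximize it by scoring real images \(x\) close to 1 and generated images \(G(z)\) close to 0, while the generator \(G\), fed random noise \(z\), tries to minimize it:

```latex
\min_G \max_D \; V(D, G) =
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

In image GANs, both \(G\) and \(D\) are typically CNNs: \(D\) is a classifier, and \(G\) uses transposed convolutions to upsample noise into an image.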

Key examples:

- Film and gaming: GANs produce CGI effects like the digital recreation of young Luke Skywalker in The Mandalorian. In gaming they create lifelike characters for open world environments like those in Red Dead Redemption 2.

- Super-resolution tools: GANs can improve low-resolution images by reconstructing details. For example, they can enhance blurry security camera footage to identify faces or license plates. They can also upscale vintage films for modern 4K displays.

- Medical imaging: GANs generate synthetic scans for training, like rare tumor types in CT scans. Hospitals use these datasets to develop CNN models for faster diagnosis tools.

Satellite image analysis

CNNs are used for satellite image analysis, pattern recognition and tracking with high accuracy. Practical applications:

- Environmental monitoring: CNNs track deforestation, like illegal logging in the Amazon rainforest. They monitor glacier retreat, like NASA’s study of ice loss in Greenland.

- Urban planning: CNNs monitor infrastructure growth, such as new road networks in fast-growing cities like Dubai. They analyze population density changes to optimize public transportation in cities like Tokyo.

- Disaster response: They use satellite imagery to assess flood zones during events like Hurricane Harvey. CNNs detect earthquake damage in urban areas to prioritize rescue efforts, like in post-disaster Turkey.

- Precision agriculture: They evaluate crop health by identifying drought-stressed fields in high-resolution input images. This helps farmers in California manage irrigation and optimize yields.

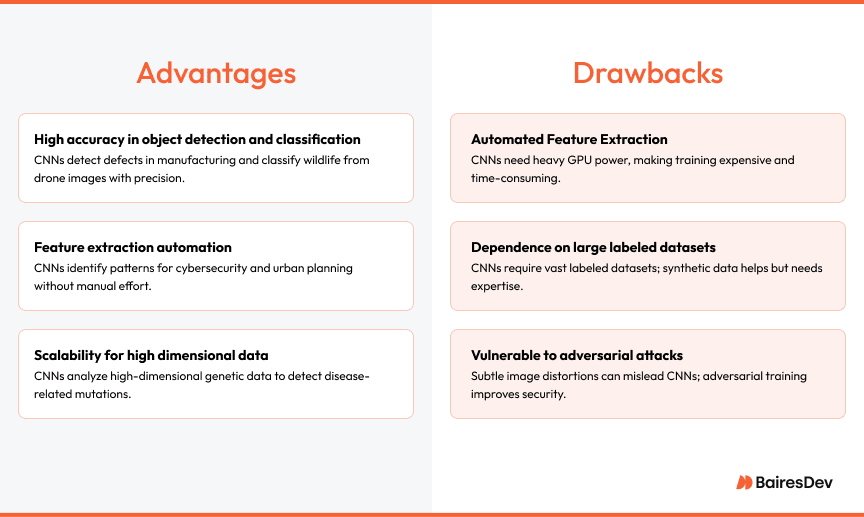

Advantages and limitations of convolutional neural networks

CNNs have changed the way we analyze and interpret image data. Let’s look at their strengths and weaknesses in real scenarios.

Advantages

CNNs balance accuracy and scalability in image recognition tasks.

- High accuracy in object detection and classification: CNNs are used to inspect products on assembly lines, where they spot defects like scratches or misaligned parts with near-perfect precision. In wildlife conservation, they classify species from drone-captured images to track endangered populations.

- Feature extraction automation: Unlike traditional approaches that rely on hand-engineered features, CNNs learn patterns like textures and anomalies on their own. In cybersecurity, they detect malware by looking at suspicious file structures. In urban planning, they analyze building outlines from aerial imagery without manual effort.

- Scalability for high-dimensional data: CNNs can handle datasets with thousands of variables, like those in medical genomics. By analyzing high-dimensional genetic data, they can help identify mutations linked to rare diseases, opening up new insights in personalized medicine.

Drawbacks

Despite these benefits, CNNs have limitations that engineers need to overcome to use them fully.

- Computationally expensive and resource hungry: Training a CNN on high-resolution images requires a lot of time and GPU power. For example, without specialized hardware, training a model like ResNet50 can take days. This pushes organizations to use expensive cloud resources or partner with a deep learning development company that has access to high-performance hardware.

- Dependence on large labeled datasets: CNNs need large labeled datasets like ImageNet to train. This is a challenge when data is limited, as in rare disease detection in healthcare. Synthetic data generation or transfer learning can reduce the amount of labeled data needed, but both require expertise and resources.

- Vulnerable to adversarial attacks: CNNs are susceptible to subtle, malicious changes in the source image that can lead to misclassification. For example, small, deliberately crafted image distortions can confuse self-driving car systems. Researchers are working on defense mechanisms like adversarial training to make CNNs more resilient.
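To illustrate how a subtle change can flip a prediction, here is a toy sketch of the fast gradient sign method (FGSM), a well-known way to craft adversarial examples. To stay self-contained, it attacks a hypothetical linear classifier with made-up weights rather than a real CNN. For a linear score w·x + b, the gradient with respect to the input is simply w, so FGSM nudges every input value by ε in the direction that lowers the score.

```python
from typing import List


def score(w: List[float], x: List[float], b: float) -> float:
    """Linear classifier score; positive means 'class 1' in this toy example."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(x: List[float], grad: List[float], eps: float) -> List[float]:
    """Step each input by eps against the gradient, clamped to the [0, 1] pixel range."""
    return [min(1.0, max(0.0, xi - eps * sign(g))) for xi, g in zip(x, grad)]


# Hypothetical weights and a 'clean' input with pixel values in [0, 1].
w = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0, 1.0, -1.0]
b = 0.0
x = [0.55, 0.5, 0.55, 0.5, 0.55, 0.5, 0.55, 0.5]

clean = score(w, x, b)               # about +0.2 -> class 1
x_adv = fgsm(x, grad=w, eps=0.05)    # for a linear score, d(score)/dx = w
adv = score(w, x_adv, b)             # about -0.2 -> class flips
print(clean > 0, adv > 0)  # True False
```

No pixel moves by more than 0.05, yet the small changes all push the score in the same direction and add up. Adversarial training counters this by including such perturbed examples in the training data.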

Challenges and future of CNNs in image recognition

Convolutional neural networks (CNNs) have changed visual recognition, but they still face challenges such as high energy consumption and bias in datasets.

Current challenges

CNN models consume a lot of energy, especially during training, because of complex computations over large datasets. This carries both operational and environmental costs. Here’s a comparison of training energy for popular deep learning models (GPT-3 and BERT are transformer-based language models, included for comparison):

| Model | Training Energy (kWh) | Carbon Emissions (kg CO2) |
| --- | --- | --- |
| ResNet50 | 260 | 130 |
| GPT-3 (1 epoch) | 1,287 | 650 |
| BERT (fine-tuned) | 300 | 150 |

Biases in training datasets can also skew results. For example, a facial recognition model trained on a dataset that underrepresents certain demographic groups can be less accurate for those groups. To reduce bias, researchers curate datasets more carefully and draw on more diverse data sources.

Future directions

New technologies are solving CNN challenges and expanding their use:

- Edge computing: Processes data closer to the source, reducing latency and energy consumption.

- Hardware acceleration: Custom chips like TPUs make CNNs faster and more power efficient.

- Explainable AI: Makes the model more transparent by showing how decisions are made.

- Lightweight CNNs: Simplifies the architecture with fewer parameters for mobile devices.
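Many lightweight CNNs (MobileNet is a well-known example) cut parameters by replacing standard convolutions with depthwise separable ones: a k×k filter per input channel followed by a 1×1 convolution that mixes channels. A quick sketch of the parameter arithmetic, ignoring biases and assuming a 3×3 layer with 128 input and 128 output channels:

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution mapping c_in channels to c_out (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """One k x k depthwise filter per input channel, then a 1x1 pointwise conv to c_out."""
    return k * k * c_in + c_in * c_out


std = standard_conv_params(3, 128, 128)
sep = depthwise_separable_params(3, 128, 128)
print(std, sep, round(std / sep, 1))  # 147456 17536 8.4
```

For this layer the separable version needs roughly 8× fewer weights, which is the kind of saving that makes CNNs practical on mobile devices.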

As these technologies evolve, they will change how CNNs are used across industries, from healthcare to autonomous systems.

Summary

Convolutional neural networks (CNNs) have changed image recognition by automating tasks like feature extraction and object classification. Their layered structure, from the input layer to the fully connected layers, lets them analyze images with high accuracy.

From facial recognition to medical imaging, CNNs have shown their flexibility and robustness. Their ability to process high-dimensional input is crucial to technologies like self-driving cars, environmental monitoring and AI-driven diagnostics, fields that increasingly demand critical tech skills.

Lightweight architectures and explainable AI are expanding CNN use cases. They will bring more efficient, accessible and interpretable neural networks, with a big impact on medical image analysis and autonomous systems.

FAQs

What are CNNs used for?

Convolutional neural networks (CNNs) are used for image and video related tasks. They are also used in object identification and natural language processing (NLP). Here are some of the use cases:

- Image Recognition: Identifying what an input image contains, such as people, animals or landmarks.

- Video Recognition: Analyzing video frames to detect actions or track objects.

- Object Detection: Identifying specific objects in an image and their boundaries (bounding boxes).

- Natural Language Processing: CNNs can be used for tasks like sentiment analysis, though RNNs and transformers are more common.

These machine learning models can process unstructured data automatically, without manual feature engineering.

How are CNNs different from traditional neural networks?

Convolutional neural networks are designed to process grid structured data, like images where pixels are arranged in a 2D grid. Here’s what makes them special:

- Convolutional Layer: CNNs have a convolutional layer that applies filters (kernels) to extract low-level features like edges, shapes and textures.

- Pooling Layer: Pooling layers reduce the dimensionality of the data, speed up training and improve generalization. They summarize the presence of features in small regions, which is more efficient than processing every pixel.

- Input Layer: The input layer of a convolutional neural network takes in raw image data, often as pixel values, which are then processed by convolutional and pooling layers to extract features.

Traditional fully connected neural networks, by contrast, treat input data as a flat vector. This limits their ability to capture spatial hierarchies in visual data.
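A quick worked example of why the flat-vector approach scales poorly. The layer sizes are illustrative assumptions: a 224×224 RGB input, a fully connected layer with 1,000 hidden units, and a convolutional layer with 64 filters of size 3×3 (biases ignored):

```latex
\begin{aligned}
\text{Fully connected:}\quad & (224 \times 224 \times 3) \times 1000 = 150{,}528 \times 1000 = 150{,}528{,}000 \text{ weights} \\
\text{Convolutional:}\quad & 3 \times 3 \times 3 \times 64 = 1{,}728 \text{ weights}
\end{aligned}
```

The convolutional layer reuses the same small set of weights at every position in the image, so its size does not depend on the image resolution, while the fully connected layer grows with every added pixel.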

What are the datasets used to train CNNs?

CNNs need large labeled datasets for training. Here are some of the most common:

| Dataset | Size | Typical Use |
| --- | --- | --- |
| ImageNet | 14 million images | Image classification |
| CIFAR-10 | 60,000 images | Object recognition |
| MNIST | 70,000 images | Handwritten digit classification |

These datasets are used to benchmark model performance and have been used to advance CNN research.

Can CNNs be used outside of image recognition?

While convolutional neural networks are mostly used for image-related tasks, they can also be applied to other domains:

- Speech Recognition: CNNs can be applied to spectrograms, which transform audio signals into image-like representations for classification.

- Text Analysis: CNNs can classify text by treating word embeddings or sentence structures as grids. For example, they’re used for sentiment analysis and text categorization. The pooling layer summarizes the key features across different word embeddings, helping the model detect patterns in text.
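A minimal sketch of the text case: a filter spanning two consecutive words slides along the sentence (a 1D convolution over the stacked word embeddings), and max-over-time pooling keeps the strongest match anywhere in the sentence. The 2-dimensional embeddings and the filter are made up for illustration; real models learn embeddings with hundreds of dimensions and use many filters.

```python
from typing import List

Vector = List[float]


def conv1d_over_words(embeddings: List[Vector], filt: List[Vector]) -> List[float]:
    """Slide a filter covering len(filt) consecutive words; dot product at each step."""
    width = len(filt)
    scores = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        scores.append(
            sum(f * e for f_row, e_row in zip(filt, window) for f, e in zip(f_row, e_row))
        )
    return scores


def max_over_time(scores: List[float]) -> float:
    """Pooling: keep the strongest response anywhere in the sentence."""
    return max(scores)


# Hypothetical embeddings: dimension 0 ~ "negation", dimension 1 ~ "positivity".
sentence = [
    [0.0, 0.0],  # this
    [0.0, 0.0],  # is
    [1.0, 0.0],  # not
    [0.0, 1.0],  # good
]
# Hypothetical filter that fires on "negation word followed by a positive word".
filt = [[1.0, 0.0], [0.0, 1.0]]
scores = conv1d_over_words(sentence, filt)
feature = max_over_time(scores)
print(scores, feature)  # [0.0, 0.0, 2.0] 2.0
```

The pooled value tells a downstream classifier that the pattern "not good" appeared, regardless of where in the sentence it occurred.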

Though CNNs are best known for image tasks, their architecture can be applied to other types of grid structured data.

What are the drawbacks of CNNs?

CNNs perform well, but they have drawbacks:

- Computational Requirements: As deep learning models, CNNs require a lot of computational resources, especially with high-resolution input images or large datasets. Specialized hardware like GPUs is often needed to train deep networks, and large fully connected layers add many parameters, which increases the computational and memory load.

- Large Data Requirements: CNNs perform best with large labeled datasets. This dependency on data is a problem in fields like healthcare, where labeled datasets are sparse.

- Vulnerable to Adversarial Attacks: Small changes to an input image can fool CNNs and cause them to misclassify objects. Research is ongoing to improve the robustness of CNNs to these types of attacks.

Even so, CNNs remain excellent tools for image and pattern recognition.