Many industries are now tapping into the enormous potential of artificial intelligence. AI has become critical for everything from hospital applications and trend forecasting in digital marketing to industrial supply chain management and data science. At the same time, the world is still learning the full extent of what this technology can do.

Although AI is a truly transformative tool, its development has limitations and drawbacks. One important challenge is the growing electricity use needed to meet escalating demand for computational power. Over time, this problem creates a snowball effect of environmental concerns, such as increased carbon emissions.

As AI grows, researchers and developers must strive to lower AI energy consumption through innovations like more sustainable energy sources, improvements in hardware design, and energy-efficient algorithms.

Historical Perspective

In the early stages of AI, models had simple designs and required minimal energy for computation. As AI has grown, and continues to grow, into a technological behemoth, its energy needs have grown in parallel.

Moore’s Law, an empirical observation rather than a law of physics, holds that the number of transistors on a microchip doubles roughly every two years, which has historically driven down the cost of each unit of computation. This trend helps explain how ever more powerful, and more energy-hungry, AI tools and models became feasible.
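To make the doubling arithmetic concrete, here is a minimal Python sketch that projects a transistor count forward, assuming exactly one doubling every two years. The function name `transistors_after` and the example inputs are illustrative; real chips only approximately track this trend.

```python
def transistors_after(years: float, start_count: float, doubling_period: float = 2.0) -> float:
    """Project a transistor count, assuming one doubling every `doubling_period` years."""
    doublings = years / doubling_period
    return start_count * 2 ** doublings


# Illustrative input: the Intel 4004 (1971) had roughly 2,300 transistors.
# Fifty years of doubling every two years is 25 doublings.
projected = transistors_after(50, 2_300)
print(f"{projected:,.0f}")  # 77,175,193,600
```

Twenty-five doublings multiply the starting count by more than 33 million, which shows how quickly compute capacity, and with it the feasible scale of AI models, can compound.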

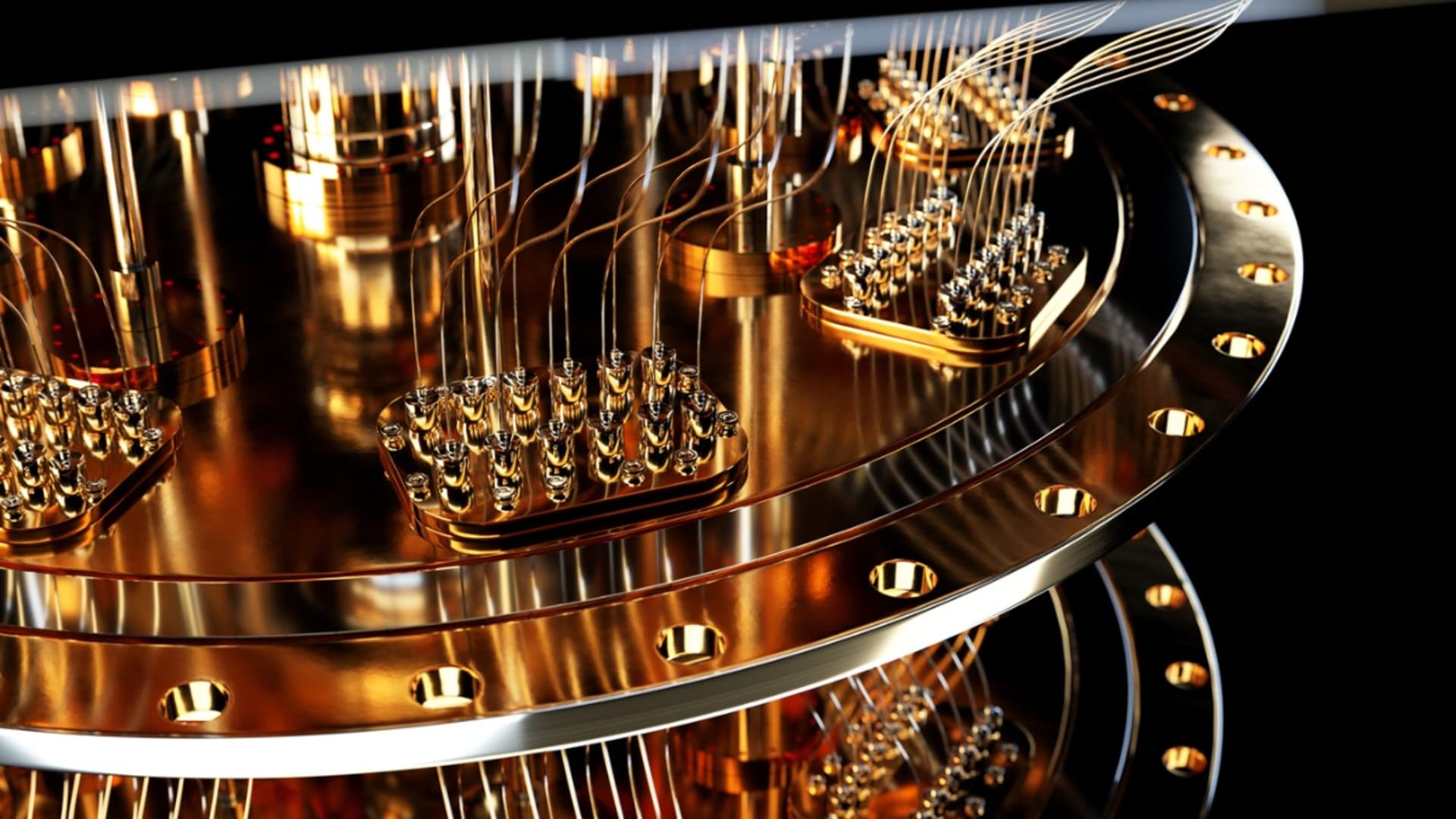

The enormous expansion of AI’s capabilities drives up power demand and fuels the proliferation of data centers. As the backbone of AI computation, these facilities house the servers that store, manage, and process the massive amounts of data that AI systems rely on. Data centers are just one example of the significant energy required to support AI’s ever-growing infrastructure.

The Reality of AI Energy Consumption and Its Carbon Footprint

AI promises groundbreaking solutions, but its enormous energy consumption is already creating an undeniably large carbon footprint. The industry must proceed with caution so that the drive to innovate does not end up worsening the planet’s ecological problems.

What Constitutes AI’s Energy Consumption?

Determining the energy consumption of an AI-based tool is a bit tricky due to the many interconnected components and processes.

Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) designed specifically to accelerate AI computations are highly efficient at their jobs but still draw significant amounts of power. Large language models, from BERT with hundreds of millions of parameters to GPT-3 with 175 billion and successors such as GPT-4, require extended training runs on these GPUs and TPUs, which further increases energy usage.

Additionally, the vast amounts of data used to develop and train an AI tool require robust storage solutions, which also consume electricity. Transferring data between storage and processing units, known as data movement, is another source of high energy usage. Together, the hardware, storage, data transfer, and model training needs of AI add up to a hefty energy bill.
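One rough way to reason about this energy bill is to add up the components listed above. The sketch below assumes a simple model: accelerator energy (count × average power × hours) plus separately estimated storage and data-movement energy, scaled by Power Usage Effectiveness (PUE), the common data-center ratio of total facility energy to IT equipment energy. The class and field names are hypothetical, and every default and example value is an assumption rather than a measured figure.

```python
from dataclasses import dataclass


@dataclass
class TrainingRun:
    """Hypothetical description of one training job; all values are user-supplied estimates."""
    num_accelerators: int           # GPUs or TPUs running in parallel
    avg_power_watts: float          # assumed average draw per accelerator
    hours: float                    # wall-clock training time
    storage_kwh: float = 0.0        # assumed extra energy for data storage
    data_movement_kwh: float = 0.0  # assumed extra energy for moving data
    pue: float = 1.1                # assumed Power Usage Effectiveness of the facility


def estimate_energy_kwh(run: TrainingRun) -> float:
    """Estimate total facility energy (kWh) for a training run."""
    # Watts x hours = watt-hours; divide by 1,000 for kilowatt-hours.
    compute_kwh = run.num_accelerators * run.avg_power_watts * run.hours / 1000
    it_kwh = compute_kwh + run.storage_kwh + run.data_movement_kwh
    # PUE scales IT energy up to include cooling and other facility overhead.
    return it_kwh * run.pue


run = TrainingRun(num_accelerators=8, avg_power_watts=400, hours=100, pue=1.5)
print(estimate_energy_kwh(run))  # 480.0
```

Keeping storage and data movement as separate inputs reflects the point that training compute is only part of the total; in practice those terms are the hardest to estimate.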

Comparison with Other Industries

Although significant, AI’s energy consumption is best understood in the context of other industries. Training an advanced AI model with a large neural network can, by some estimates, consume as much electricity as a small city. Yet AI is only one part of a much larger digital footprint: some estimates project that the energy consumption of cloud computing and data centers could reach 2,967 TWh by 2030. Aviation, meanwhile, accounts for a substantial share of global emissions and consumes far more energy than AI does today.

It’s a common misconception that AI’s digital nature inherently means low energy consumption. Individual tasks may use only minimal amounts, but training and deploying large-scale models create major energy demands. As more applications of AI appear in business, it’s crucial to approach the technology with a nuanced understanding of its energy implications, with the goal of reducing that consumption.

Concerns About Energy Consumption

The digital revolution, including AI and machine learning, has brought energy consumption concerns alongside its technological advances. The escalating energy demand, and the costs of running such technology, make it increasingly urgent to develop sustainable approaches to AI.

Environmental Impacts

Because much of the electricity powering AI still comes from non-renewable sources, AI’s escalating energy demands create a major carbon footprint. The result is higher greenhouse gas emissions and a growing contribution to global warming.
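Emissions depend on where the electricity comes from as well as on how much is used. A common first-order estimate multiplies energy consumed by the grid’s carbon intensity. In this sketch, the intensity values are placeholder assumptions chosen only to contrast a fossil-heavy grid with a low-carbon one; real figures vary by region and over time, and the function name is illustrative.

```python
def emissions_kg_co2e(energy_kwh: float, grid_intensity_g_per_kwh: float) -> float:
    """Estimate emissions in kg CO2-equivalent: energy x carbon intensity."""
    # g/kWh x kWh = grams; divide by 1,000 for kilograms.
    return energy_kwh * grid_intensity_g_per_kwh / 1000


# Placeholder intensities (assumptions, not measured data).
FOSSIL_HEAVY_G_PER_KWH = 800.0
LOW_CARBON_G_PER_KWH = 50.0

energy = 10_000.0  # hypothetical training run, in kWh
print(emissions_kg_co2e(energy, FOSSIL_HEAVY_G_PER_KWH))  # 8000.0
print(emissions_kg_co2e(energy, LOW_CARBON_G_PER_KWH))    # 500.0
```

With these placeholder values, the same run emits 16 times more on the fossil-heavy grid, which is why energy sourcing matters as much as raw consumption.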

If left unchecked, AI’s sustained, exponential growth could contribute significantly to global carbon emissions and to long-term consequences such as ecosystem disruption, accelerated climate change, and rising sea levels.

Beyond these ecological impacts, unregulated and unchecked AI energy consumption threatens future generations’ ability to live on a healthy planet. As AI progresses, developers and others working with this technology must strike a balance between remarkable technological advancement and necessary ecological preservation.

Economic Impacts

Creating, training, and powering complex AI models is also an expensive endeavor. Increased electrical demand drives up electricity bills, creating a financial burden for companies. Some companies find the cost of training and maintaining AI tools, along with the specialized energy-hungry hardware they require, prohibitive. Smaller companies and startups may find these escalating costs especially difficult to bear when competing with larger organizations.

If the economic costs associated with AI remain unchecked, the pace of AI development and deployment could slow. Companies may also choose less energy-intensive models over groundbreaking innovations simply because of these costs. In the end, the intersection of AI’s costly energy demands and economic realities could reshape the trajectory of AI, for better or worse.

Technological Limitations

As AI grows in capability and size, the energy needed to train and run both conventional and generative AI models grows rapidly. This is a sobering counterweight to the technology’s seemingly boundless potential, especially where energy resources or funding are limited. If current energy trends hold, these constraints could cap the scale and complexity of future AI models.

Furthermore, regions with more limited power infrastructure face a bigger challenge. The energy-intensive nature of advanced AI could widen the technological divide, leaving parts of the globe behind in the worldwide AI revolution. This inability to harness AI’s benefits due to power constraints underscores the need for energy-efficient solutions that keep the technology accessible as it grows.

Energy Efficiency: Solutions and Innovations

Its many applications and use cases make AI one of the most in-demand technologies on the market today. However, the spotlight must also turn to solutions and innovations that improve the technology’s energy efficiency.

By shifting the research focus to more efficient algorithms and hardware that maximize AI’s capabilities while minimizing power consumption, the industry can chart a more hopeful path forward, one grounded in environmental and economic responsibility.

Hardware Innovations

The escalating computational needs of AI call for continual hardware evolution with energy efficiency in mind. Companies like Google and NVIDIA are already pushing the boundaries with specialized chips. Google designed its Tensor Processing Units (TPUs) for high-performance, power-efficient machine learning operations, while NVIDIA develops increasingly energy-efficient GPUs for deep learning workloads.

Many startups and researchers are working on next-generation AI accelerators that prioritize energy efficiency alongside performance. For example, neuromorphic computing draws inspiration from the architecture of the human brain and has shown promising results in both energy efficiency and performance. These hardware advancements help position AI technology for a future that is both high-performing and efficient.

Software and Algorithmic Optimizations

Harnessing the power of AI without enormous energy costs requires clever algorithmic and software optimizations, and teams have a variety of techniques at their disposal. One method is model pruning, in which teams “prune” away non-essential or redundant parts of an AI model, preserving its core functionality while reducing its energy demands.
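One widely used pruning criterion is weight magnitude: the weights closest to zero are assumed to matter least and are set to zero. The minimal sketch below applies that criterion to a flat list of weights. The function name is hypothetical, and production frameworks typically prune tensors layer by layer and fine-tune the model afterward to recover accuracy.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest absolute values."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:n_prune])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]


print(magnitude_prune([0.9, -0.05, 0.3, 0.01, -0.7, 0.2], sparsity=0.5))
# [0.9, 0.0, 0.3, 0.0, -0.7, 0.0]
```

Zeroed weights only save energy if the runtime or hardware can skip them, for example through sparse kernels, or if pruning removes whole structures such as neurons or channels so that the model actually shrinks.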

Quantization is another method: it reduces the numerical precision of a model’s calculations, for example from 32-bit floating point to 8-bit integers, so the model consumes less power and runs faster, often with only a small loss of accuracy. Through knowledge distillation, teams train smaller “student” models to replicate the behavior of larger, more complex “teacher” models, reducing computational requirements while achieving similar performance.
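The following plain-Python sketch illustrates both ideas under simplifying assumptions. `quantize_int8` uses symmetric 8-bit quantization with a single scale factor, which is one common scheme among several. `distillation_loss` uses the temperature-softened KL divergence popularized by Hinton et al. for knowledge distillation; a real training loop would usually combine it with the ordinary task loss. All function names are illustrative.

```python
import math


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric int8 quantization: map floats to integers in [-127, 127] with one scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate floats; each error is at most half a quantization step."""
    return [q * scale for q in quantized]


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Softmax with temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened outputs, scaled by temperature squared."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2


q, scale = quantize_int8([0.42, -1.27, 0.03, 0.9])
print(q)  # [42, -127, 3, 90]
print(distillation_loss([2.0, 0.5, -1.0], [2.5, 0.3, -1.2]))
```

The quantized integers fit in 8 bits instead of 32, and the distillation loss is zero only when the student’s softened outputs match the teacher’s exactly.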

Renewable Energy and Carbon Offsetting

Although AI’s energy consumption remains a problem now and for the future, there are many promising advancements in sustainability. Recognizing the environmental effects of their operations, many companies are choosing greener energy sources, such as solar, wind, and hydroelectric power, to run their data centers.

Major tech companies such as Google and Amazon continue to adopt renewable energy and commit to carbon-neutral goals, and some even use AI to reduce energy use in other areas of their business.

By investing in projects that reduce or capture carbon emissions elsewhere, companies can also offset their carbon footprint over time. By tying company growth to advancements in clean energy technology and environmental responsibility, today’s tech giants are helping to create a world where harnessing AI’s power doesn’t compromise the well-being of the planet.
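Offsetting a footprint “over time” comes down to simple arithmetic. The sketch below assumes, hypothetically, that a company has a historical footprint to cancel, keeps emitting a fixed amount each year, and buys a fixed amount of offsets each year; it returns how many years the yearly surplus takes to cancel the history. It treats every offset tonne as a real, permanent reduction, which is a strong assumption in practice, and the function name and figures are illustrative.

```python
import math
from typing import Optional


def years_to_offset(historical_t: float, annual_emissions_t: float,
                    annual_offsets_t: float) -> Optional[int]:
    """Years until yearly offsets cancel a historical footprint plus ongoing emissions.

    Returns None when offsets never exceed annual emissions (the gap never closes).
    """
    if historical_t <= 0:
        return 0
    yearly_surplus = annual_offsets_t - annual_emissions_t
    if yearly_surplus <= 0:
        return None
    return math.ceil(historical_t / yearly_surplus)


# Hypothetical figures in tonnes of CO2-equivalent.
print(years_to_offset(historical_t=1_000, annual_emissions_t=500, annual_offsets_t=750))  # 4
```

The `None` case captures the key point: offsets that merely match ongoing emissions never shrink the historical footprint.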

Conclusion

Artificial intelligence is a technology of enormous, transformative potential on a global scale. However, its advancement remains tied to an equally vast energy footprint. As AI grows in both size and complexity, it raises economic and environmental concerns, from the financial strain on the organizations that use it to the carbon footprint of its massive computational needs.

Thankfully, companies in the AI field already recognize the importance of a more sustainable future for AI. Through algorithmic and software optimization, hardware innovation, renewable energy adoption, and carbon offset programs, teams are prioritizing the environment alongside technological advancement.

A sustainable evolution of AI requires companies, governments, and researchers alike to make sustainability a priority as the technology grows, balancing its revolutionary capabilities with a legacy of both global innovation and conservation for the future.