In early 2024, a finance employee at a multinational firm’s Hong Kong office joined a video call with the company’s CFO and several recognizable colleagues. He authorized transfers totaling about $25 million as instructed in the meeting, unaware that every other person on the call was a deepfake. Criminals can now render convincing deepfakes in real time and pair them with counterfeit or stolen IDs.

Gartner analysts predict that by 2026, 30% of enterprises will no longer consider facial biometric authentication reliable on its own because of the sophistication of AI deepfakes. This is a significant issue because many financial institutions depend on facial recognition to authorize bank transfers, especially high-value ones.

Source: Gartner

We can identify solutions and alternatives by exploring software vulnerabilities and deepfake methods. This information will empower businesses to fortify their defenses against this emerging cyber threat.

From Entertainment to Deception: The Rise of Deepfakes

Biometric facial recognition scans facial landmarks to match a picture to a human. The facial landmarks are converted to numbers, creating a unique facial barcode. The tech then compares the barcode with other facial barcodes in a database, scanning for possible matches. Finally, the software computes a similarity score between possible matches and determines who that face belongs to through the winning score. In 2024, biometrics like facial recognition or fingerprint scans are everywhere. They’re used in airports for border security, in most smartphone screen locks, and to authorize bank transfers.
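The matching step described above can be sketched in a few lines. This is a minimal illustration, not how any particular product works: it assumes each face has already been converted to a numeric vector (the "facial barcode"), and the function names, the cosine-similarity measure, and the 0.8 threshold are all hypothetical choices made for the example.

```python
import math

def cosine_similarity(a, b):
    """Similarity between two face vectors, from -1.0 to 1.0."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no information
    return dot / (norm_a * norm_b)

def best_match(probe, gallery, threshold=0.8):
    """Return (name, score) for the highest-scoring enrolled face.

    `gallery` maps a person's name to their enrolled vector. The
    threshold is an assumed value; real systems tune it against
    false-accept and false-reject rates.
    """
    best_name, best_score = None, -1.0
    for name, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score  # nobody is similar enough
    return best_name, best_score

# Toy three-dimensional vectors; real face vectors have hundreds of values.
gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
name, score = best_match([0.9, 0.1, 0.0], gallery)
print(name, round(score, 3))
```

Note that the comparison only asks whether two vectors are close. It has no notion of whether the face in front of the camera is real, which is exactly the gap a deepfake exploits.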

Deepfakes use AI to render hyper-realistic recordings that mimic the appearance and voice of real individuals. Deepfake creation involves training two neural networks: an encoder and a decoder. The encoder learns to recognize the target person’s facial expressions, while the decoder learns to generate a new face with similar expressions. The result can seamlessly map one person’s features onto an existing recording or a live video.

Deepfakes were initially developed for entertainment, to de-age actors or alter their appearance. Producers of the film The Irishman used digital de-aging to make Robert De Niro, then in his seventies, look 30 to 40 years younger. In David Beckham’s anti-malaria campaign, the producers used AI to deliver Beckham’s message in nine different languages. With the subject’s permission, AI deepfakes can seem futuristic and charming, but they are controversial for their potential to wreak security and copyright chaos.

In 2023, US actors went on strike for nearly four months, partly to ensure that AI deepfakes didn’t replace them. Perhaps rightly so, since Disney had already deepfaked a young Luke Skywalker, the Star Wars protagonist, in the spin-off series The Mandalorian. However, entertainment is far from the only use case. Deepfakes can also spread disinformation. Criminals have mimicked authority figures, as in a fabricated 2022 video showing Ukraine’s President Zelenskyy urging his troops to surrender. In that case, humans flagged the false video, but what happens when deepfakes reach the point where they can easily trick software?

Deepfakes and facial recognition systems clash because the deepfake mimics our facial barcode. Security systems that rely solely on facial recognition must now distinguish between authentic and altered images. New tech often makes previous software obsolete in favor of higher efficiency, better graphics, and quicker response times. In this case, tech companies must step up to prevent a mass obliteration of biometric recognition tools. They need to actively identify vulnerabilities and either fix them or offer alternatives.

Facial Recognition Vulnerabilities Under the Microscope

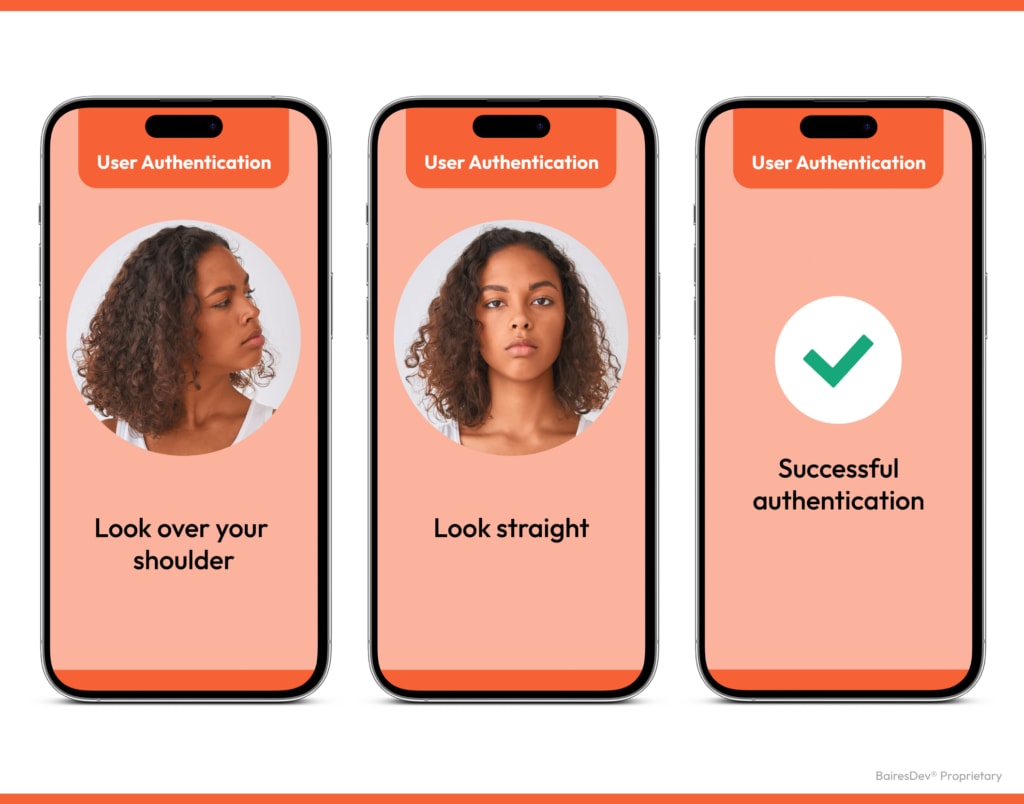

The weapon of facial recognition systems against deepfakes is called Liveness Verification. It can be implemented as software, hardware, or a combination. In many cases, it involves software algorithms that analyze video or images captured by hardware (like cameras or sensors) to detect signs of life and authenticity of the biometric data.

These algorithms scan for abnormal or absent blinking patterns, lip movements, and abnormal lighting. That’s why simply holding a static image of yourself in front of your phone camera won’t get you recognized. However, a deepfake video is not static.
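One way to picture a blink check is the eye aspect ratio (EAR), a published heuristic that compares the height of the eye opening to its width. The sketch below is illustrative only: it assumes some face-landmark detector has already supplied six (x, y) points per eye, and the 0.2 threshold and two-frame minimum are assumed values, not settings from any system mentioned in this article.

```python
import math

def eye_aspect_ratio(eye):
    """EAR for six (x, y) landmarks ordered p1..p6 around one eye.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and
    p6, p5 on the lower lid. The ratio drops toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def count_blinks(ear_per_frame, closed_below=0.2, min_frames=2):
    """Count runs of 'closed' frames that end with the eye reopening."""
    blinks, run = 0, 0
    for ear in ear_per_frame:
        if ear < closed_below:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    return blinks

# A printed photo yields a flat EAR series, so no blink is ever counted.
static_photo = [0.31] * 30
live_face = [0.31, 0.30, 0.12, 0.10, 0.29, 0.31]
print(count_blinks(static_photo), count_blinks(live_face))
```

A check like this stops a static photo, but a real-time deepfake driven by the attacker's own face blinks whenever the attacker does, so it passes.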

In 2022, Sensity, a security company, ran a series of tests on standard facial recognition systems that rely on liveness detection to prevent fraudulent logins. In the experiment, the company bypassed the system in five steps:

- Create a deepfake of an ID.

- Face swap the deepfake with the ID photo.

- Place the tester’s face in front of the webcam.

- Swap the face of the tester with the deepfake used on the ID in step 1.

- The software runs its likeness and liveness tests and matches the tester, masked by the deepfake, to the original ID.

In effect, the attack is a highly sophisticated photo filter. You’ve most likely used filters in a Zoom meeting or Instagram video to make small changes to your image. Filters let you add flattering lighting, banish those dark circles, and spruce up makeup instantly. This technology is similar. It works because an attacker armed with a deepfake filter can move their head, blink, and smile, easily spoofing the liveness check software.

Another vulnerability arises from the ease with which facial data can be collected and replicated. When you walk through a crowded place, your face can be captured by a tourist’s video or a security camera. If uploaded online or stolen in a data breach, this footage could serve as a master key for crafting your digital likeness. Unlike guarded data like Social Security numbers, the ease of sharing photos and videos creates a feeding ground for deepfakes. Public spaces and online profiles become treasure troves for deepfake creators fueled by our digital footprint. We depend wholeheartedly on companies that store video content to safeguard our data.

In Snowflake’s 2024 Data and AI Predictions, specialists highlight that the widespread availability of generative AI will cause an “assault on reality.” Without clear indicators of a video’s authenticity, the population will have to err on the side of caution, assuming it is a deepfake. In a world of unchecked deepfakes powered by AI, both disinformation and distrust will be rampant. And that is a worrisome thought. Governments will need to apply compliance and regulations to quell criminal activity, while software developers must come up with imaginative solutions to beat deepfakes.

Potential Solutions and Alternatives to Support Facial Recognition Software

To prevent the demise of facial recognition software, we need to fortify it with additional authentication solutions. One potential strategy is to pit AI against AI. By consistently updating AI models with fresh data on deepfakes, our systems can remain one step ahead of the techniques adversaries use to create these fakes. Instead of simple liveness tests that measure predictable, easily imitated patterns like blinking and facial movements, we could create “live captchas.”

A live captcha could be a series of movements or tasks required from the user that a fraudster can’t preempt. Traditionally, a captcha creates a random series of numbers, letters, or images where you need to identify something. It prevents bots from spamming logins by guessing passwords repeatedly. When using facial recognition, the user could be sent a randomized puzzle of movements that a deepfake filter or video couldn’t render seamlessly.
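A rough sketch of how the server side of such a live captcha might look follows. Everything here is hypothetical: the action names, the 15-second time limit, and the functions are invented for illustration, and the hard part (having a vision model decide which movements the user actually performed) is assumed to happen elsewhere.

```python
import secrets
import time
from dataclasses import dataclass

# Hypothetical set of movements the user can be asked to perform.
ACTIONS = ("turn_left", "turn_right", "look_up", "look_down", "smile", "blink_twice")

@dataclass(frozen=True)
class Challenge:
    nonce: str          # ties a response to this specific challenge
    steps: tuple        # the random movements, in order
    expires_at: float   # Unix time after which the challenge is void

def issue_challenge(num_steps=3, ttl_seconds=15.0, now=None):
    """Pick unpredictable movements with a cryptographic random source."""
    now = time.time() if now is None else now
    steps = tuple(secrets.choice(ACTIONS) for _ in range(num_steps))
    return Challenge(secrets.token_hex(16), steps, now + ttl_seconds)

def verify_response(challenge, nonce, observed_steps, now=None):
    """Accept only the right movements, in order, before the deadline."""
    now = time.time() if now is None else now
    if now > challenge.expires_at:
        return False
    if not secrets.compare_digest(nonce.encode(), challenge.nonce.encode()):
        return False
    return tuple(observed_steps) == challenge.steps

challenge = issue_challenge()
print(challenge.steps)
print(verify_response(challenge, challenge.nonce, challenge.steps))
```

The short expiry matters as much as the randomness: a pre-recorded video cannot know the sequence in advance, and a real-time filter has only seconds to render unusual head poses without visible artifacts.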

However, this approach can be costly, especially for companies that validate identities for routine access. Many digital health records can be accessed via facial recognition on a cellphone, but implementing AI defenses or live captchas could increase insurance premiums because someone needs to foot the tech bill. Instead, companies could keep verification lightweight when the risks are low and reserve more robust methods for larger, less common transfers.

We could also pair facial recognition with other verification methods, including other biometrics such as fingerprint scans, iris recognition, or voice verification. By requiring multiple forms of identification, these systems significantly reduce the risk that a deepfake could successfully impersonate an individual. It’s exceedingly difficult to replicate a face while simultaneously faking a fingerprint. However, users will need adequate hardware, like advanced smartphones, which may not be affordable or available to everyone. Companies face a balancing act. They must ensure security while making their services accessible to a broad customer base, some of whom might lack the necessary hardware.
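The idea of matching verification strength to risk and combining several factors can be expressed as a small policy function. This is a sketch under stated assumptions: the dollar thresholds, the factor names, and the "new payee" signal are hypothetical and would need to be set by each institution's own risk model.

```python
def required_factors(amount_usd, is_new_payee):
    """Return the checks a transfer must pass, scaled to its risk.

    Thresholds are illustrative assumptions, not industry standards.
    """
    factors = ["face"]
    if amount_usd >= 1_000 or is_new_payee:
        factors.append("live_challenge")      # e.g. a randomized movement test
    if amount_usd >= 10_000:
        factors.append("second_biometric")    # e.g. fingerprint, iris, or voice
    return factors

def is_authorized(amount_usd, is_new_payee, passed_factors):
    """Authorize only if every required factor was passed."""
    needed = set(required_factors(amount_usd, is_new_payee))
    return needed <= set(passed_factors)

# A small payment to a known payee needs only the face match.
print(required_factors(50, is_new_payee=False))
# A large transfer is refused if only the face check was passed.
print(is_authorized(25_000_000, is_new_payee=True, passed_factors=["face"]))
```

Keeping the policy in one place makes the cost trade-off explicit: the expensive checks run only on the small share of transactions where a successful deepfake would do the most damage.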

Blockchain technology, which works like a digital record book, can help verify where digital media comes from. Blockchain uses distributed ledgers to register blocks of cryptographically secured data across a network. The result is a tamper-proof and traceable record. In a recent trial, academic researchers combined facial recognition with blockchain technology to build an e-voting system designed to be secure, fraud-proof, and tamper-proof.

This method was tested with 100 participants and achieved an 88% success rate in initial online voting experiments. The experiment demonstrated strong potential for reliable use in real-world elections. If we pair facial recognition systems with blockchain, we can track how digital content is created and changed over time. This way, we can ensure that the data used for facial recognition is legitimate and reliable.
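To show why a chained record makes tampering detectable, here is a deliberately simplified sketch. It is a single-machine hash chain, not a real blockchain: there is no network, no consensus, and no connection to the e-voting study described above. The record layout and function names are assumptions made for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def _digest(index, media_hash, prev_hash):
    """Hash a record's contents together with the previous record's hash."""
    payload = json.dumps(
        {"index": index, "media_hash": media_hash, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_record(chain, media_bytes):
    """Register a fingerprint of some media (e.g. an enrolled face image)."""
    index = len(chain)
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    media_hash = hashlib.sha256(media_bytes).hexdigest()
    record = {
        "index": index,
        "media_hash": media_hash,
        "prev_hash": prev_hash,
        "hash": _digest(index, media_hash, prev_hash),
    }
    chain.append(record)
    return record

def verify_chain(chain):
    """Recompute every hash; any edited record breaks the chain."""
    prev_hash = GENESIS
    for index, record in enumerate(chain):
        if record["index"] != index or record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(index, record["media_hash"], prev_hash):
            return False
        prev_hash = record["hash"]
    return True

chain = []
append_record(chain, b"original enrollment photo")
append_record(chain, b"updated enrollment photo")
print(verify_chain(chain))
chain[0]["media_hash"] = hashlib.sha256(b"swapped-in deepfake").hexdigest()
print(verify_chain(chain))
```

Because each record's hash depends on the one before it, swapping in altered media after the fact changes a hash that every later record relies on. A distributed ledger adds the further guarantee that no single party can quietly rewrite the whole chain.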

If you would like to integrate blockchain technology into your authentication methods, experts at BairesDev can help you address this business goal. Complex technologies can be intimidating, but don’t let that be a barrier to adoption. Partner up with seasoned engineers to help you elevate your offerings.

Are Facial Recognition Logins’ Days Numbered?

The World Economic Forum has ranked disinformation and misinformation as the biggest short-term risk in 2024. Behind that analysis is the rise of AI deepfakes. GenAI is already relatively accessible, and deepfakes have reached the mainstream. For example, in early 2024, over 100 deepfake video advertisements of UK Prime Minister Rishi Sunak appeared as paid ads on Meta’s platform.

For the foreseeable future, the risks posed by deepfakes will probably push everyone toward zero-trust policies. The days of signing in with your face alone could be numbered. Companies might need to pair facial recognition tech with trailblazing alternatives like blockchain, more sensitive liveness tests, and AI models trained to counter malicious deepfakes. If you’re unsure of where to find AI experts focused on security, selected experts in our talent pool are ready to support you with AI development services.

You need to be very mindful of the technology partners you choose, as these solutions can be complex and ramp up costs. Finding cost-effective ways to identify and address vulnerabilities will become a priority. Doing so keeps facial recognition services accessible to most of the population and prevents smaller tech firms from being excluded. Otherwise, the technology is at risk of becoming obsolete right at the peak of widespread adoption.