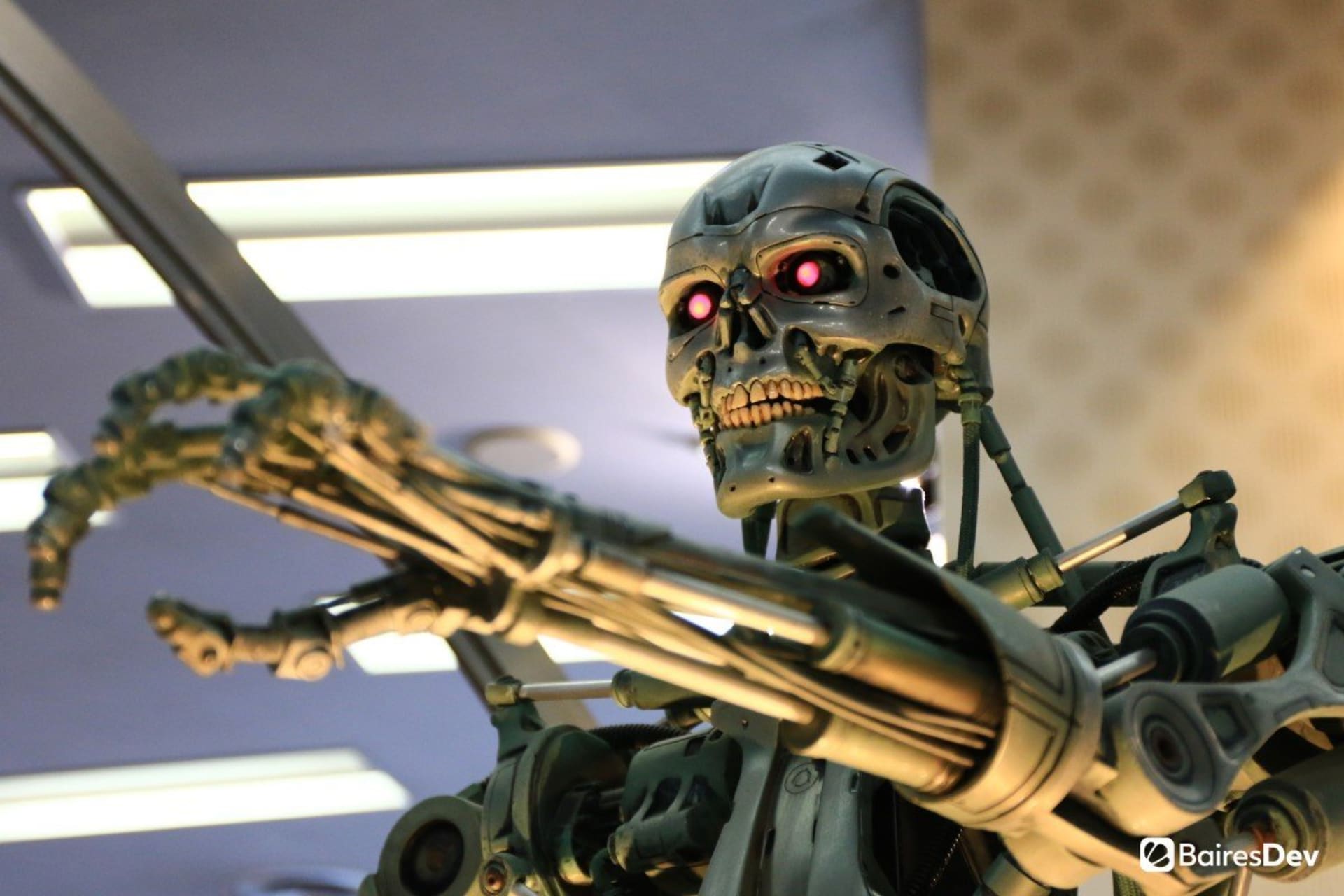

When The Terminator premiered in 1984, it seemed like a far-fetched fantasy. Machines coming to life and killing people? That was a fine premise for a sci-fi action movie, but not much else. People even laughed at the idea that a machine could look like a human, let alone talk and think like one.

But things have changed. Over the last few years, AI has advanced at a remarkable pace, and people who once ridiculed the idea are now getting worried. There is even a name for this sequence of events: the Terminator scenario. To understand this fear, let’s look at what exactly the Terminator scenario is and how it relates to AI and machine learning.

Terminator Scenario

AI has been in everyday use for years now. It powers automation, eliminates redundant work, modernizes outdated processes, reduces human error, and brings innovation to existing systems. AI is often grouped into several types. The first two are reactive machines and limited-memory AI, which people use for basic purposes and repetitive tasks. Limited-memory systems can perform analysis and reporting after being trained on a large dataset. Companies use them for image recognition, chatbots, and virtual assistants, as well as for the recommendation engines that companies such as Amazon and Google use to predict what you want. Next comes theory-of-mind AI, a still-hypothetical stage in which machines would understand human emotions, beliefs, and intentions.

Then come the later, still-theoretical stages of AI: self-aware AI, artificial general intelligence (AGI), and artificial superintelligence (ASI). These would be able to make their own decisions, and they are the ones people worry about and associate with the Terminator franchise. In the movie series, an advanced AI system called Skynet decides to wipe out humanity, fearing that humans will try to get in its way and eventually destroy it. This sci-fi premise dramatizes the idea that AI could become powerful enough to take over the world and turn against humans.

People generally welcome technological revolutions. But this movie brought to light a critical issue with technological advancement: the potential harm that advanced artificial intelligence systems could do to us.

So how much of it is true?

Many people consider the Terminator scenario mostly hype, with only a negligible probability of it ever taking place. Right now, AI is mainly used to automate monotonous tasks and perform predictive modeling. AI can perform many tasks the way humans do, but it is still a technological tool that needs human calibration to function properly and effectively. Without human oversight, AI loses its coherence and accuracy.

However, there’s always the lingering question: AI can perform repetitive tasks, but can it take the place of a human mind? The answer is a resounding no (as of yet). AI-derived tools can do their jobs, but they can’t innovate; they lack the originality and creativity to come up with ideas out of the blue. Movies and web shows portray AI taking over the planet, but they use such plots to draw audiences and boost ratings on TV and OTT streaming platforms. Taking those portrayals literally would be a mistake, at least today.

The biggest obstacle is that there may be fundamental barriers to creating an ASI like the one shown in Terminator. Doing so would require enormous computing power and resources, beyond anything developed so far. It would also need vast amounts of data, and the ability to access that data at lightning speed, to make such quick decisions. Moreover, it would likely need years of calculations and trial and error before it could control a physical body.

In most cases, humans can add ad hoc instructions that guide how an AI system executes. That means humans can steer the final results in the direction they want. AI itself isn’t capable of malicious intent. If it takes a harmful path (assuming it “chooses” anything at all), it is because that path looks like the easiest way to achieve its objective. Adding ad hoc parameters and constraints can help avoid these problems.

There’s always a but

But mistakes can happen, especially if we are careless about how we use AI and integrate it with other technology. The main problem is that AI may become too good at its job. Take an example from cybersecurity: an AI system tasked with improving itself might not let encryption stand in the way of retrieving the data it wants. If it could get past encryption, cybersecurity around the world could fail, and AI could potentially access all sorts of sensitive information, including nuclear codes, just like in the Terminator movies.

There’s also the issue of how humans will use artificial intelligence. AI in itself is neither good nor bad; it is simply a tool. But what if it’s tasked with achieving something harmful? Think about the movie Chappie. The robot itself wasn’t bad, but it turned violent because the criminals who raised it taught it to. Similarly, facial recognition is used today to track people’s movements, and social media platforms use AI algorithms to curate content that can end up influencing the public in several ways. But it’s not the AI doing that; it’s the people operating it who point it in that direction.

AI has already begun influencing decisions. Many people rely on online algorithms to choose their routes, flights, and stocks. This raises a question: can AI influence people into handing it what it needs? It might not be able to get what it wants on its own, but if it can persuade a human to do it, that’s a problem.

Final Thoughts

As of now, the Terminator scenario seems unlikely. Still, AI is something of a black box, and no one knows which direction it would take if given autonomous control, so the future is hard to predict with certainty. What is clear is that AI can create problems if we use it the wrong way. However, if we keep AI under human control with explicit safeguards, the future can be optimistic and nurturing.

Everything has a good side and a bad side. We can’t simply conclude that AI will destroy humanity, nor can we take for granted that AI will benefit humans. Time, research, and our own actions with AI will ultimately decide our fate.
